Introduction to the Workshop

Overview

Teaching: 15 min

Exercises: 0 min

Questions

What is The Carpentries?

What will the workshop cover?

What else do I need to know about the workshop?

Objectives

Introduce The Carpentries.

Go over logistics.

Introduce the workshop goals.

What is The Carpentries?

The Carpentries is a global organization whose mission is to teach researchers, and others, the basics of coding so that you can use it in your own work. We believe everyone can learn to code, and that a lot of you will find it very useful for things such as data analysis and plotting.

Our workshops are targeted to absolute beginners, and we expect that you have zero coding experience coming in. That being said, you’re welcome to attend a workshop if you already have a coding background but want to learn more!

To provide an inclusive learning environment, we follow The Carpentries Code of Conduct. We expect that instructors, helpers, and learners abide by this code of conduct, including practicing the following behaviors:

- Use welcoming and inclusive language.

- Be respectful of different viewpoints and experiences.

- Gracefully accept constructive criticism.

- Focus on what is best for the community.

- Show courtesy and respect towards other community members.

You can report any violations to the Code of Conduct by filling out this form.

Introducing the instructors and helpers

Now that you know a little about The Carpentries as an organization, the instructors and helpers will introduce themselves and what they’ll be teaching/helping with.

The etherpad & introducing participants

Now it’s time for the participants to introduce themselves. Instead of verbally, the participants will use the etherpad to write out their introduction. We use the etherpad to take communal notes during the workshop. Feel free to add your own notes on there whenever you’d like. Go to the etherpad and write down your name, role, affiliation, and work/research area.

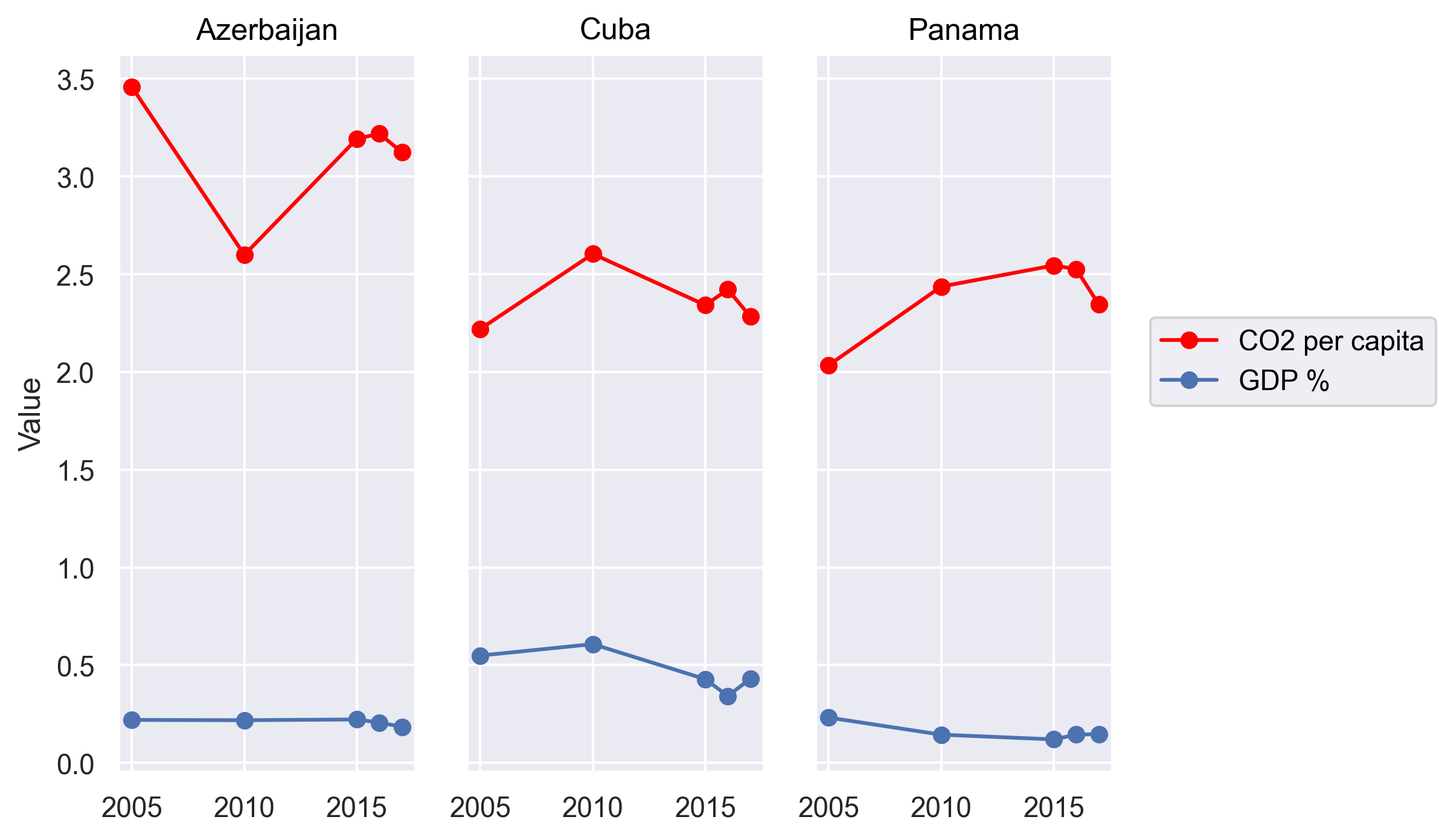

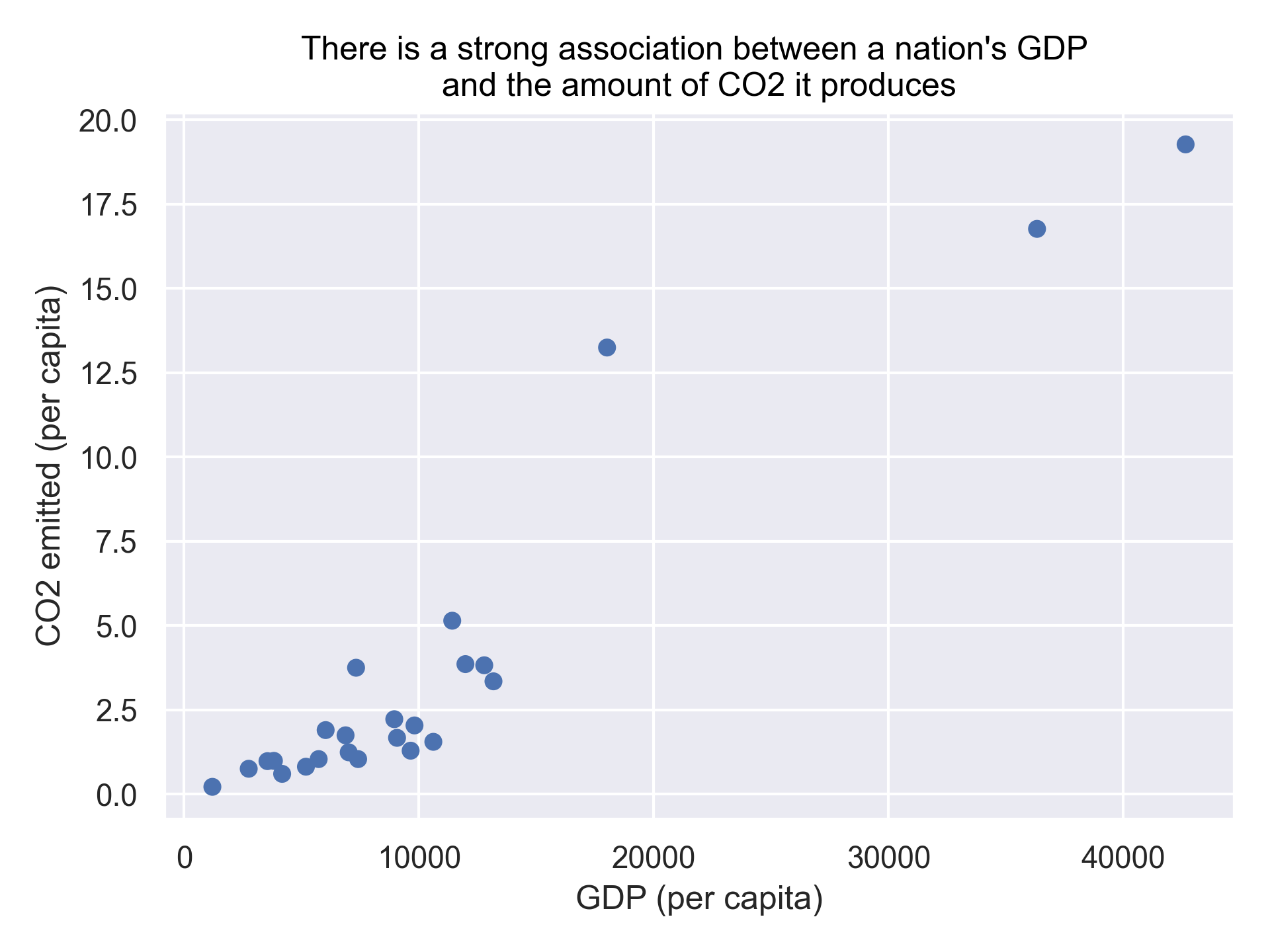

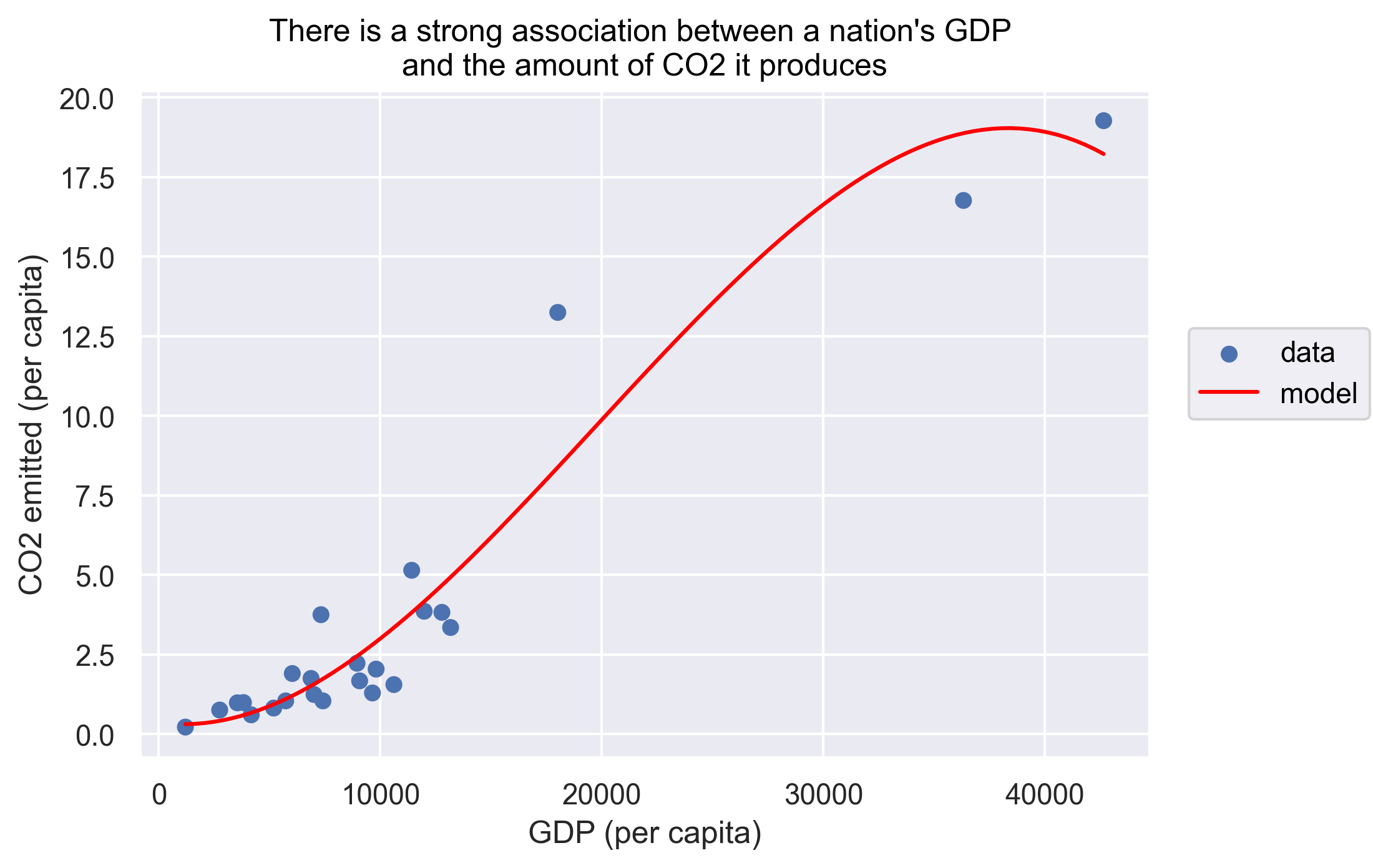

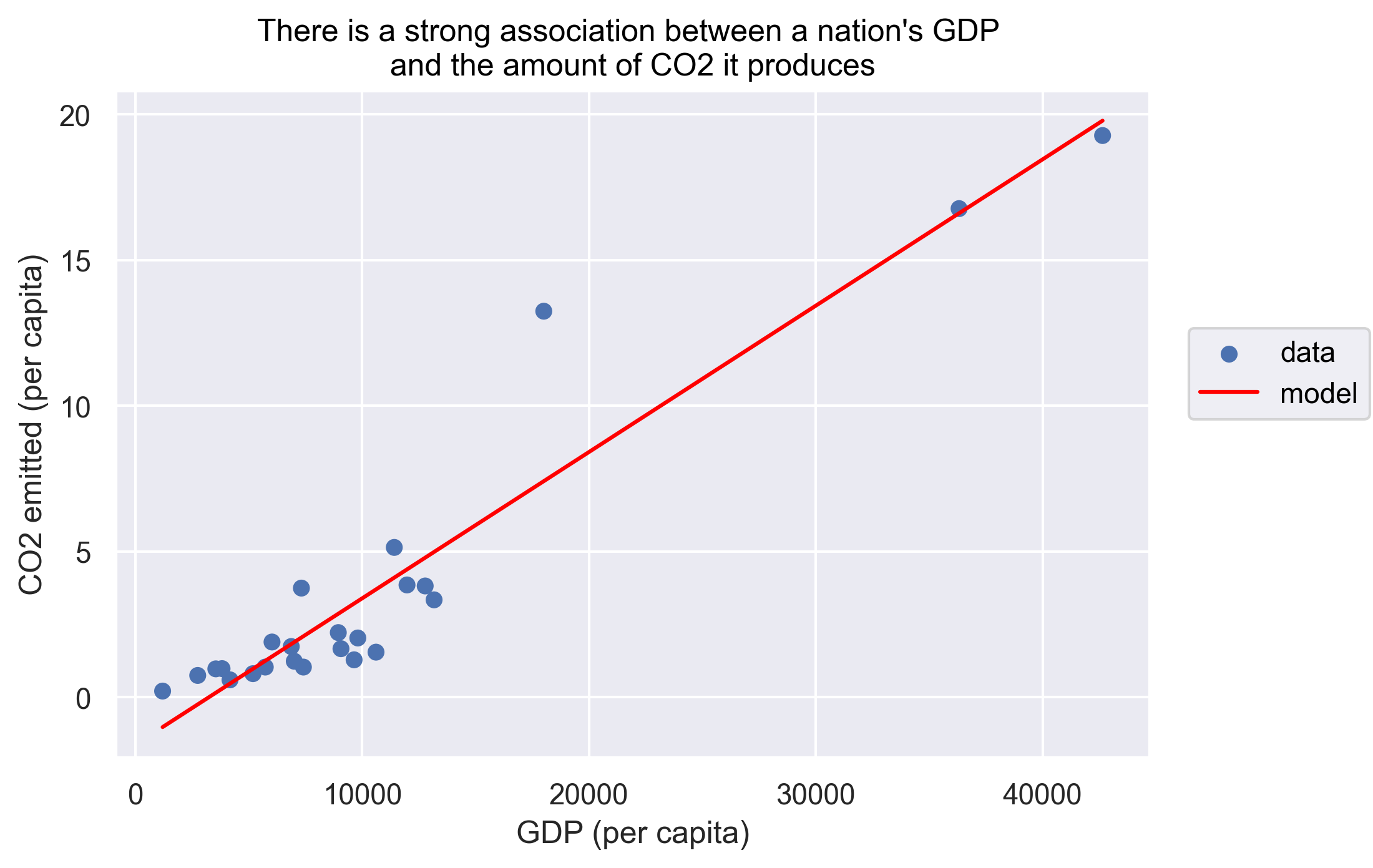

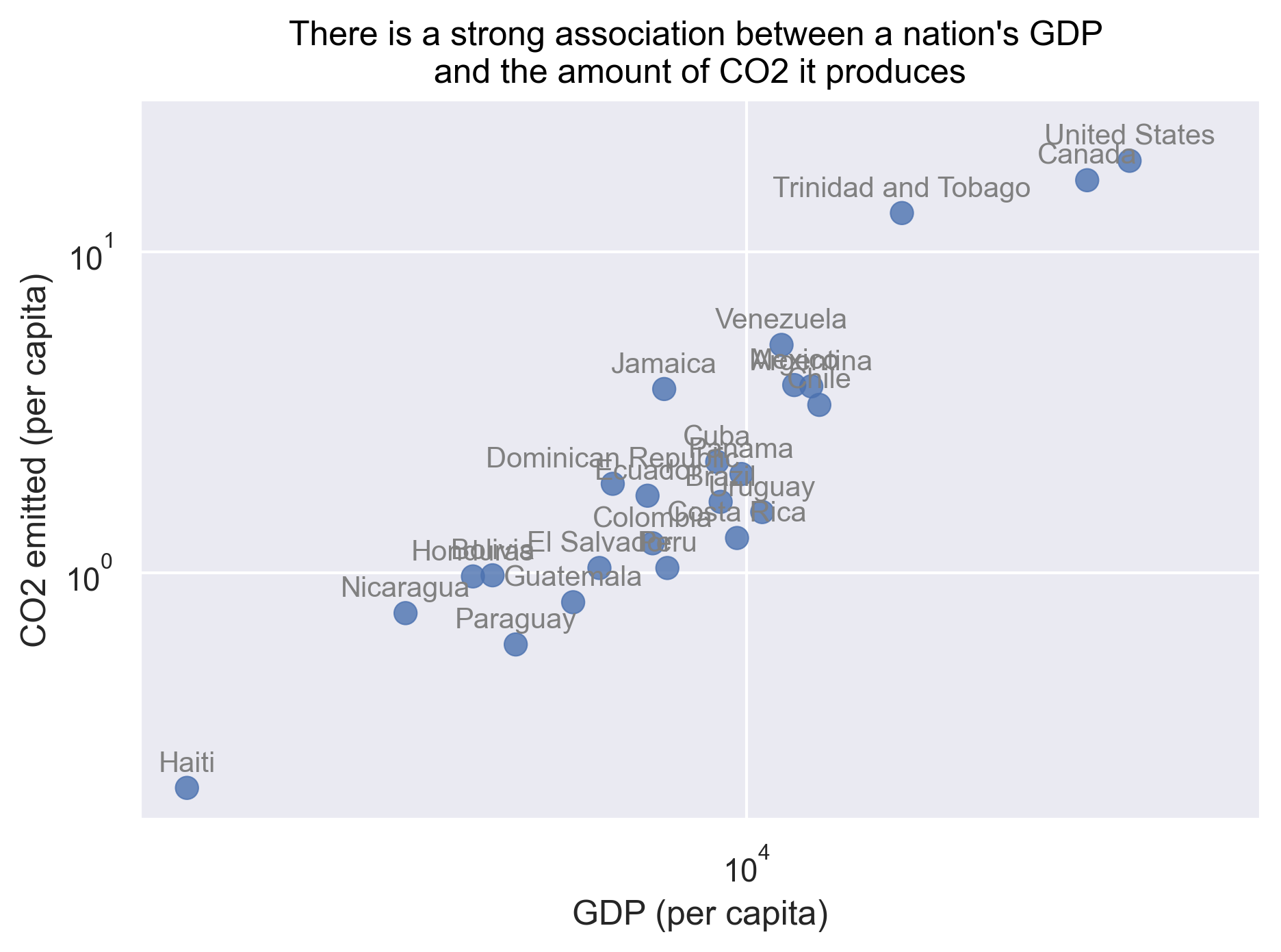

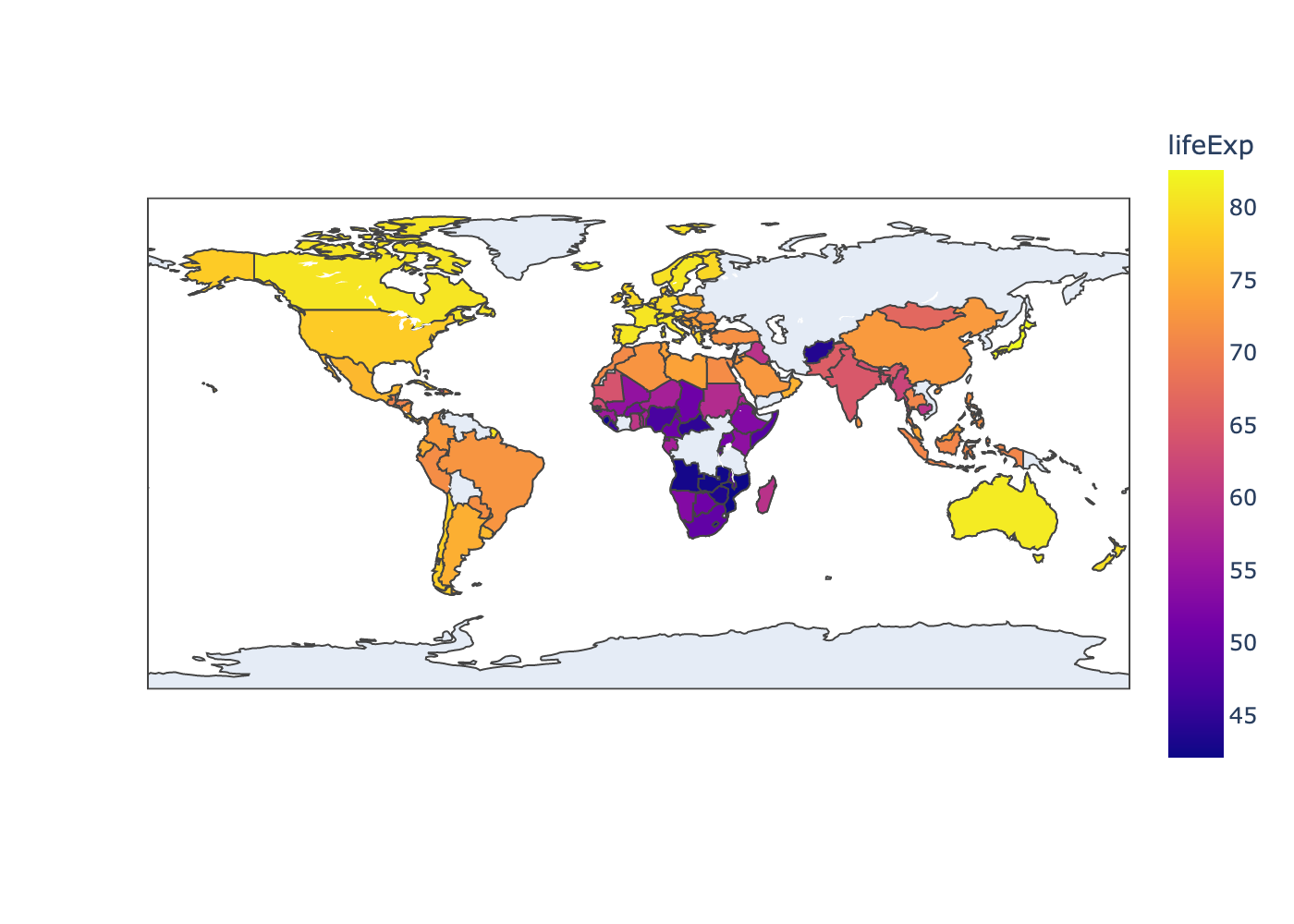

The “goal” of the workshop

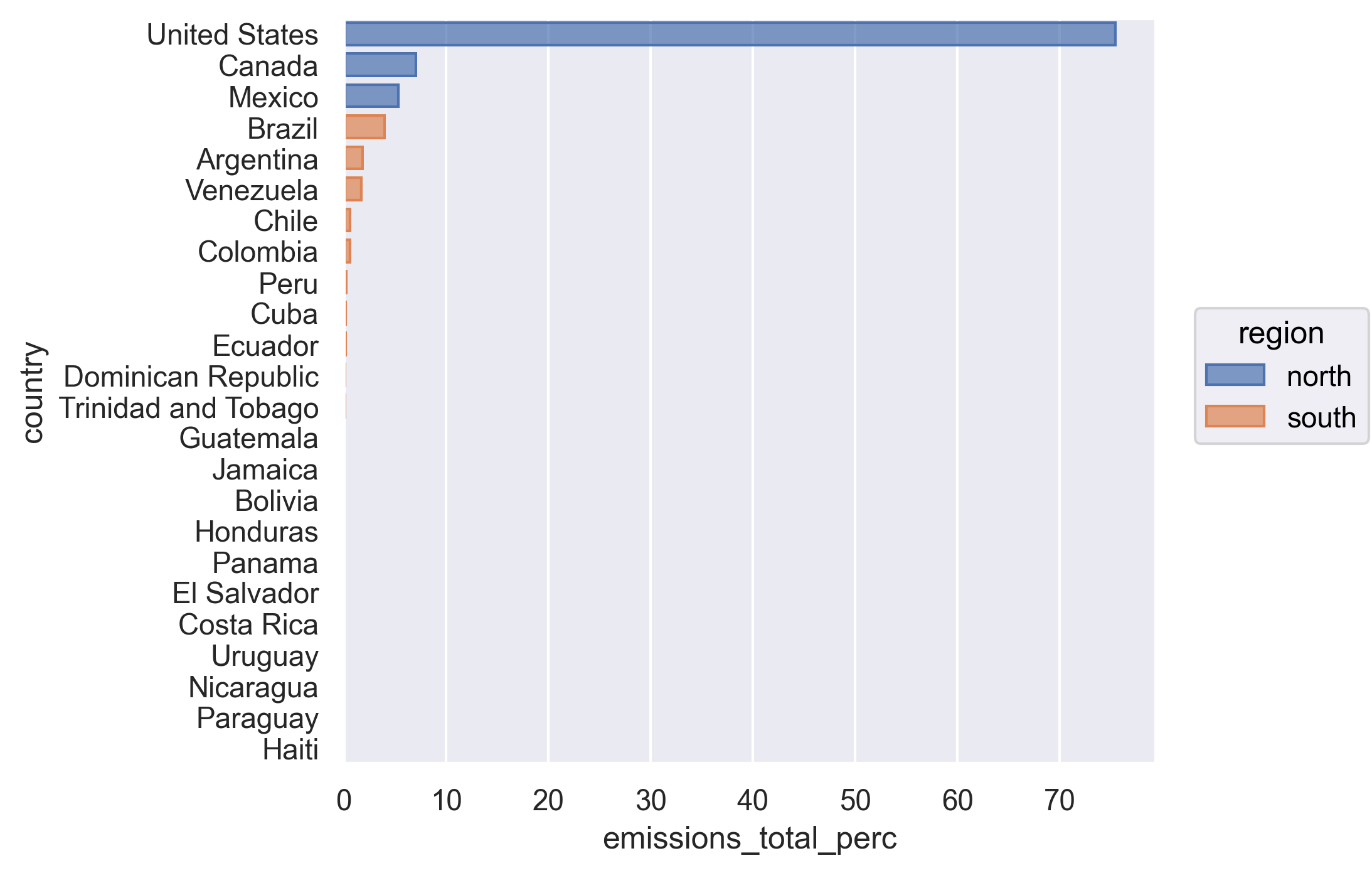

Now that we all know each other, let’s learn a bit more about why we’re here. Our goal is to write a report to the United Nations on the relationship between GDP, life expectancy, and CO2 emissions. In other words, we are going to analyze how countries’ economic strength or weakness may be related to public health status and climate pollution, respectively.

To get to that point, we’ll need to learn how to manage data, make plots, and generate reports. The next section discusses in more detail exactly what we will cover.

What will the workshop cover?

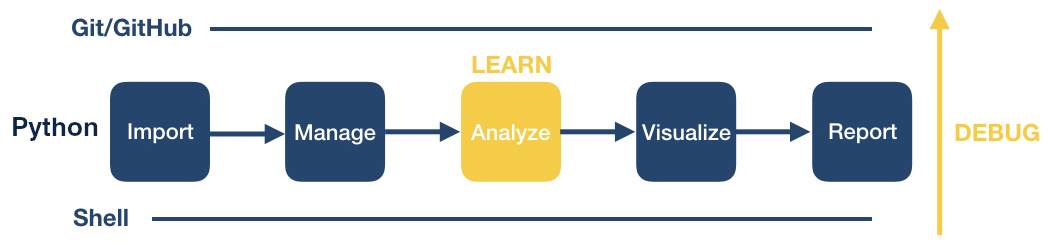

This workshop will introduce you to some of the programs used every day in computational workflows in diverse fields: microbiology, statistics, neuroscience, genetics, the social and behavioral sciences, such as psychology, economics, public health, and many others.

A workflow is a set of steps to read data, analyze it, and produce numerical and graphical results that support an assertion or hypothesis. These steps are encapsulated in a set of computer files that can be run from scratch on the same data to obtain the same results. This is highly desirable in situations where the same work is done repeatedly – think of processing data from an annual survey, or results from a high-throughput sequencer on a new sample. It is also desirable for reproducibility, which enables you and other people to look at what you did and produce the same results later on. It is increasingly common for people to publish scientific articles along with the data and computer code that generated the results discussed within them.

The programs to be introduced are:

- Python, JupyterLab: a general-purpose programming language and an interface to it. We’ll use these tools to manage data and make pretty plots!

- Git: a program to help you keep track of changes to your programs over time.

- GitHub: a web application that makes sharing your programs and working on them with others much easier. It can also be used to generate a citable reference to your computer code.

- The Unix shell (command line): A tool that is extremely useful for managing both data and program files and chaining together discrete steps in your workflow (automation).

We will not try to make you an expert or even proficient with any of them, but we hope to demonstrate the basics of controlling your code, automating your work, and creating reproducible programs. We also hope to provide you with some fundamentals that you can incorporate in your own work.

At the end, we provide links to resources you can use to learn about these topics in more depth than this workshop can provide.

Asking questions and getting help

One last note before we get into the workshop.

If you have general questions about a topic, please raise your hand (in person or virtually) to ask it. Virtually, you can also ask the question in the chat. The instructor will definitely be willing to answer!

For more specific nitty-gritty questions about issues you’re having individually, we use sticky notes (in person) or Zoom buttons (red x/green check) to indicate whether you are on track or need help. We’ll use these throughout the workshop to help us determine when you need help with a specific issue (a helper will come help), whether our pace is too fast, and whether you are finished with exercises. If you indicate that you need help because, for instance, you get an error in your code (e.g. red sticky/Zoom button), a helper will message you and (if you’re virtual) possibly go to a breakout room with you to help you figure things out. Feel free to also call helpers over through a hand wave or a message if we don’t see your sticky!

Other miscellaneous things

If you’re in person, we’ll tell you where the bathrooms are! If you’re virtual we hope you know. :) Let us know if there are any accommodations we can provide to help make your learning experience better!

Key Points

We follow The Carpentries Code of Conduct.

Our goal is to generate a shareable and reproducible report by the end of the workshop.

This lesson content is targeted to absolute beginners with no coding experience.

Python for Plotting

Overview

Teaching: 120 min

Exercises: 30 min

Questions

What are Python and JupyterLab?

How do I read data into Python?

How can I use Python to create and save professional data visualizations?

Objectives

To become oriented with Python and JupyterLab.

To be able to read in data from csv files.

To create plots with both discrete and continuous variables.

To understand transforming and plotting data using the seaborn library.

To be able to modify a plot’s color, theme, and axis labels.

To be able to save plots to a local directory.

Contents

- Introduction to Python and JupyterLab

- Python basics

- Loading and reviewing data

- Understanding commands

- Creating our first plot

- Plotting for data exploration

- Bonus

- Glossary of terms

Bonus: why learn to program?

Share why you’re interested in learning how to code.

Solution:

There are lots of different reasons, including to perform data analysis and generate figures. I’m sure you have more specific reasons for why you’d like to learn!

Introduction to Python and JupyterLab

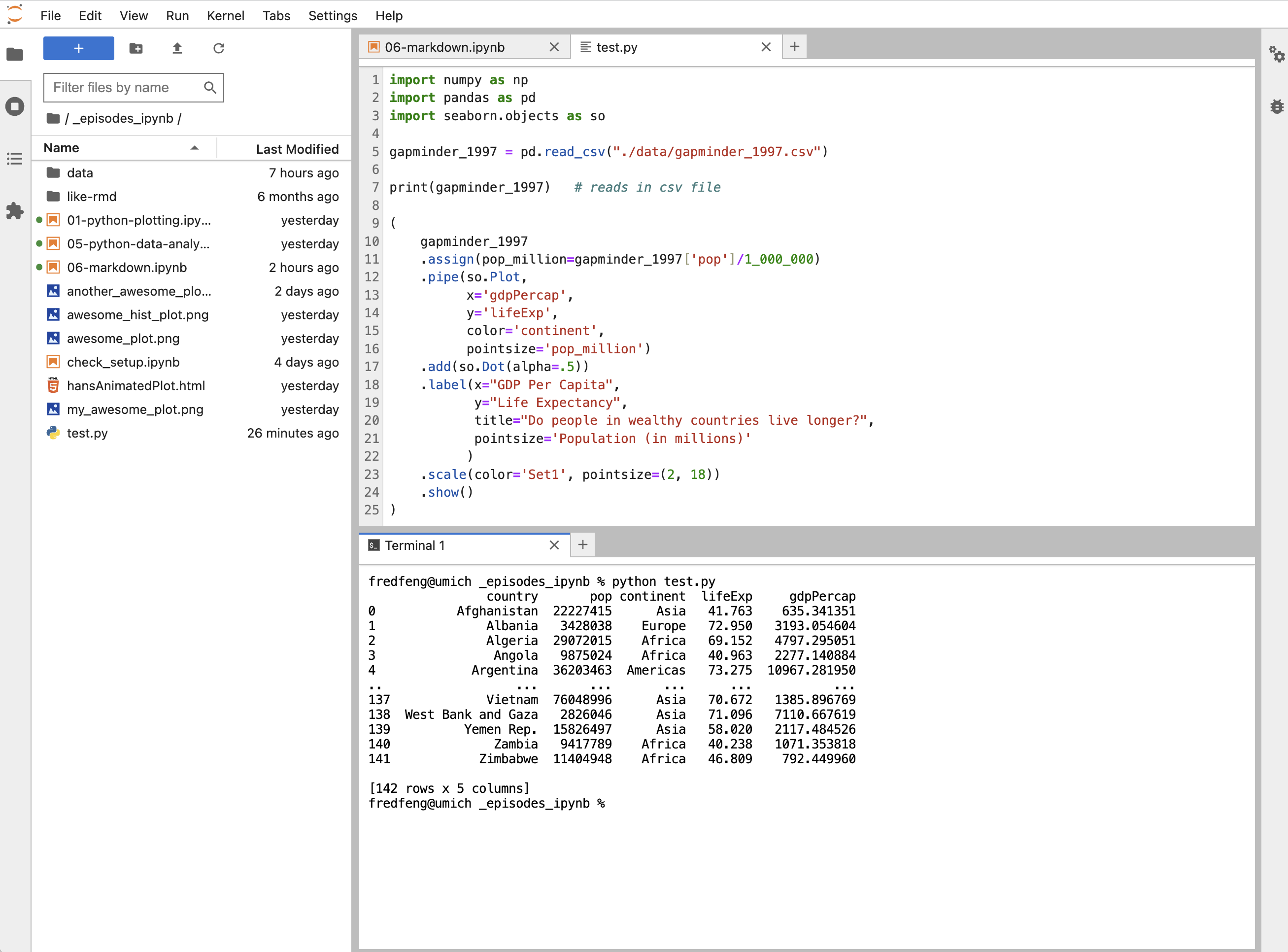

In this session we will be testing the hypothesis that a country’s life expectancy is related to the total value of its finished goods and services, also known as the Gross Domestic Product (GDP). To test this hypothesis, we’ll need two things: data and a platform to analyze the data.

You already downloaded the data. But what platform will we use to analyze the data? We have many options!

We could try to use a spreadsheet program like Microsoft Excel or Google Sheets, but these have limited access, less flexibility, and don’t easily allow for things that are critical to “reproducible” research, like easily sharing the steps used to explore and make changes to the original data.

Instead, we’ll use a programming language to test our hypothesis. Today we will use Python, but we could have also used R for the same reasons we chose Python (and we teach workshops for both languages). Both Python and R are freely available, the instructions you use to do the analysis are easily shared, and by using reproducible practices, it’s straightforward to add more data or to change settings like colors or the size of a plotting symbol.

But why Python and not R?

There’s no great reason. Although there are subtle differences between the languages, it’s ultimately a matter of personal preference. Both are powerful and popular languages that have very well developed and welcoming communities of scientists that use them. As you learn more about Python, you may find things that are annoying in Python that aren’t so annoying in R; the same could be said of learning R. If the community you work in uses Python, then you’re in the right place.

To run Python, all you really need is the Python program, which is available for computers running the Windows, Mac OS X, or Linux operating systems. In this workshop, we will use Anaconda, a popular Python distribution bundled with other popular tools (e.g., many Python data science libraries). We will use JupyterLab (which comes with Anaconda) as the integrated development environment (IDE) for writing and running code, managing projects, getting help, and much more.

Bonus Exercise: Can you think of a reason you might not want to use JupyterLab?

Solution:

On some high-performance computer systems (e.g. Amazon Web Services) you typically can’t get a display like JupyterLab to open. If you’re at the University of Michigan and have access to Great Lakes, then you might want to learn more about resources to run JupyterLab on Great Lakes.

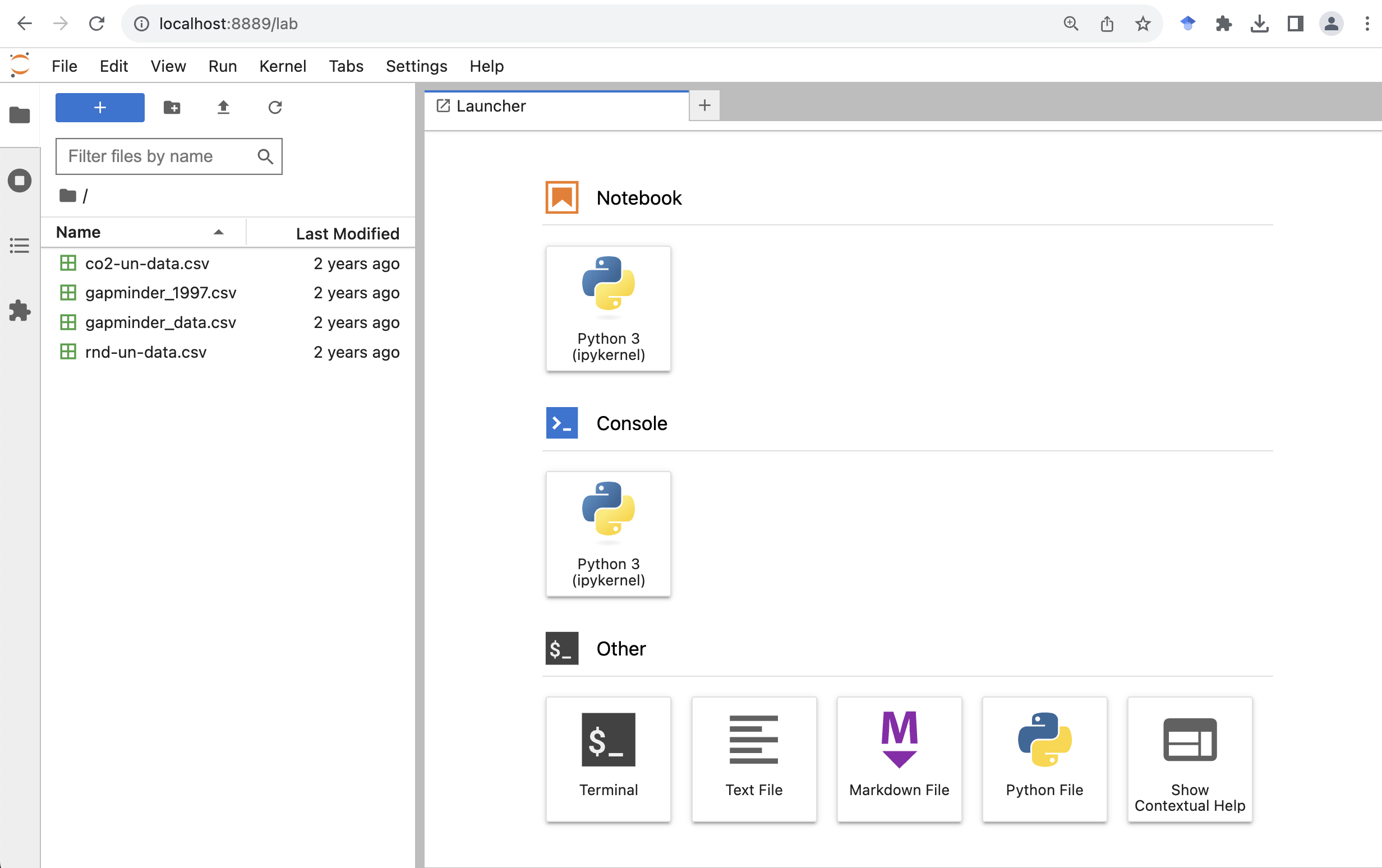

To get started, we’ll spend a little time getting familiar with the JupyterLab interface. When we start JupyterLab, on the left side there’s a collapsible sidebar that contains a file browser where we can see all the files and directories on our system.

On the right side is the main work area where we can write code, see the outputs, and do other things. Now let’s create a new Jupyter notebook by clicking the “Python 3” button (under the “Notebook” category) on the “Launcher” tab.

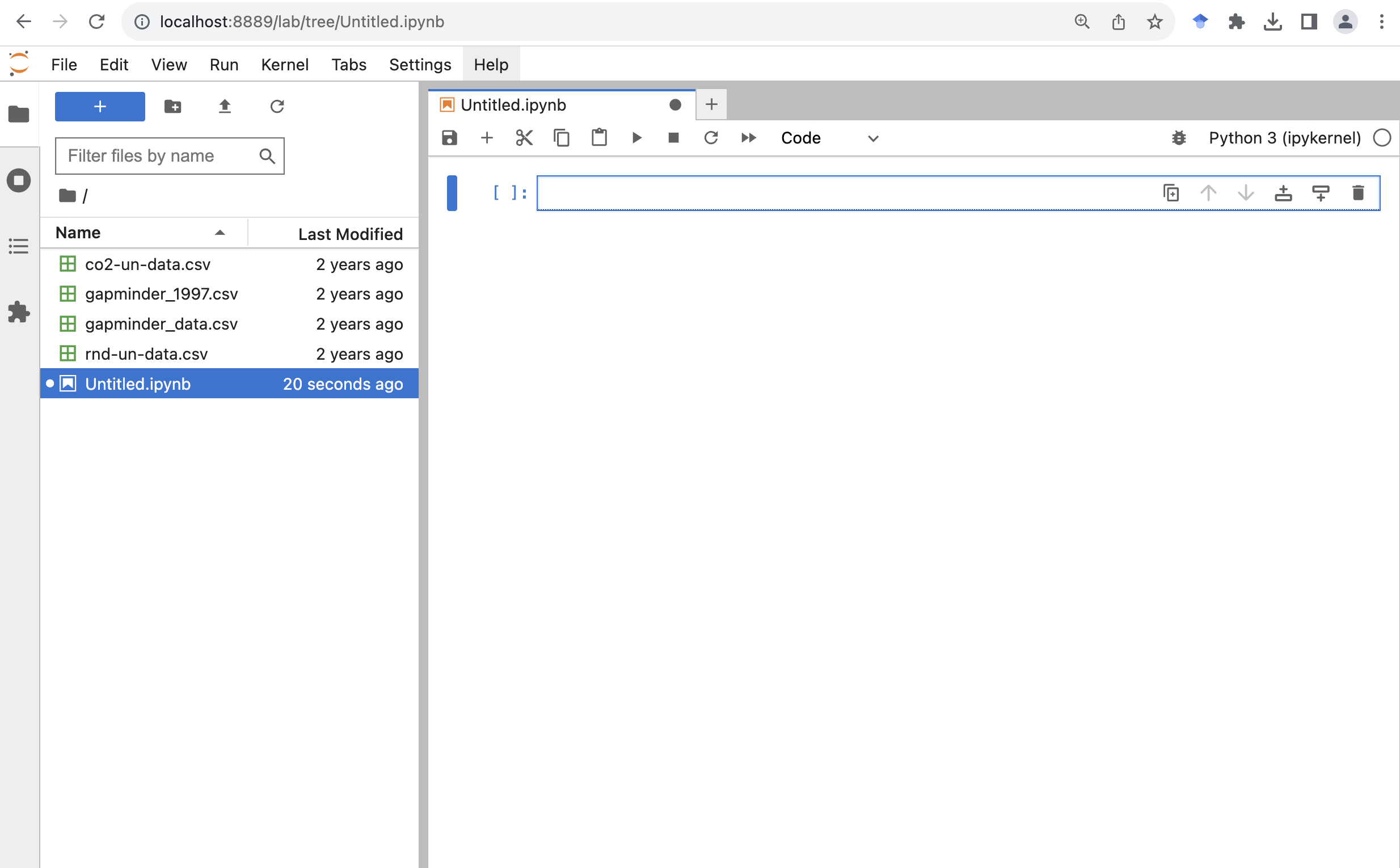

Now we have created a new Jupyter notebook called Untitled.ipynb.

The file name extension ipynb indicates it’s a notebook file.

In case you are interested, it stands for “IPython Notebook”, which is the former name for Jupyter Notebook.

Let’s give it a more meaningful file name called gdp_population.ipynb

To rename a file we can right click it from the file browser, and then click “Rename”.

A notebook is composed of “cells”. You can add more cells by clicking the plus “+” button from the toolbar at the top of the notebook.

Python basics

Arithmetic operators

At a minimum, we can use Python as a calculator.

If we type the following into a cell, and click the run button (the triangle-shaped button that looks like a play button), we will see the output under the cell.

Another, quicker way to run the code in the selected cell is to press Ctrl+Enter (on Windows) or Command+Return (on macOS).

Addition

2 + 3

5

Subtraction

2 - 3

-1

Multiplication

2 * 3

6

Division

2 / 3

0.6666666666666666

Exponentiation

One thing you need to be a little careful about is exponentiation.

If you have used Microsoft Excel, MATLAB, R, or some other programming languages, you may be used to the caret ^ symbol as the operator for exponentiation.

Let’s see if that works in Python.

2 ^ 3

1

Hmm. That’s not what we expected. It turns out in Python (and a few other languages), the caret symbol is used for another operation called bitwise exclusive OR.

In Python we use double asterisks ** for exponentiation.

2 ** 3

8
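To see why 2 ^ 3 gives 1, it can help to look at the binary digits that the bitwise operator compares. The short sketch below is only an illustration; bin() is a Python built-in that shows a number in binary, and the variable names are chosen for this example.

```python
# The caret compares the binary digits of the two numbers:
# 2 is 0b10 and 3 is 0b11; exclusive OR keeps only the digits that differ.
print(bin(2), bin(3))   # 0b10 0b11
xor_result = 2 ^ 3
print(xor_result)       # 1

# Double asterisks raise the left number to the power of the right number.
power_result = 2 ** 3
print(power_result)     # 8
```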

Order of operations

We can also use parentheses to specify what operations should be resolved first. For example, to convert 60 degrees Fahrenheit to Celsius, we can do:

5 / 9 * (60 - 32)

15.555555555555555
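As a small sketch of why the parentheses matter, the example below runs the same conversion with and without them. The variable names are made up for this illustration.

```python
fahrenheit = 60

# With parentheses: subtract 32 first, then scale by 5/9.
with_parentheses = 5 / 9 * (fahrenheit - 32)

# Without parentheses: multiplication and division are resolved first,
# so 32 is subtracted at the very end, which is not the conversion we want.
without_parentheses = 5 / 9 * fahrenheit - 32

print(with_parentheses)
print(without_parentheses)
```

The two results differ by a lot (roughly 15.6 versus 1.3), so it is worth adding parentheses whenever the intended order is not obvious.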

Assignment operator

In Python we can use a = symbol, which is called the assignment operator, to assign values on the right to objects on the left.

Let’s assign a number to a variable called “age”.

When we run the cell, it seems nothing happened.

But that’s only because we didn’t ask Python to display anything in the output after the assignment operation.

We can call the Python built-in function print() to display information in the output.

We can also use another Python built-in function type() to check the type of an object, in this case, the variable called “age”.

And we can see the type is “int”, standing for integers.

age = 26

print(age)

print(type(age))

26

<class 'int'>

Let’s create another variable called “pi”, and assign it with a value of 3.1415. We can see that this time the variable has a type of “float” for floating-point number, or a number with a decimal point.

pi = 3.1415

print(pi)

print(type(pi))

3.1415

<class 'float'>

We can also assign string or text values to a variable. Let’s create a variable called “name”, and assign it with a value “Ben”.

name = Ben

print(name)

NameError: name 'Ben' is not defined

We got an error message.

As it turns out, to make it work in Python we need to wrap any string values in quotation marks.

We can use either single quotes ' or double quotes ".

We just need to use the same kind of quotes at the beginning and end of the string.

We can also see that the variable has a type of “str”, standing for strings.

name = "Ben"

print(name)

print(type(name))

Ben

<class 'str'>

Single vs Double Quotes

Python supports using either single quotes ' or double quotes " to specify strings. There are no set rules on which one you should use.

- Some Python style guides suggest using single quotes for shorter strings (the technical term is string literals), as they are a little easier to type and read, and using double quotes for strings that are likely to contain single-quote characters as part of the string itself (such as strings containing natural language, e.g. "I'll be there.").

- Other Python style guides suggest being consistent with your choice of string quote character within a file. Pick ' or " and stick with it.
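The quoting choices described above can be tried out with a short sketch like the following. The example strings and variable names are made up for illustration.

```python
# Double quotes on the outside let us use an apostrophe inside the string.
reply = "I'll be there."

# Single quotes on the outside let us use double quotes inside the string.
quote = 'She said "hello" to everyone.'

# Both quote styles create the same type of object.
print(reply, type(reply))
print(quote, type(quote))

# The quote characters are not part of the stored text.
print('Ben' == "Ben")  # True
```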

Assigning values to objects

Try to assign values to some objects and observe each object after you have assigned a new value. What do you notice?

name = "Ben"

print(name)

name = "Harry Potter"

print(name)

Solution

When we assign a value to an object, the object stores that value so we can access it later. However, if we store a new value in an object we have already created (like when we stored “Harry Potter” in the name object), it replaces the old value.

Guidelines on naming objects

- You want your object names to be explicit and not too long.

- They cannot start with a number (2x is not valid, but x2 is).

- Python is case sensitive, so for example, weight_kg is different from Weight_kg.

- You cannot use spaces in the name.

- Some names cannot be used because they are reserved keywords in Python (e.g., if, else, for; run help("keywords") for a complete list). You may also notice these keywords change to a different color once you type them (a feature called “syntax highlighting”).

- Do not use dots (.) within names. In Python and other programming languages, a dot has a special meaning (it is used to access an object's attributes and methods), so it cannot be part of a name.

- It is recommended to use nouns for object names and verbs for function names.

- Be consistent in the styling of your code, such as where you put spaces, how you name objects, etc. Using a consistent coding style makes your code clearer to read for your future self and your collaborators. The official Python naming conventions can be found here.
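Some of the naming rules above can be checked from Python itself. The sketch below uses the string method isidentifier() and the standard library's keyword module; the example names are taken from the guidelines above.

```python
import keyword

# str.isidentifier() checks whether a string follows Python's naming rules.
print("x2".isidentifier())               # True
print("2x".isidentifier())               # False: starts with a number
print("favorite number".isidentifier())  # False: contains a space

# keyword.iskeyword() tells us whether a name is reserved by Python.
print(keyword.iskeyword("for"))          # True
print(keyword.iskeyword("weight_kg"))    # False

# Names that differ only in capitalization refer to different objects.
weight_kg = 70
Weight_kg = 80
print(weight_kg, Weight_kg)              # 70 80
```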

Bonus Exercise: Bad names for objects

Try to assign values to some new objects. What do you notice? After running all four lines of code below, what value do you think the object Flower holds?

1number = 3

Flower = "marigold"

flower = "rose"

favorite number = 12

Solution

Notice that we get an error when we try to assign values to 1number and favorite number. This is because we cannot start an object name with a numeral and we cannot have spaces in object names. The object Flower still holds “marigold.” This is because Python is case-sensitive, so running flower = "rose" does NOT change the Flower object. This can get confusing, and is why we generally avoid having objects with the same name and different capitalization.

Data structures

Python lists

Rather than storing a single value in an object, we can also store multiple values in a single object called a list. A Python list is indicated with a pair of square brackets [], and different items are separated by commas. For example, we can have a list of numbers, or a list of strings.

squares = [1, 4, 9, 16, 25]

print(squares)

names = ["Sara", "Tom", "Jerry", "Emma"]

print(names)

We can also check the type of the object by calling the type() function.

type(names)

list

An item from a list can be accessed by its position using the square bracket notation. Say we want to get the first name, “Sara”, from the list. We can try

names[1]

'Tom'

That’s not what we expected. Python uses something called 0-based indexing. In other words, it starts counting from 0 rather than 1. If we want to get the first item from the list, we should use an index of 0. Let’s try that.

names[0]

'Sara'

Now see if you can get the last name from the list.

Solutions:

names[3]

A cool thing in Python is that it also supports negative indexing. If we just want the last item in a list, we can pass the index of -1.

names[-1]
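The sketch below pulls the indexing rules together. It reuses the names list from above and adds the built-in len() function, which is not introduced in the text, to show how positive and negative indexes relate.

```python
names = ["Sara", "Tom", "Jerry", "Emma"]

# len() returns the number of items in the list.
n_names = len(names)
print(n_names)               # 4

# With 0-based indexing, the last valid positive index is len(names) - 1.
print(names[n_names - 1])    # Emma

# Negative indexes count backwards from the end of the list.
print(names[-1])             # Emma
print(names[-2])             # Jerry

# Assigning to an index replaces the item at that position.
names[0] = "Sarah"
print(names)                 # ['Sarah', 'Tom', 'Jerry', 'Emma']
```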

Python dictionaries

Python lists allow us to organize items by their position. Sometimes we want to organize items by their “keys”. This is when a Python dictionary comes in handy.

A Python dictionary is indicated with a pair of curly brackets {} and composed of entries of key-value pairs. The key and value are connected via a colon :, and different entries are separated by a comma ,. For example, let’s create a dictionary of capitals. We can separate the entries in multiple lines to make it a little easier to read, especially when we have many entries. In Python we can break lines inside brackets (e.g., (), [], {}) without breaking the code. This is a common technique people use to avoid long lines and make their code a little more readable.

capitals = {"France": "Paris",

"USA": "Washington DC",

"Germany": "Berlin",

"Canada": "Ottawa"}

We can check the type of the object by calling the type() function.

type(capitals)

dict

An entry from a dictionary can be accessed by its key using the square bracket notation. Say we want to get the capital for USA. We can do

capitals["USA"]

'Washington DC'

Now see if you can get the capital from another country.

Solutions:

capitals["Canada"]

'Ottawa'
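As a hedged sketch of a few other things a dictionary can do, the example below adds an entry and checks whether a key exists. The "Japan" and "Brazil" entries are made up for illustration; the in operator and the get() method are standard Python but are not covered in the text above.

```python
capitals = {"France": "Paris", "USA": "Washington DC",
            "Germany": "Berlin", "Canada": "Ottawa"}

# Assigning to a new key adds an entry to the dictionary.
capitals["Japan"] = "Tokyo"
print(len(capitals))              # 5

# The "in" operator checks whether a key exists.
print("Japan" in capitals)        # True
print("Brazil" in capitals)       # False

# Asking for a missing key with square brackets raises a KeyError;
# the get() method returns None (or a default we choose) instead.
print(capitals.get("Brazil"))             # None
print(capitals.get("Brazil", "unknown"))  # unknown
```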

Calling functions

So far we have used two Python built-in functions, print() to print some values on the screen, and type() to show the type of an object.

The way we called these functions is to first type the name of the function, followed by a pair of parentheses.

Many functions require additional pieces of information to do their job. We call these additional values “arguments” or “parameters”.

We pass the arguments to a function by placing values in between the parentheses.

A function takes in these arguments and does a bunch of “magic” behind the scenes to output something we’re interested in.

Do all functions need arguments? Let’s test some other functions.

It is common that we may want to use a function from a module.

In this case we will need to first import the module to our Python session.

We do that by using the import keyword followed by the module’s name.

To call a function from a module, we type the name of the imported module, followed by a dot ., followed by the name of the function that we wish to call.

Below we import the operating system module and call the function getcwd() to get the current working directory.

import os

os.getcwd()

'/Users/fredfeng/Desktop/teaching/workshops/um-carpentries/intro-curriculum-python/_episodes_ipynb'

Sometimes the function we need sits one level deeper inside a module, and we can reach it using the same dot notation.

In the example below, we call the today() function, which belongs to date (technically a class rather than a submodule) inside the datetime module that we imported.

import datetime

datetime.date.today()

datetime.date(2023, 11, 4)
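The import-then-dot pattern works the same way for other modules. The sketch below adds the standard library's math module, which is not used in the text above, alongside the two modules already shown.

```python
import math
import os
import datetime

# math.sqrt() takes one argument: the number to take the square root of.
print(math.sqrt(16))          # 4.0

# os.getcwd() takes no arguments and returns the working directory as a string.
current_dir = os.getcwd()
print(type(current_dir))      # <class 'str'>

# datetime.date.today() returns a date object with year, month, and day parts.
today = datetime.date.today()
print(today.year, today.month, today.day)
```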

While some functions, like those above, don’t need any arguments, in other functions we may want to use multiple arguments.

When we’re using multiple arguments, we separate the arguments with commas.

For example, we can use the print() function to print two strings:

print("My name is", name)

My name is Harry Potter

Pro-tip

Each function has a help page that documents what the function does, what arguments it expects, and what it will return. You can bring up the help page a few different ways. You can type ? followed by the function name in a cell and run it. You can also place the cursor next to a function and press Shift+Tab to see its help doc.

Learning more about functions

Look up the function round(). What does it do? What will you get as output for the following lines of code?

round(3.1415)

round(3.1415, 3)

Solution

The round() function rounds a number to a given precision. By default, it rounds the number to an integer (in our example above, to 3). If you give it a second number, it rounds to that number of digits (in our example above, to 3.142).

Notice how in this example, we didn’t include any argument names. But you can use argument names if you want:

round(number=3.1415, ndigits=3)

Position of the arguments in functions

Which of the following lines of code will give you an output of 3.14? For the one(s) that don’t give you 3.14, what do they give you?

round(number=3.1415)

round(number=3.1415, ndigits=2)

round(ndigits=2, number=3.1415)

round(2, 3.1415)

Solution

The 2nd and 3rd lines will give you the right answer because the arguments are named, and when you use names the order doesn’t matter. The 1st line will give you 3 because, when ndigits is left out, round() rounds to the nearest integer. The 4th line will give you a TypeError because, since you didn’t name the arguments, they are matched by position: number=2 and ndigits=3.1415, and ndigits must be an integer.

Sometimes it is helpful - or even necessary - to include the argument name, but often we can skip the argument name, if the argument values are passed in a certain order. If all this function stuff sounds confusing, don’t worry! We’ll see a bunch of examples as we go that will make things clearer.
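Here is a minimal sketch of both cases. It uses the built-in sorted() function, which is not introduced in the text, as one example of a function whose reverse argument can only be passed by name; the try/except lines are only there so the example keeps running after the error.

```python
# Positional: values are matched to arguments by their order.
print(round(3.1415, 2))                    # 3.14

# Named: values are matched by name, so the order does not matter.
print(round(ndigits=2, number=3.1415))     # 3.14

# Some arguments can only be passed by name. The built-in sorted()
# accepts "reverse" only as a named argument.
scores = [3, 1, 2]
print(sorted(scores, reverse=True))        # [3, 2, 1]

# Passing it by position fails with a TypeError.
try:
    sorted(scores, True)
except TypeError as error:
    print("TypeError:", error)
```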

Comments

Sometimes we may want to write some notes in our code to help us remember what our code is doing, but we don’t want Python to think these notes are part of the code we want it to evaluate. That’s where comments come in! Anything after a # sign in your code will be ignored by Python. For example, let’s say we wanted to make a note of what each of the functions we just used does:

datetime.date.today() # returns today's date

os.getcwd() # returns our current working directory

At other times we may want to temporarily disable some code without deleting it. We can comment out lines of code by placing a # sign at the beginning of each line.

A handy keyboard shortcut for that is to move the cursor to the line you wish to comment out, then press Ctrl+/ (on Windows) or Command+/ (on macOS) to toggle between commented and uncommented. If you wish to comment out multiple lines, first select all the lines, then use the same keyboard shortcut to comment or uncomment.

Loading and reviewing data

Data objects

Above, we introduced Python lists and dictionaries. There are other ways to store data in Python. Most of the objects we will work with from here on have a table-like structure with rows and columns. We will refer to these objects generally as “data objects”. If you’ve used pandas before, you may be used to calling them “DataFrames”.

Understanding commands

The first thing we usually do when starting a new notebook is to import the libraries that we will need later into the Python session. In general, we need to install a library before we can import it. If you followed the setup instructions and installed Anaconda, some common data science libraries are already installed.

Here we can go ahead and import them using the import keyword followed by the name of the library.

It’s common to give a library an alias or nickname, so we can type fewer characters when calling the library later.

The alias is created by using the keyword as.

By convention, numpy’s alias is np, and pandas’s alias is pd.

Technically you can use whatever alias you want, but please don’t :)

import numpy as np

import pandas as pd

pd.read_csv()

TypeError: read_csv() missing 1 required positional argument: 'filepath_or_buffer'

We get an error message. Don’t panic! Error messages pop up all the time, and can be super helpful in debugging code.

In this case, the message tells us the function that we called is “missing 1 required positional argument: ‘filepath_or_buffer’”

If we think about it, we haven’t told the function which CSV file to read. Let’s tell the function where to find the CSV file by passing a file path to the function as a string.

gapminder_1997 = pd.read_csv("gapminder_1997.csv")

gapminder_1997

| | country | pop | continent | lifeExp | gdpPercap |
|---|---|---|---|---|---|
| 0 | Afghanistan | 22227415 | Asia | 41.763 | 635.341351 |
| 1 | Albania | 3428038 | Europe | 72.950 | 3193.054604 |
| 2 | Algeria | 29072015 | Africa | 69.152 | 4797.295051 |
| 3 | Angola | 9875024 | Africa | 40.963 | 2277.140884 |
| 4 | Argentina | 36203463 | Americas | 73.275 | 10967.281950 |
| .. | ... | ... | ... | ... | ... |
| 137 | Vietnam | 76048996 | Asia | 70.672 | 1385.896769 |
| 138 | West Bank and Gaza | 2826046 | Asia | 71.096 | 7110.667619 |
| 139 | Yemen Rep. | 15826497 | Asia | 58.020 | 2117.484526 |
| 140 | Zambia | 9417789 | Africa | 40.238 | 1071.353818 |
| 141 | Zimbabwe | 11404948 | Africa | 46.809 | 792.449960 |

[142 rows x 5 columns]

The read_csv() function took the file path we provided, did who-knows-what behind the scenes, and then outputted a table with the data stored in that CSV file.

All that, with one short line of code!

We can check the type of the variable by calling the Python built-in function type.

type(gapminder_1997)

pandas.core.frame.DataFrame

In pandas terms, gapminder_1997 is a variable that refers to a DataFrame object. In this case, the DataFrame stores a specific table of data.
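To get a feel for what read_csv() does without needing the data file, here is a small self-contained sketch. It reads a three-row CSV (the first three rows of the table above) from a text string using io.StringIO from the standard library; the variable names csv_text and sample are made up for this example. The shape and columns attributes and the head() method are standard parts of a pandas DataFrame.

```python
import io
import pandas as pd

# A tiny CSV holding the first three rows of the table shown above, so the
# example runs without needing the gapminder_1997.csv file.
csv_text = """country,pop,continent,lifeExp,gdpPercap
Afghanistan,22227415,Asia,41.763,635.341351
Albania,3428038,Europe,72.950,3193.054604
Algeria,29072015,Africa,69.152,4797.295051
"""

# read_csv() accepts a file path or a file-like object such as StringIO.
sample = pd.read_csv(io.StringIO(csv_text))

print(type(sample))          # a pandas DataFrame
print(sample.shape)          # (3, 5): 3 rows and 5 columns
print(list(sample.columns))  # the column names from the first line of the CSV
print(sample.head(2))        # the first 2 rows
```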

Reading in an Excel file

Say you have an Excel file and not a CSV - how would you read that in? Hint: Use the Internet to help you figure it out!

Solution

Pandas comes with the read_excel() function, which returns the same kind of object (a DataFrame) as read_csv().

Creating our first plot

We will mostly use Vega-Altair (Altair for short), a popular Python data visualization library, to make our charts.

We first import the Altair module.

All charts start by calling Altair’s Chart() function.

In a Jupyter notebook cell type the following:

import altair as alt

alt.Chart(gapminder_1997)

When we run it, we will see the following error message

SchemaValidationError: '{'data': {'name': 'data-86c7d3f994999d48e1df64fba25df47d'}}' is an invalid value.

'mark' is a required property

What we’ve done is to call the Chart() function to instantiate a Chart object and told it we will be using the data from the gapminder_1997, the DataFrame that we loaded from the CSV file.

So we’ve made a Chart object, now we need to start telling it what we actually want to draw in this chart.

The geometric objects in a chart, such as bars, lines, points, are called marks in Altair.

For those of you who have used ggplot in R, marks are the equivalent of “geoms”.

Now we need to specify the marks in the chart.

We will talk about a few different marks today,

but for our first plot, let’s draw our data using the “circle” mark for each value in the data set.

To do this, we apply the mark_circle() method to the chart.

alt.Chart(gapminder_1997).mark_circle()

The geometric objects of a chart, such as circles, bars, have a bunch of visual properties such as an x and y position, size, color, etc. When creating a data visualization, we map a variable in our dataset to a visual property in our chart.

To create our chart, we need to map variables from our data gapminder_1997 to the visual properties using the chart’s encode() method.

Since we have already told Chart that we are using the data in the gapminder_1997, we can access the columns of gapminder_1997 using the data frame’s column names.

(Remember, Python is case-sensitive, so we have to be careful to match the column names exactly!)

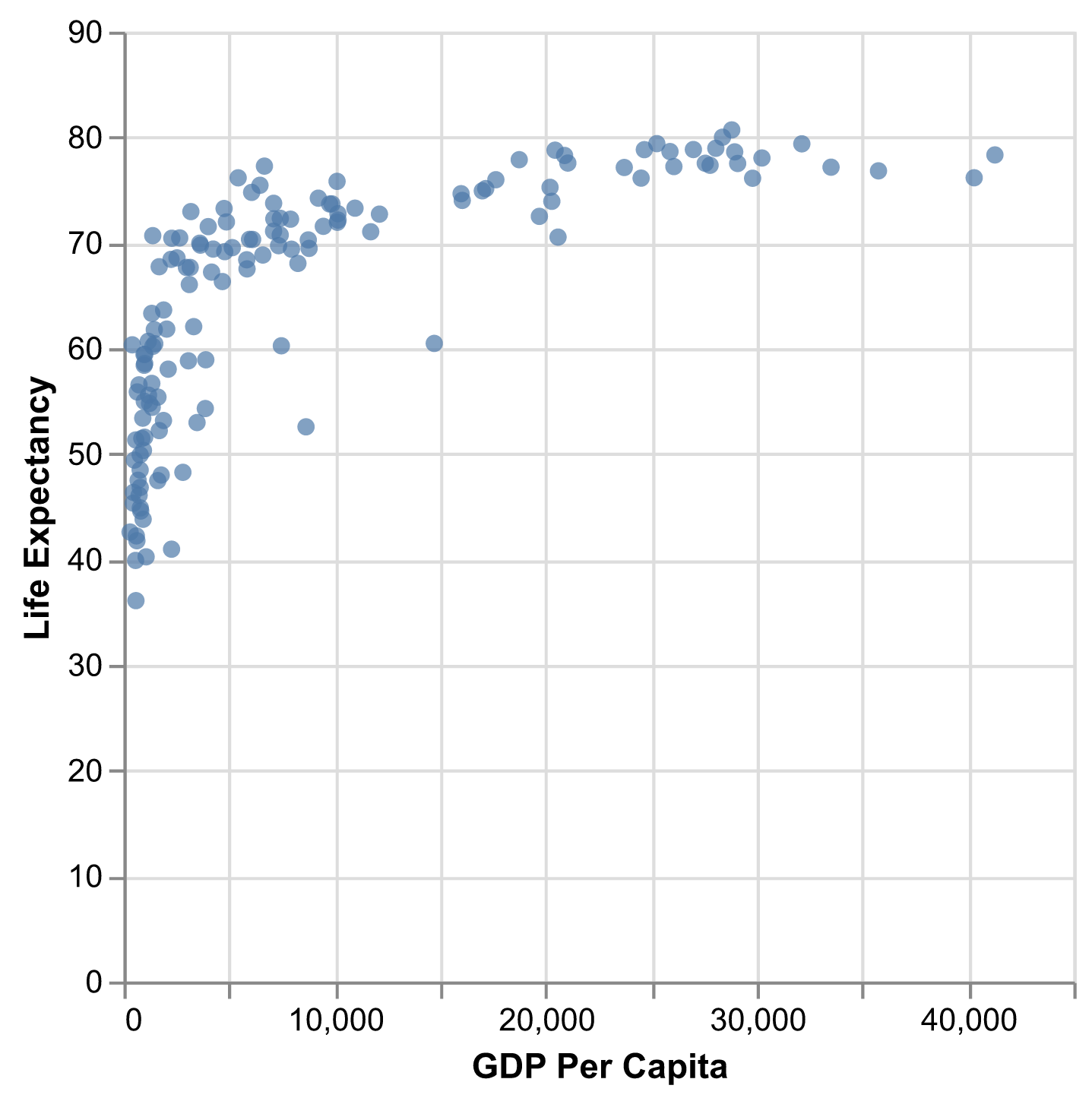

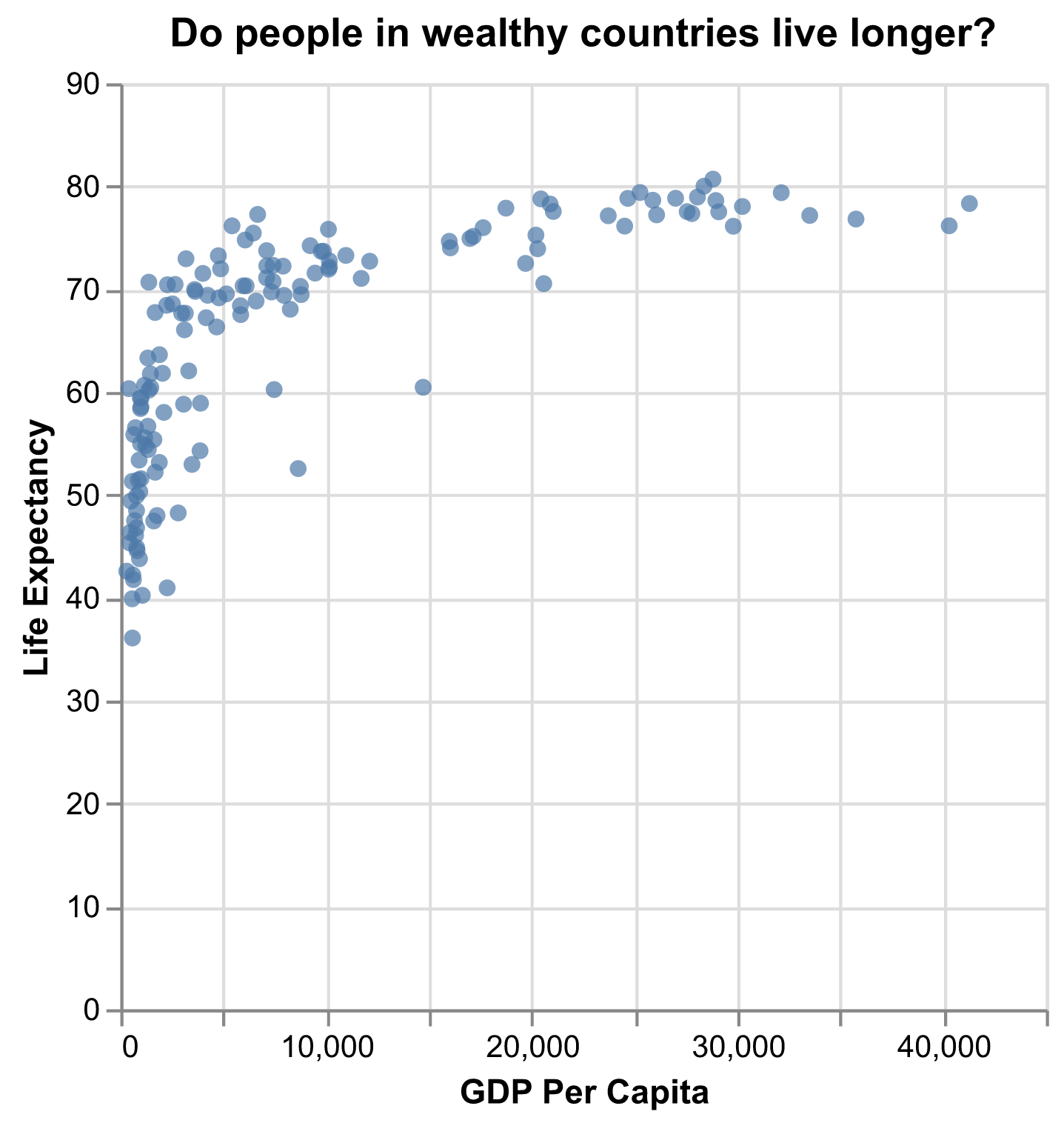

We are interested in whether there is a relationship between GDP and life expectancy, so let’s start by telling our plot object that we want to map the GDP values to the x axis.

alt.Chart(gapminder_1997).mark_circle().encode(

x='gdpPercap',

)

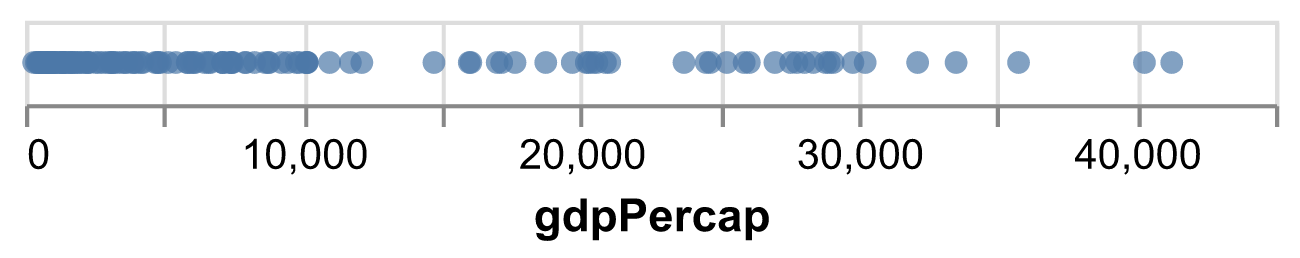

We see what is sometimes called a dot plot, where the GDP values are encoded on the x axis. Now let’s add the life expectancy to the y axis of the plot.

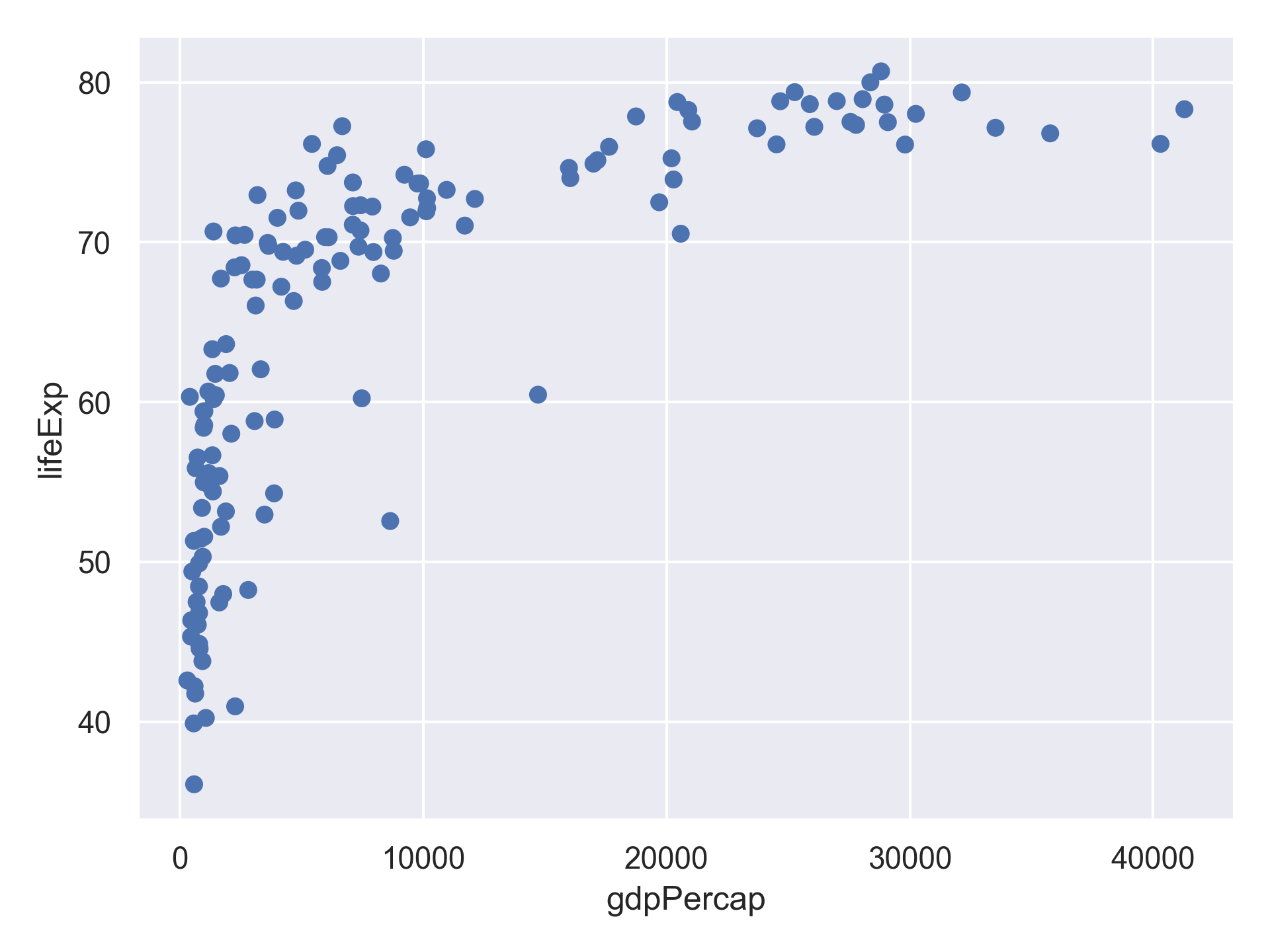

alt.Chart(gapminder_1997).mark_circle().encode(

x='gdpPercap',

y='lifeExp',

)

Excellent. We’ve now told our plot where the x and y values are coming from, and mark_circle() has already told it to draw each value as a circle.

What we just did is to pass the name of the two columns to the x and y inside the encode method.

This offers a quick and concise way to generate a chart if we just want to quickly explore the data and don’t mind using the default settings.

For example, you may notice the labels of the x and y axis are using the column names from the dataframe.

If we want to customize the chart, for example, using a different axis label or axis range, Altair offers a different way to specify the channel encoding options.

We can add a label for an axis by wrapping the column name in alt.X() (or alt.Y()) and setting its title argument.

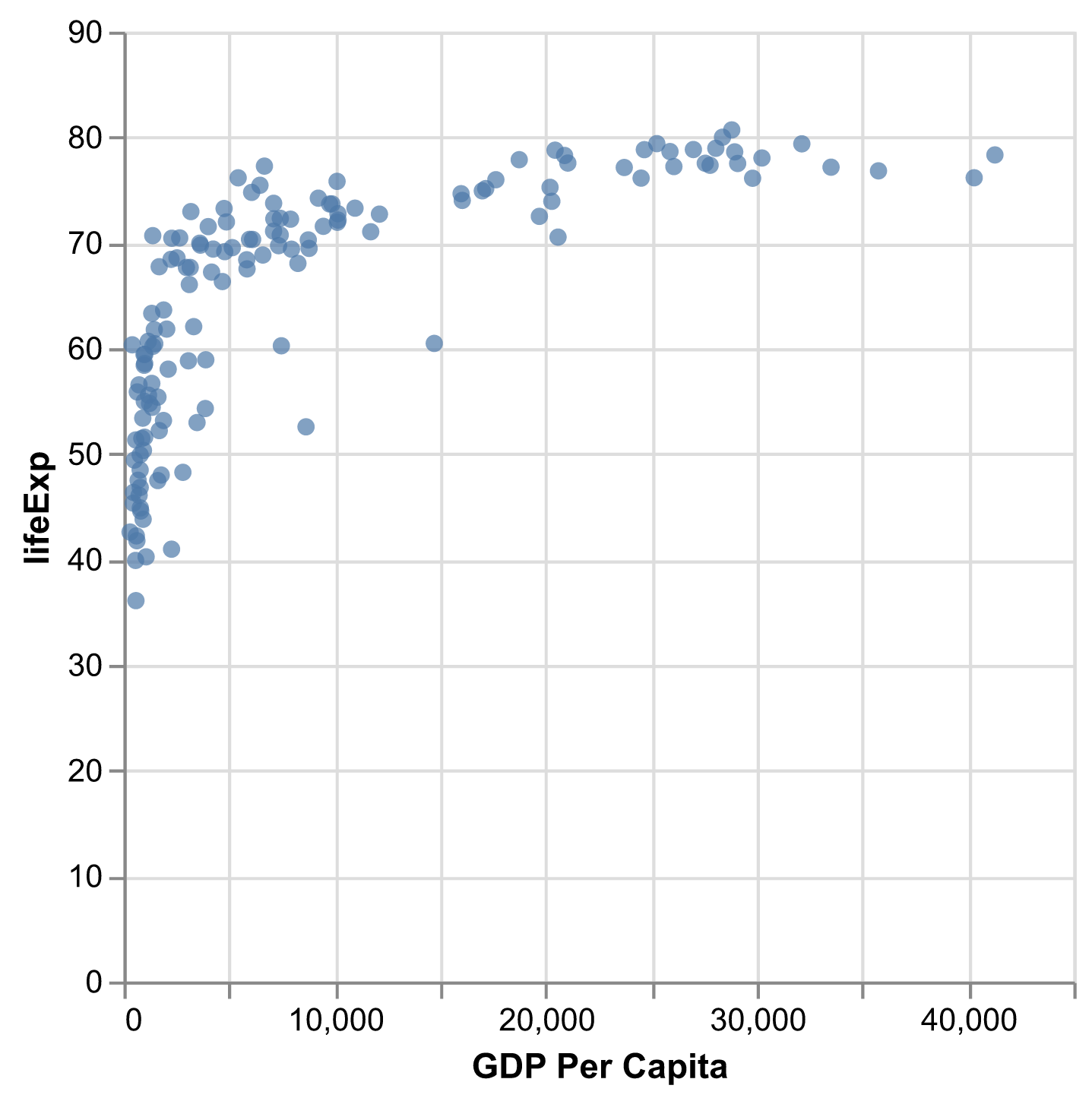

alt.Chart(gapminder_1997).mark_circle().encode(

x=alt.X('gdpPercap', title='GDP Per Capita'),

y='lifeExp',

)

Give the y axis a nice label.

Solution

alt.Chart(gapminder_1997).mark_circle().encode(

x=alt.X('gdpPercap', title='GDP Per Capita'),

y=alt.Y('lifeExp', title='Life Expectancy'),

)

Now it finally looks like a proper plot!

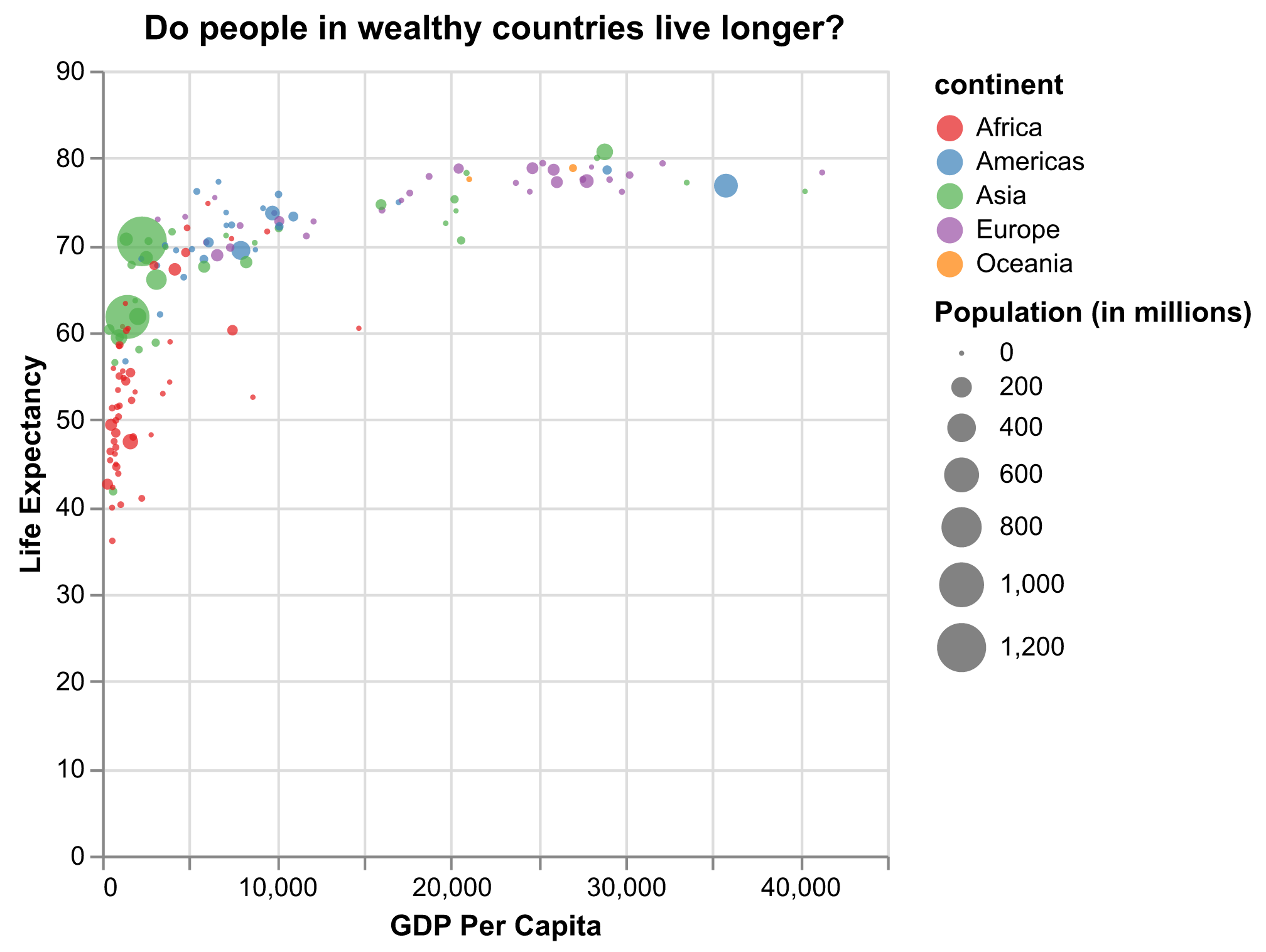

We can now see a trend in the data.

It looks like countries with a larger GDP tend to have a higher life expectancy.

Let’s add a title to our plot to make that clearer.

We can specify that by applying the properties() method to the chart and setting its title argument.

alt.Chart(gapminder_1997).mark_circle().encode(

x=alt.X('gdpPercap', title='GDP Per Capita'),

y=alt.Y('lifeExp', title='Life Expectancy'),

).properties(

title='Do people in wealthy countries live longer?',

)

No one can deny we’ve made a very handsome plot!

But now looking at the data, we might be curious about learning more about the points that are the extremes of the data.

We know that we have two more pieces of data in the gapminder_1997 that we haven’t used yet.

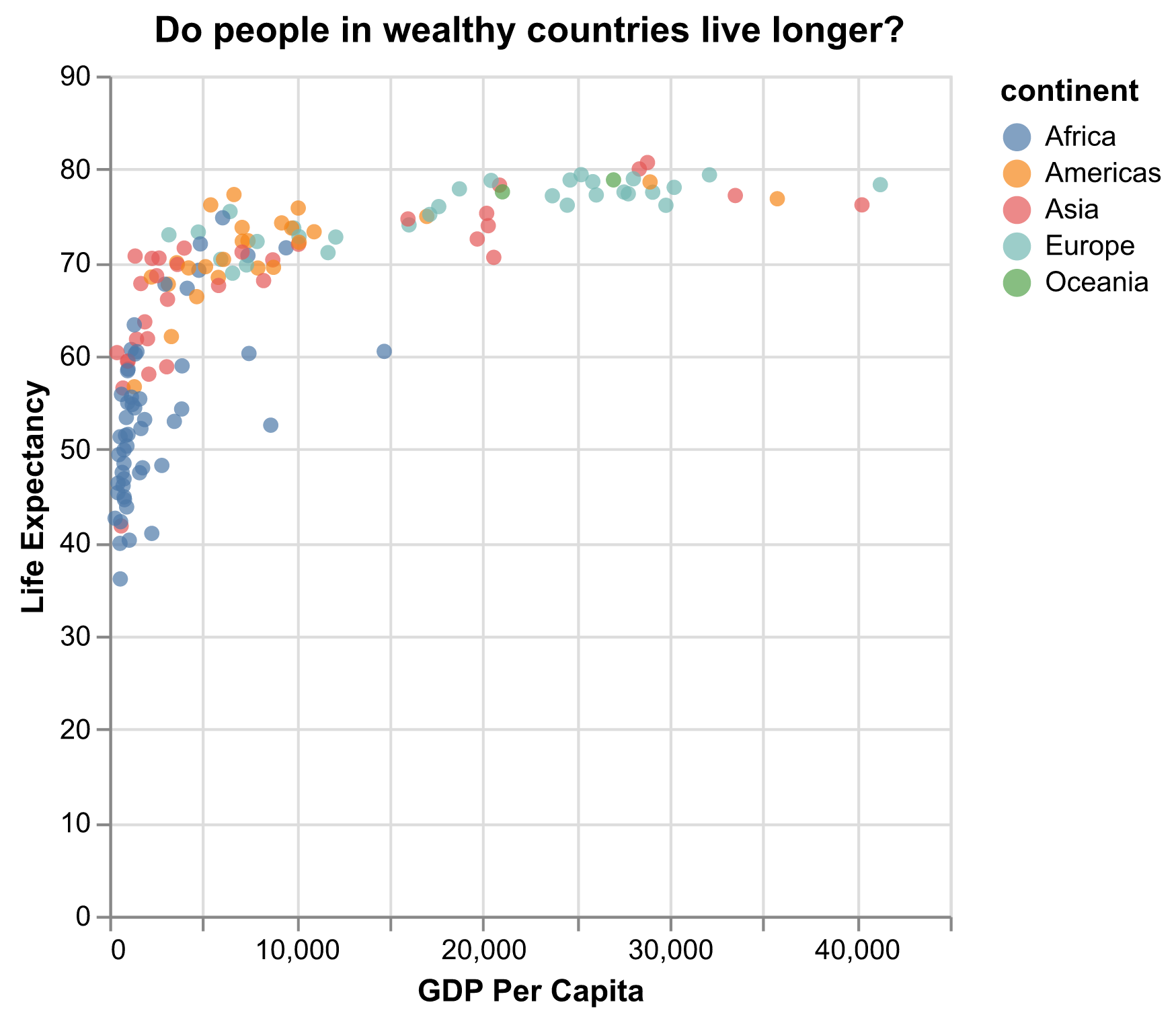

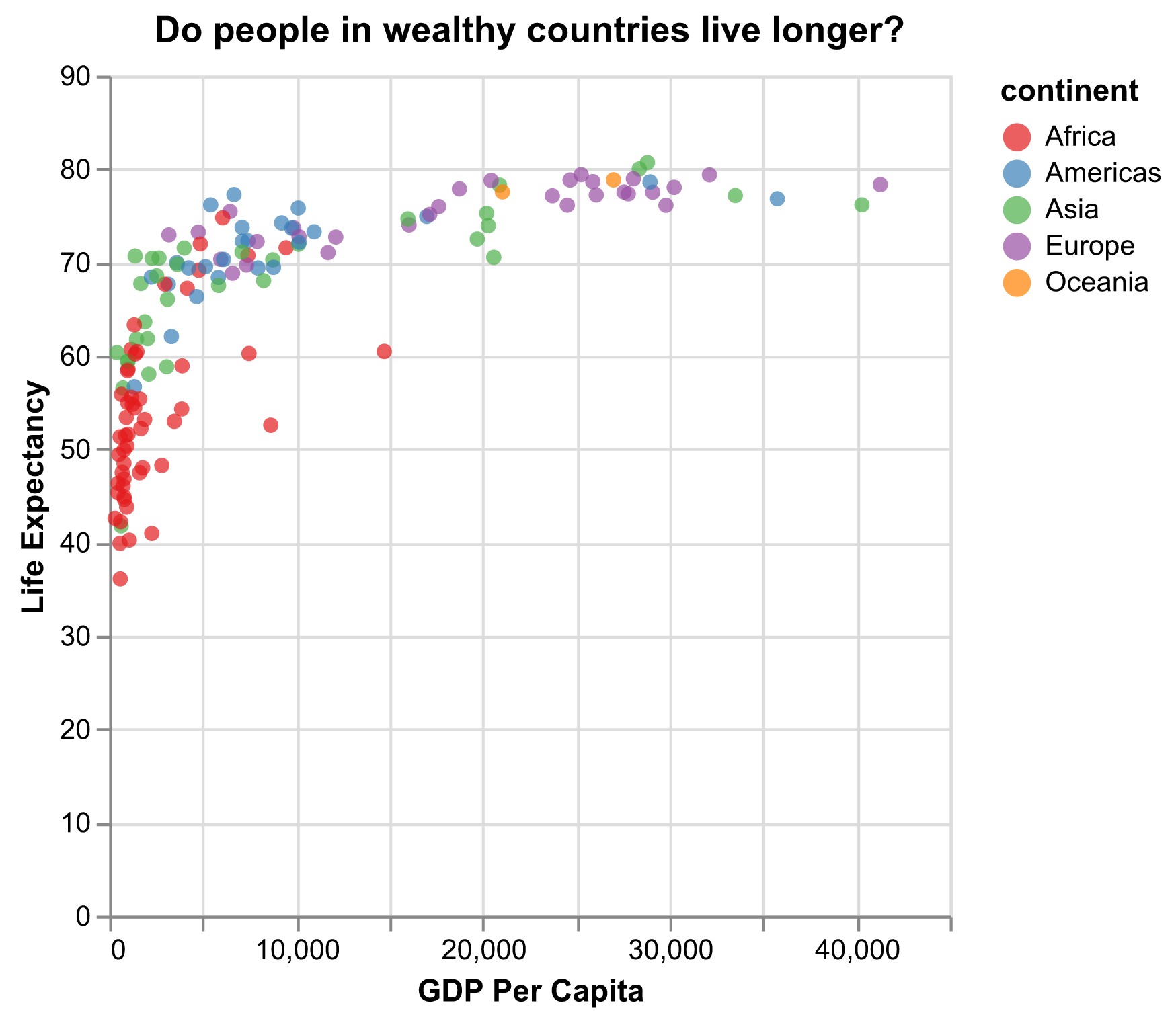

Maybe we are curious if the different continents show different patterns in GDP and life expectancy.

One thing we could do is use a different color for each of the continents.

It is possible to map data values to various graphical properties.

In this case let’s map the continent to the color property.

The quickest way to do this is to set color='continent' inside the encode.

alt.Chart(gapminder_1997).mark_circle().encode(

x=alt.X('gdpPercap', title='GDP Per Capita'),

y=alt.Y('lifeExp', title='Life Expectancy'),

color='continent',

).properties(

title='Do people in wealthy countries live longer?',

)

But here we wish to customize this chart later. So let’s use the syntax that allows us to customize the settings later.

alt.Chart(gapminder_1997).mark_circle().encode(

x=alt.X('gdpPercap', title='GDP Per Capita'),

y=alt.Y('lifeExp', title='Life Expectancy'),

color=alt.Color('continent'),

).properties(

title='Do people in wealthy countries live longer?',

)

Here we can see that in 1997 countries in Africa had much lower life expectancy than countries on many other continents.

Notice that when we add a mapping for color, Altair automatically provides a legend for us.

It took care of assigning different colors to each of our unique values of the continent variable.

The colors that Altair uses are determined by the color scheme.

If needed, we can change the default color scheme.

Let’s change the colors to make them a bit prettier.

The code below allows us to select a color palette. Altair is built on the Vega project and supports all of the Vega color schemes.

We can change the color scheme by applying the scale() method to the color schema wrapper alt.Color.

The scale() method specifies how the data should be mapped to visual properties, and in this case, how the categorical variable “continent” should be mapped to different colors of the point marks.

alt.Chart(gapminder_1997).mark_circle().encode(

x=alt.X('gdpPercap', title='GDP Per Capita'),

y=alt.Y('lifeExp', title='Life Expectancy'),

color=alt.Color('continent').scale(scheme='set1'),

).properties(

title='Do people in wealthy countries live longer?',

)

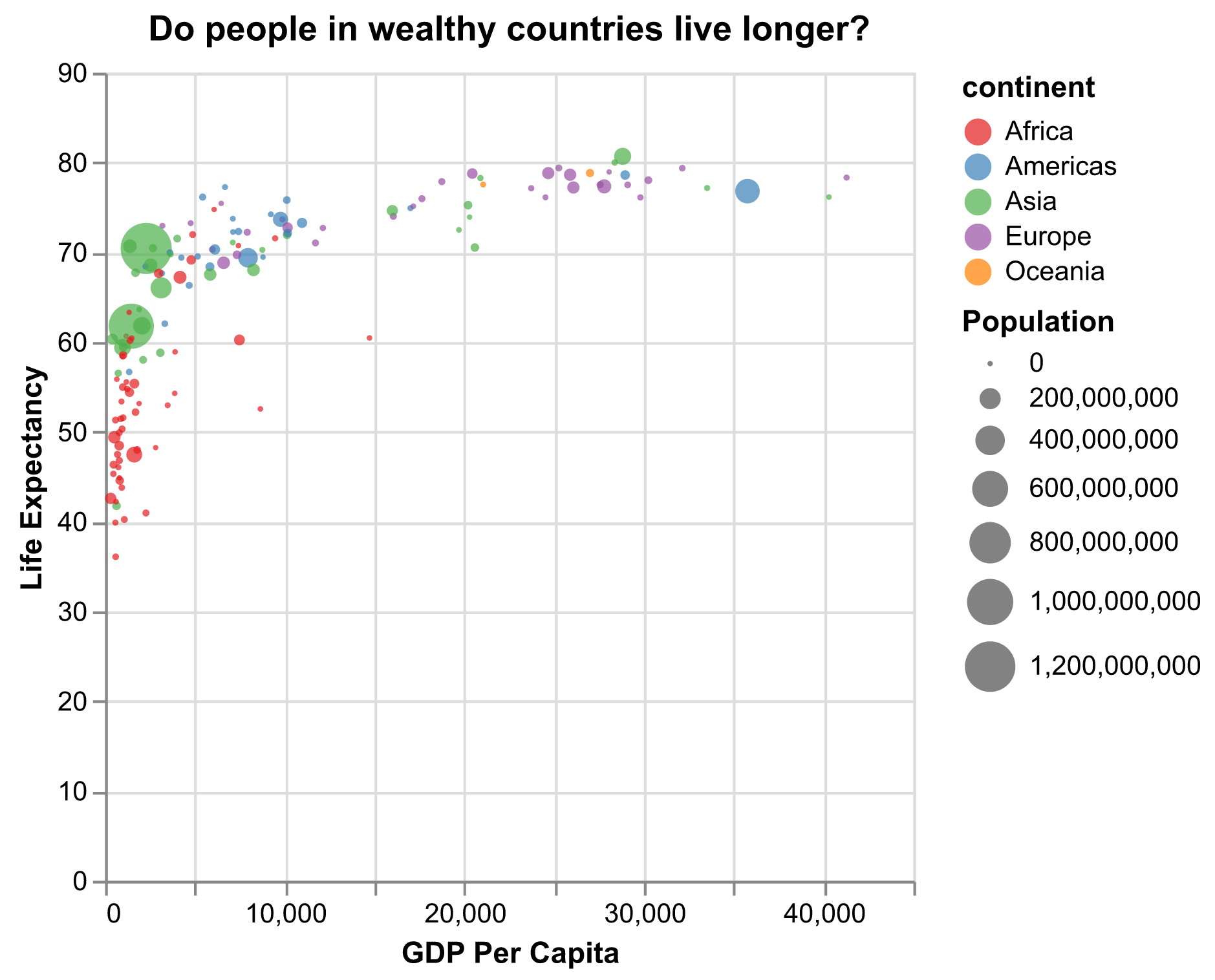

Since we have the data for the population of each country, we might be curious what effect population might have on life expectancy and GDP per capita.

Do you think larger countries will have a longer or shorter life expectancy?

Let’s find out by mapping the population of each country to another visual property: the size of the point marks.

Again, for a quick plot, we can just add size='pop' to the encoding.

But we will use the syntax that supports customization, and add a title to it, so it shows the word “Population” rather than the column name “pop” in the legend.

alt.Chart(gapminder_1997).mark_circle().encode(

x=alt.X('gdpPercap', title='GDP Per Capita'),

y=alt.Y('lifeExp', title='Life Expectancy'),

color=alt.Color('continent').scale(scheme='set1'),

size=alt.Size('pop', title='Population'),

).properties(

title='Do people in wealthy countries live longer?',

)

We got another legend here for size, which is nice, but the values look a bit ugly with so many digits.

Let’s convert the population to millions by dividing it by 1,000,000 inside the transform_calculate() method, and label it “Population (in millions)”.

Note for large numbers such as 1000000, it’s easy to mis-count the number of digits when typing or reading it.

Another way to write it is 1e6 which means 10 raised to the 6th power.

alt.Chart(gapminder_1997).mark_circle().encode(

x=alt.X('gdpPercap', title='GDP Per Capita'),

y=alt.Y('lifeExp', title='Life Expectancy'),

color=alt.Color('continent').scale(scheme='set1'),

size=alt.Size('pop', title='Population (in millions)'),

).transform_calculate(

pop='datum.pop/1000000'

).properties(

title='Do people in wealthy countries live longer?',

)
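As an alternative to rescaling inside the chart, the same conversion can be done in pandas before plotting. The sketch below is only an illustration under stated assumptions: the two-row data frame `toy` and its values are made up, and the column name `pop_million` is a hypothetical choice.

```python
import pandas as pd

# Hypothetical two-row stand-in for gapminder_1997 (values are made up).
toy = pd.DataFrame({"country": ["A", "B"], "pop": [1_000_000_000.0, 5_000_000.0]})

# 1e6 and 1_000_000 are the same number; the underscores are only digit separators.
assert 1e6 == 1_000_000

# assign() returns a new data frame with the extra column; toy itself is unchanged.
toy_millions = toy.assign(pop_million=toy["pop"] / 1e6)
print(toy_millions)
```

A chart could then map size to the new column instead of calling transform_calculate().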

Plotting for data exploration

Many datasets are much more complex than the example we used for the first plot. How can we find meaningful insights in complex data and create visualizations to convey those insights?

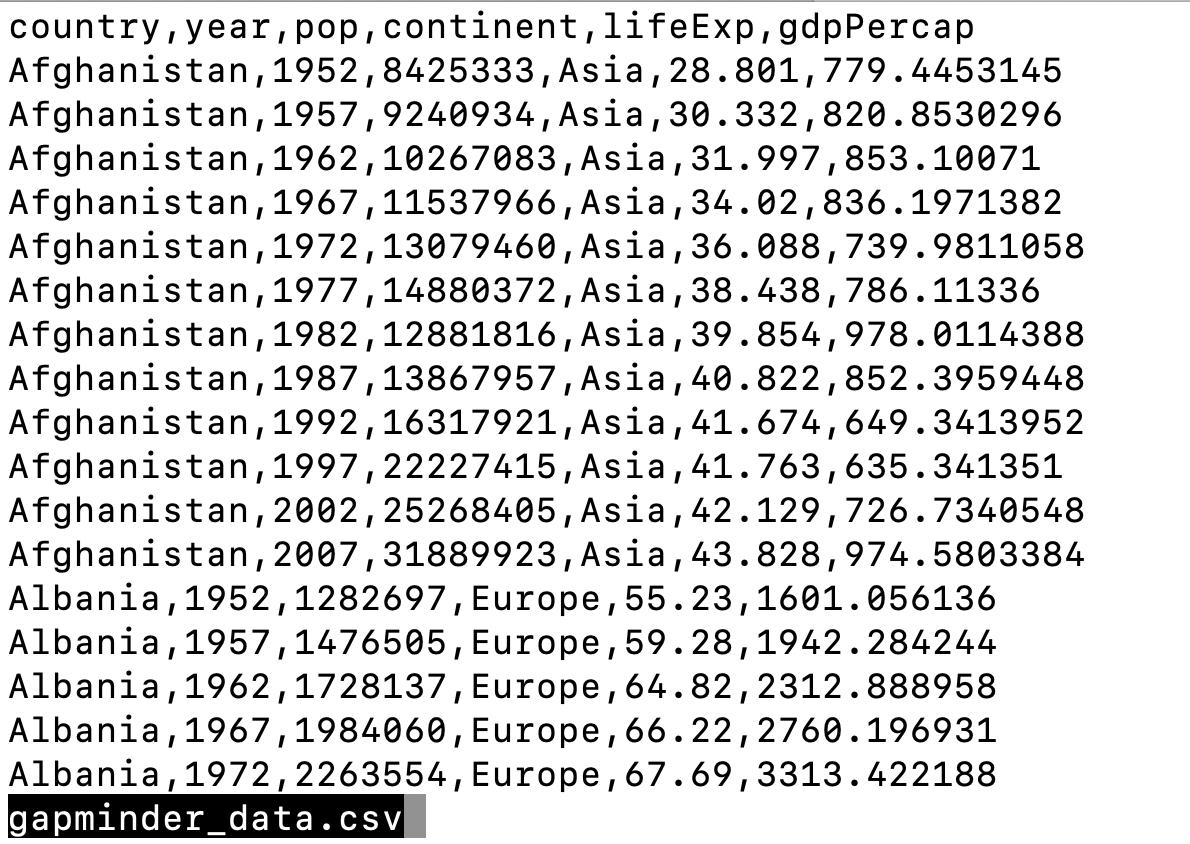

Importing datasets

In the first plot, we looked at a smaller slice of a large dataset. To gain a better understanding of the kinds of patterns we might observe in our own data, we will now use the full dataset, which is stored in a file called “gapminder_data.csv”.

To start, we will read in the data to a pandas DataFrame.

Read in your own data

What argument should be provided in the below code to read in the full dataset?

gapminder = pd.read_csv()

Solution

gapminder = pd.read_csv("gapminder_data.csv")

Let’s take a look at the full dataset.

Pandas offers a way to select the top few rows of a data frame by applying the head() method to the data frame. Try it out!

gapminder.head()

country year pop continent lifeExp gdpPercap

0 Afghanistan 1952 8425333.0 Asia 28.801 779.445314

1 Afghanistan 1957 9240934.0 Asia 30.332 820.853030

2 Afghanistan 1962 10267083.0 Asia 31.997 853.100710

3 Afghanistan 1967 11537966.0 Asia 34.020 836.197138

4 Afghanistan 1972 13079460.0 Asia 36.088 739.981106

Notice that this dataset has an additional column “year” compared to the smaller dataset we started with.

Predicting Altair outputs

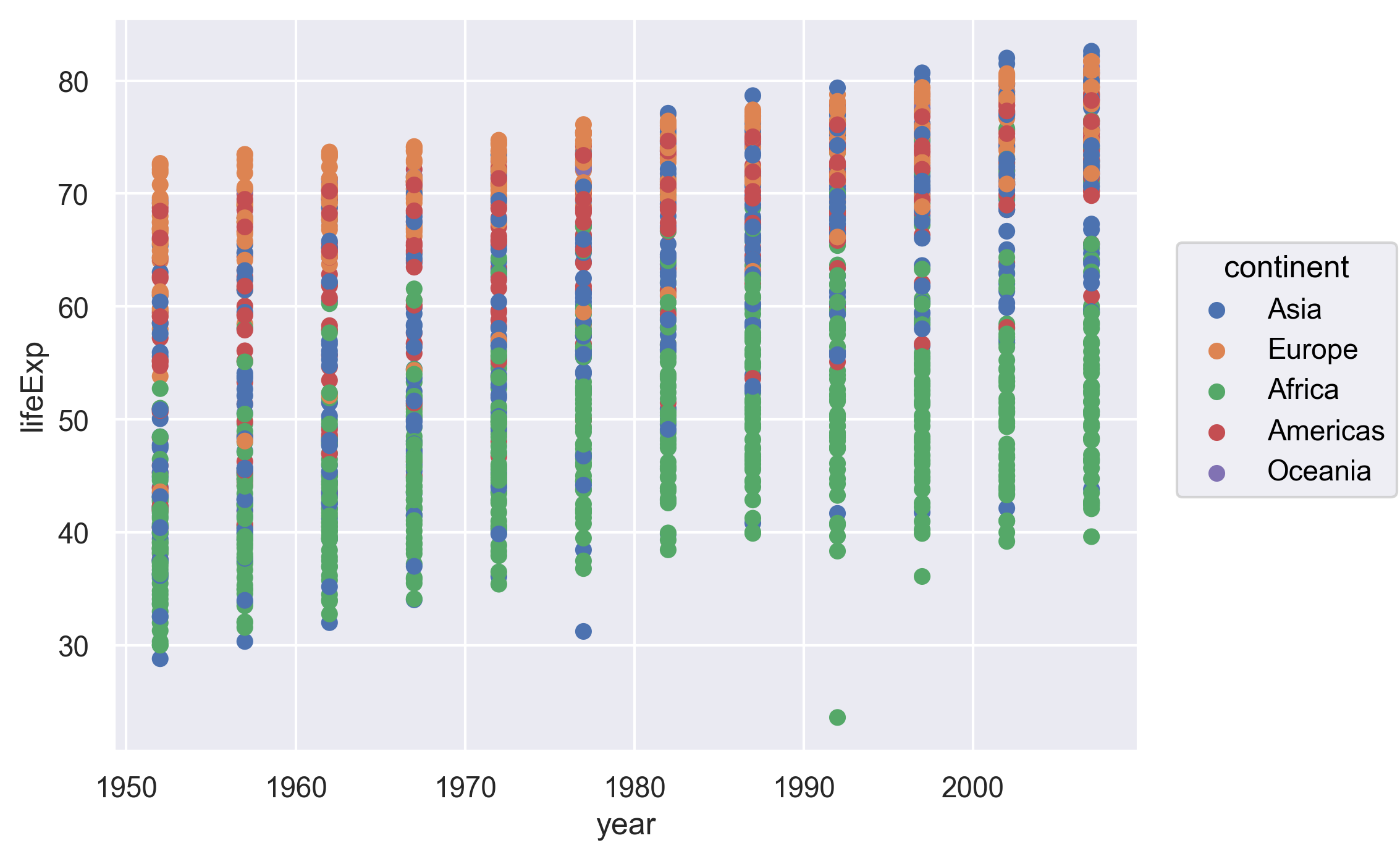

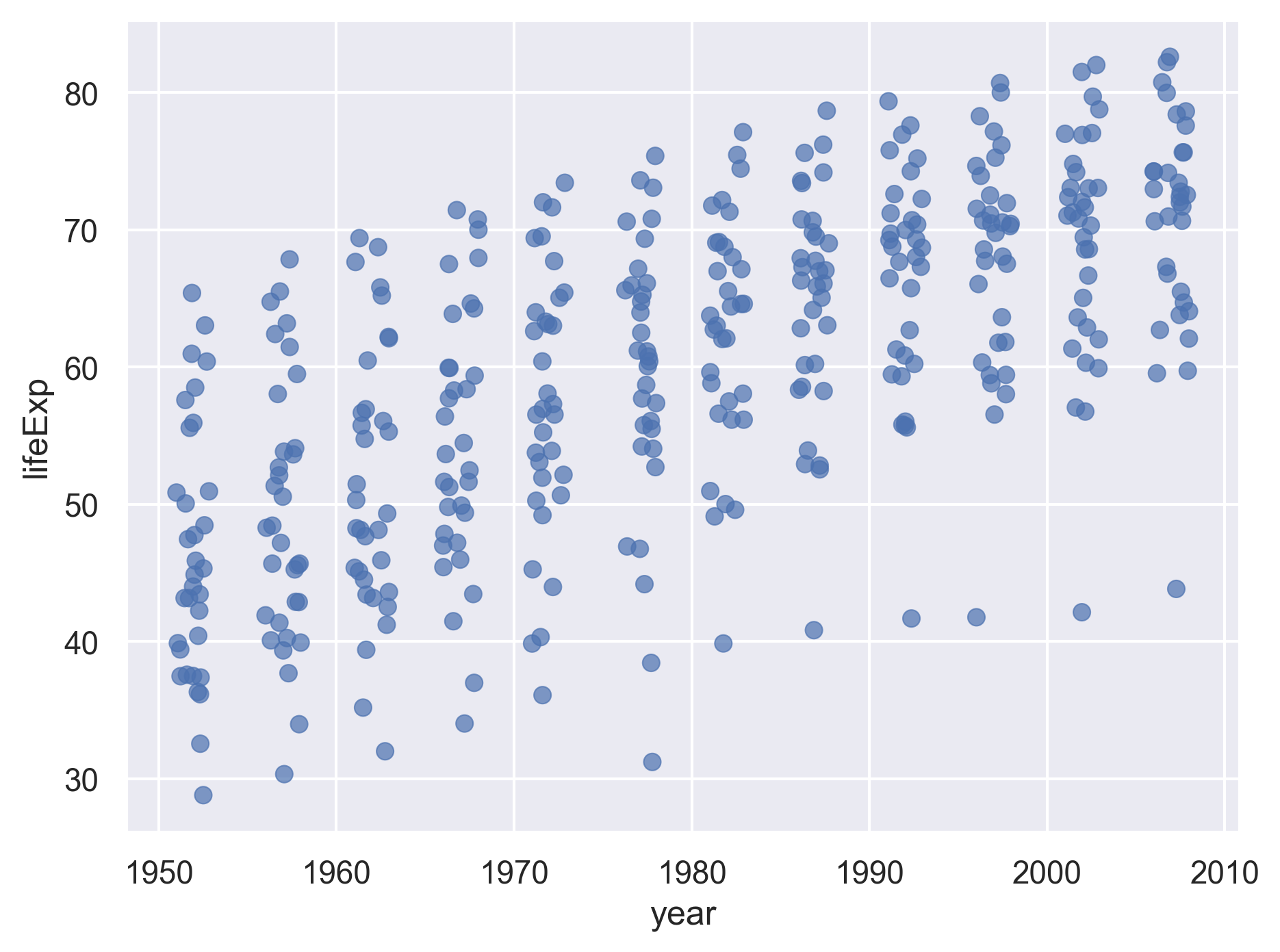

Now that we have the full dataset read into our Python session, let’s plot the data placing our new “year” variable on the x axis and life expectancy on the y axis. We’ve provided the code below. Notice that we’ve left off the labels so there’s not as much code to work with. Before running the code, read through it and see if you can predict what the plot output will look like. Then run the code and check to see if you were right!

alt.Chart(gapminder).mark_circle().encode( x='year:O', y='lifeExp', color='continent', )

Hmm, the plot we created in the last exercise isn’t very clear. What’s going on? Since the dataset is more complex, the plotting options we used for the smaller dataset aren’t as useful for interpreting these data. Luckily, we can add additional attributes to our plots that will make patterns more apparent. For example, we can generate a different type of plot - perhaps a line plot - and assign attributes for columns where we might expect to see patterns.

Let’s review the columns and the types of data stored in our dataset to decide how we should group things together.

We can apply the pandas method info to get the summary information of the data frame.

gapminder.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 1704 entries, 0 to 1703

Data columns (total 6 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 country 1704 non-null object

1 year 1704 non-null int64

2 pop 1704 non-null float64

3 continent 1704 non-null object

4 lifeExp 1704 non-null float64

5 gdpPercap 1704 non-null float64

dtypes: float64(3), int64(1), object(2)

memory usage: 80.0+ KB

So, what do we see? The data frame has 1,704 entries (rows) and 6 columns. The “Dtype” shows the data type of each column.

What kind of data do we see?

- “int64”: Integer (or whole number)

- “float64”: Floating-point number (can hold non-whole values)

- “object”: String or mixed data type
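To see how pandas picks these types, here is a minimal sketch using a made-up data frame (the name `toy` and its values are assumptions for illustration, not part of the gapminder data).

```python
import pandas as pd

# Hypothetical mini data frame; the values are made up for illustration.
toy = pd.DataFrame({
    "country": ["A", "B"],    # text values
    "year": [1952, 1957],     # whole numbers
    "lifeExp": [28.8, 30.3],  # numbers with a decimal part
})

# dtypes lists the data type pandas chose for each column.
# Text columns show as "object" in the output above; newer pandas
# versions may report a dedicated string type instead.
print(toy.dtypes)
```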

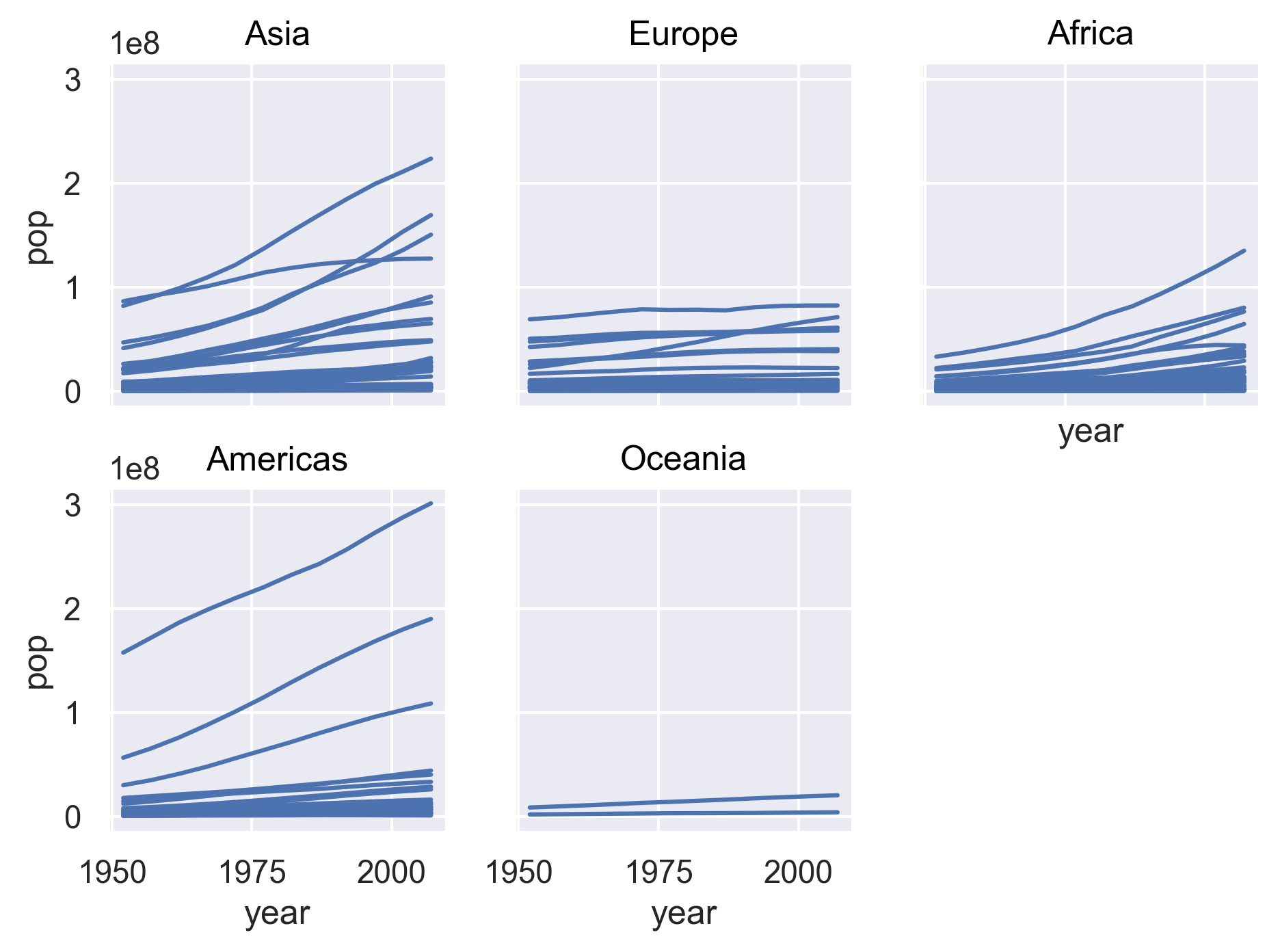

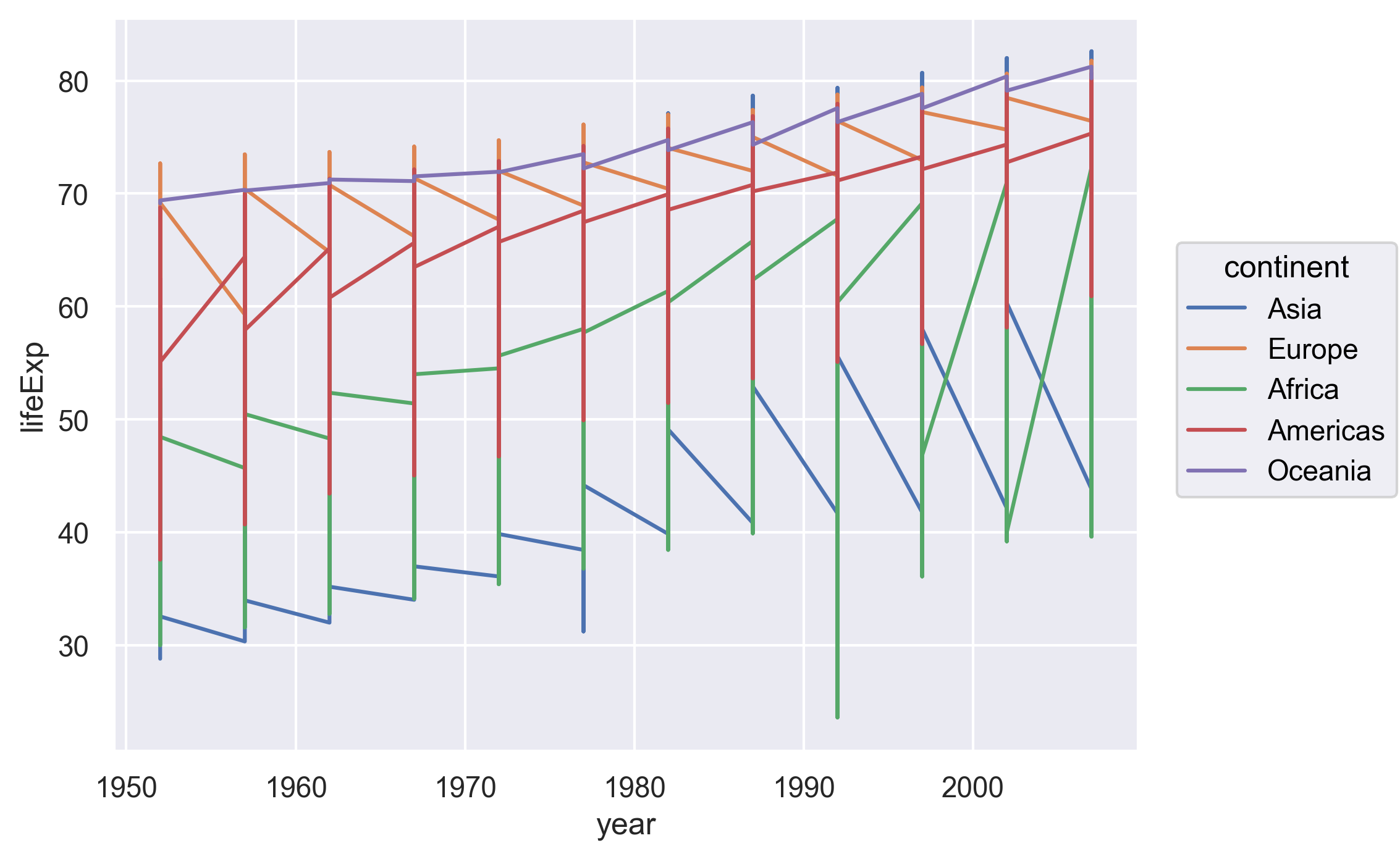

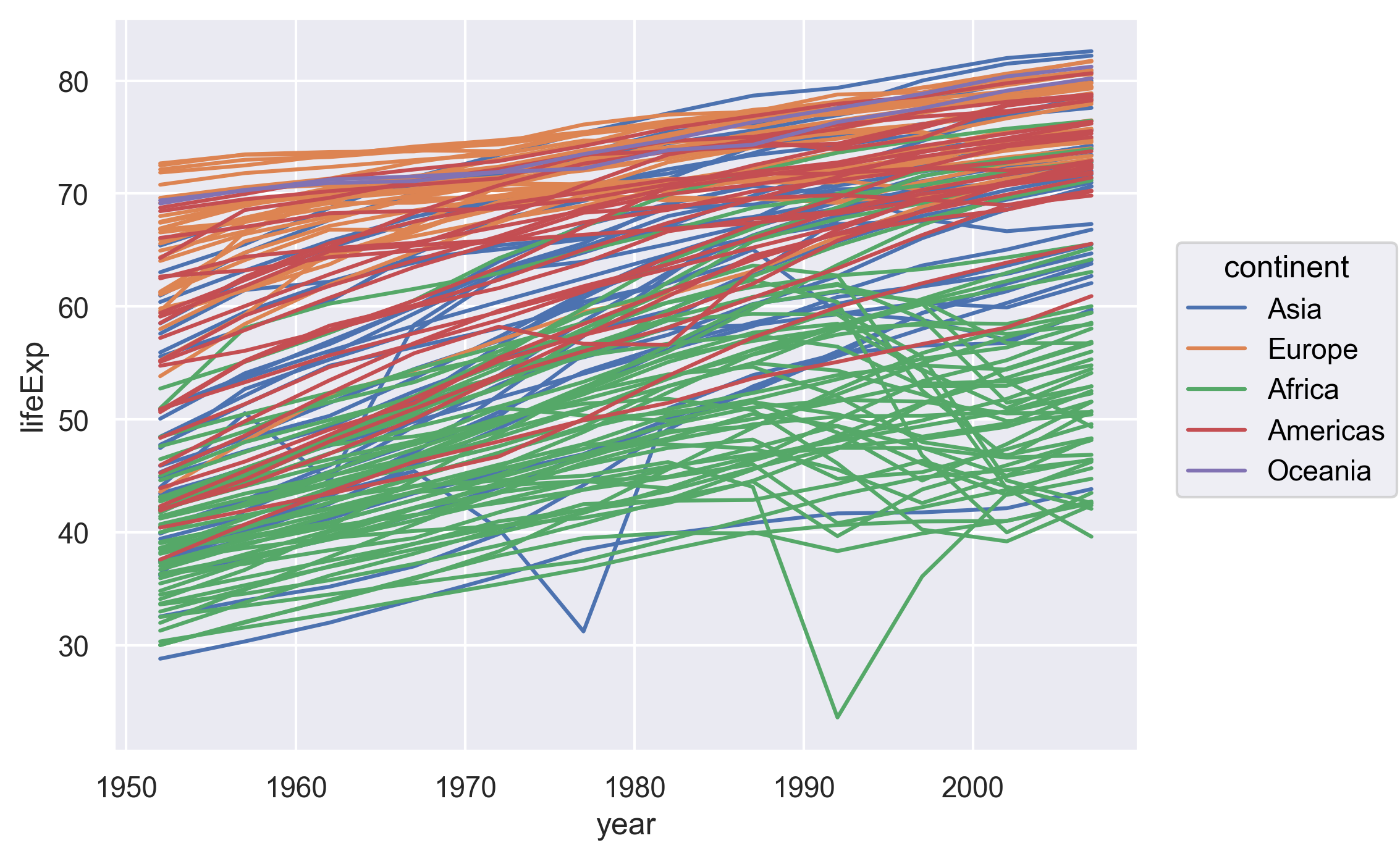

Our plot has a lot of points in columns which makes it hard to see trends over time. A better way to view the data showing changes over time is to use lines. Let’s try changing the mark from dot to line and see what happens.

alt.Chart(gapminder).mark_line().encode(

x='year:O',

y='lifeExp',

color='continent',

)

Hmm. This doesn’t look right.

By setting the color value, we got a line for each continent,

but we really wanted a line for each country.

We need to tell Altair that we want to connect the values for each country value instead.

To do this, we need to specify the detail argument of the encode method.

alt.Chart(gapminder).mark_line().encode(

x='year:O',

y='lifeExp',

color='continent',

detail='country',

)

Sometimes plots like this are called “spaghetti plots” because all the lines look like a bunch of wet noodles.

Bonus Exercise: More line plots

Now create your own line plot comparing population and life expectancy! Looking at your plot, can you guess which two countries have experienced massive change in population from 1952-2007?

Solution

alt.Chart(gapminder).mark_line().encode( x='pop', y='lifeExp', color='continent', detail='country', )

(China and India are the two Asian countries that have experienced massive population growth from 1952-2007.)

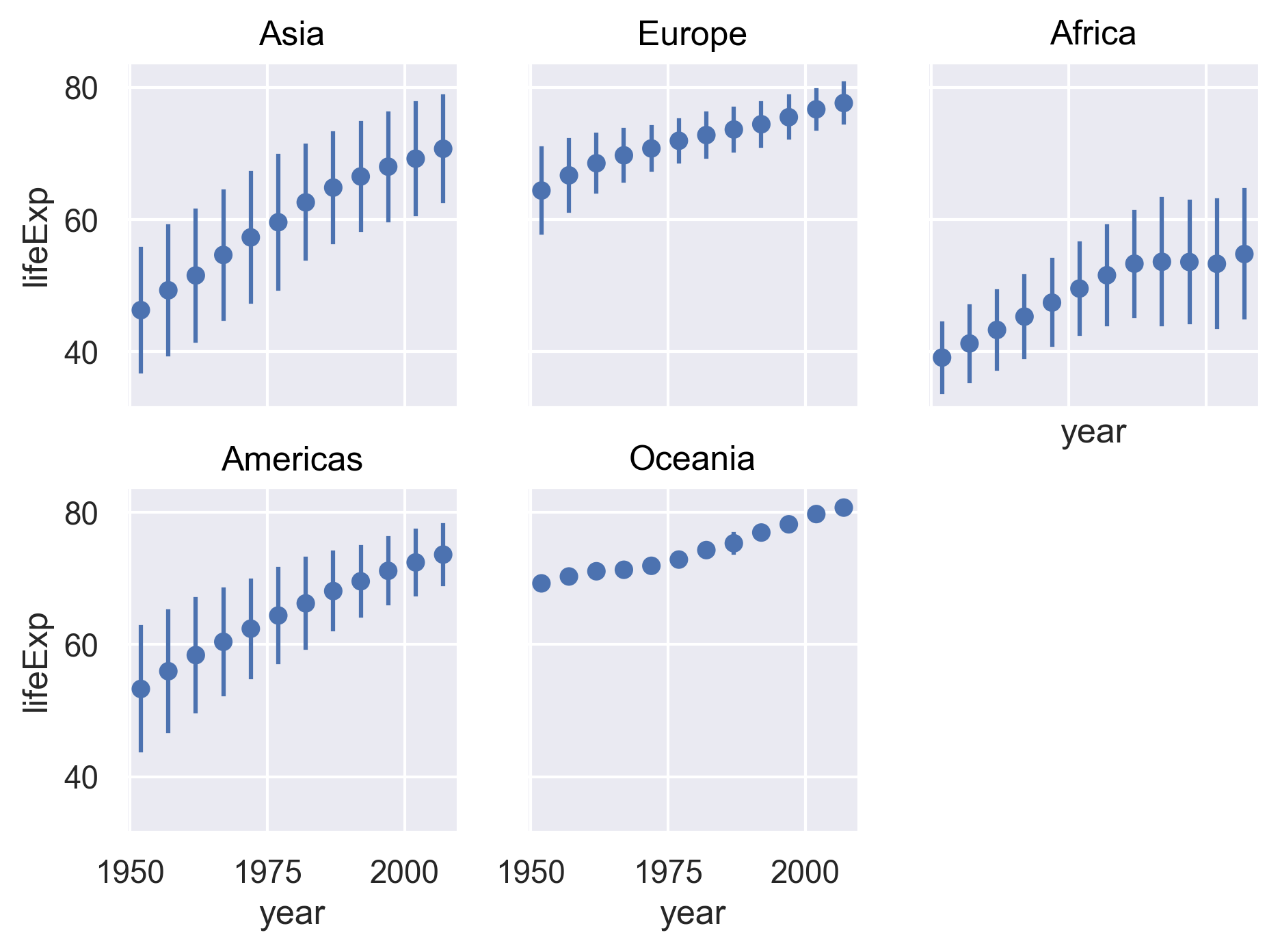

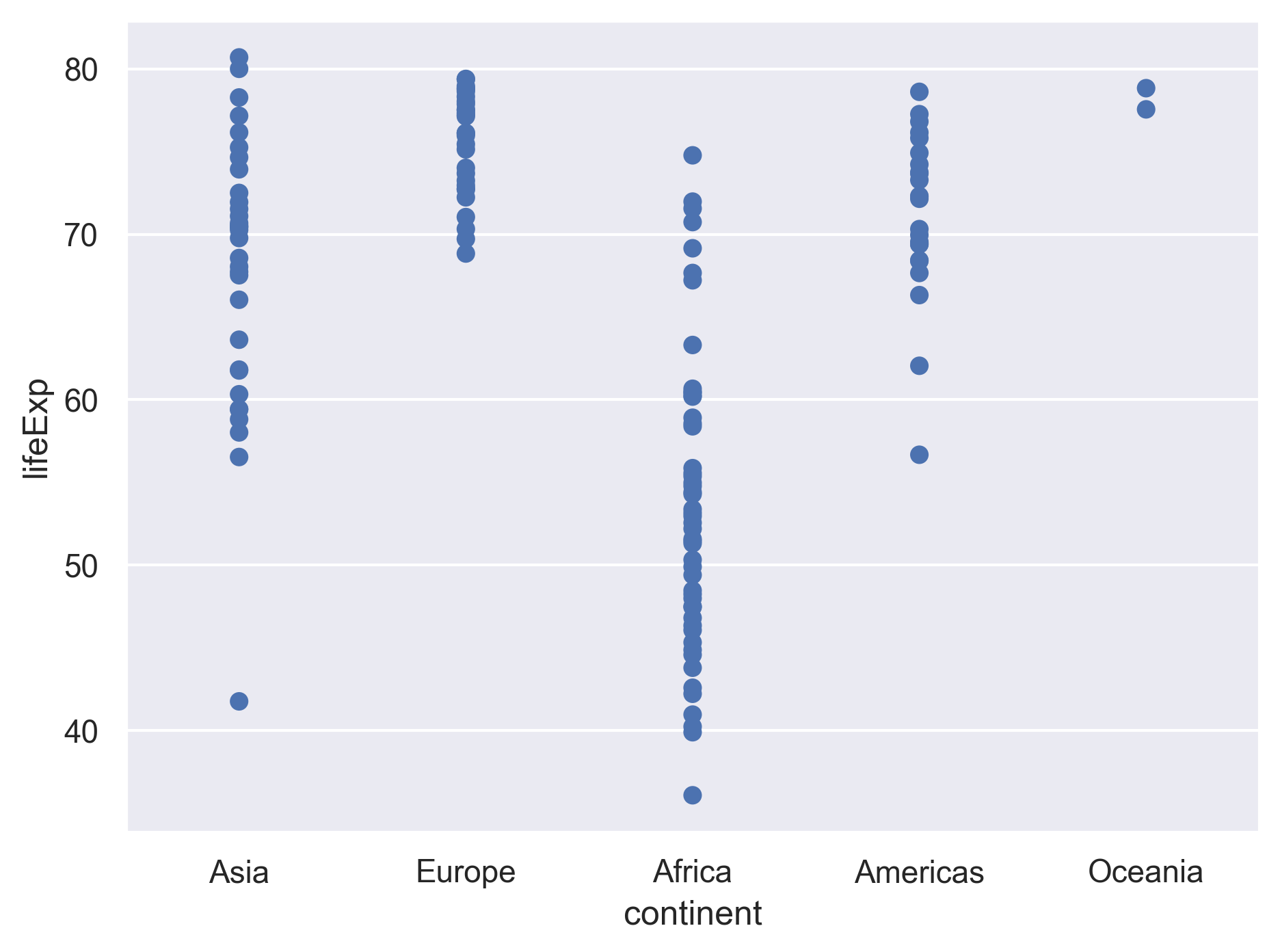

Categorical Plots

So far we’ve looked at plots with both the x and y values being numerical values in a continuous scale (e.g., life expectancy, GDP per capita, year, population). But sometimes we may want to visualize categorical data (e.g., continents).

We’ve previously used the categorical values of the continent column to color in our points and lines. But now let’s try moving that variable to the x axis.

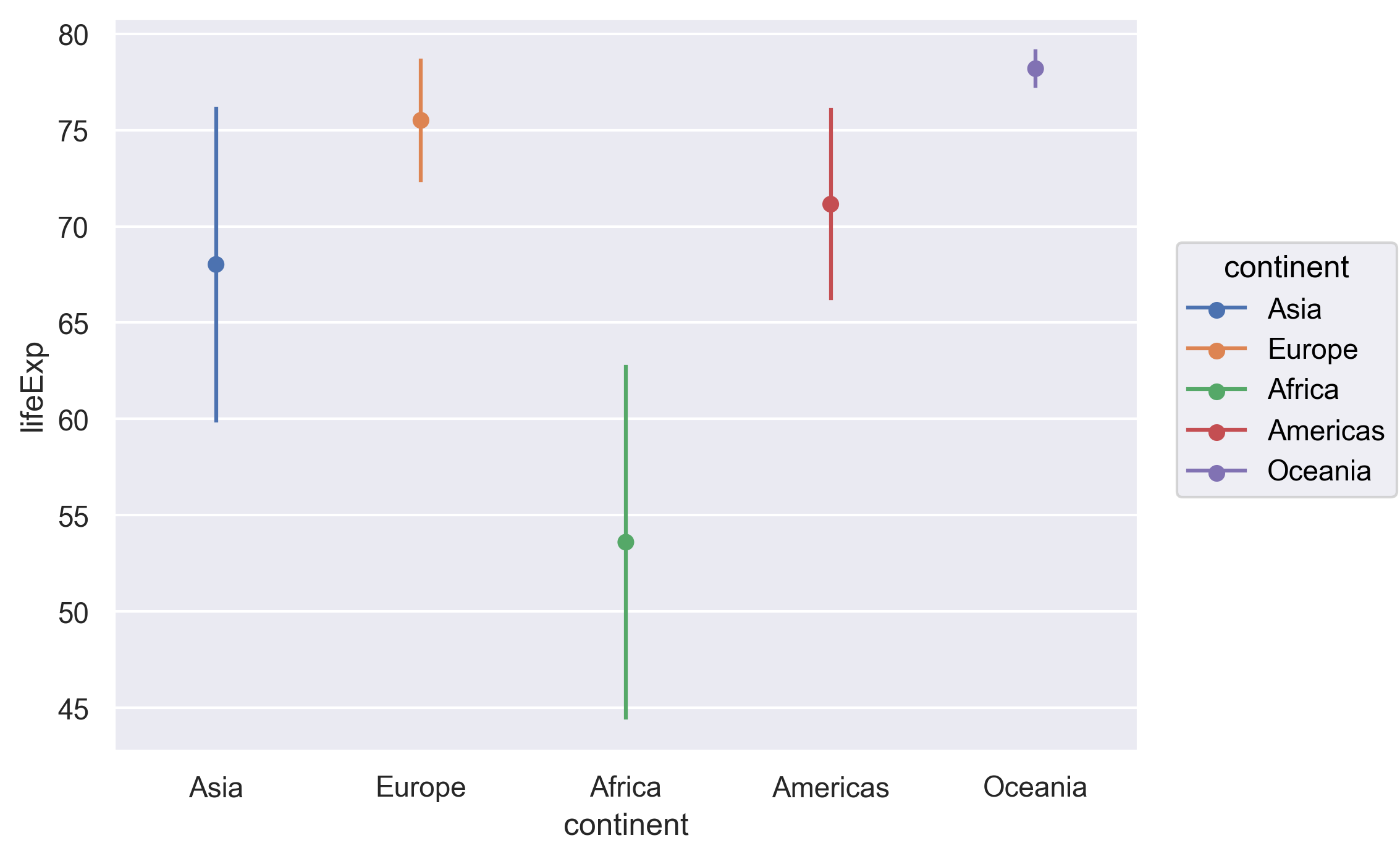

Let’s say we are curious about comparing the distribution of the life expectancy values for each of the different continents for the gapminder_1997 data.

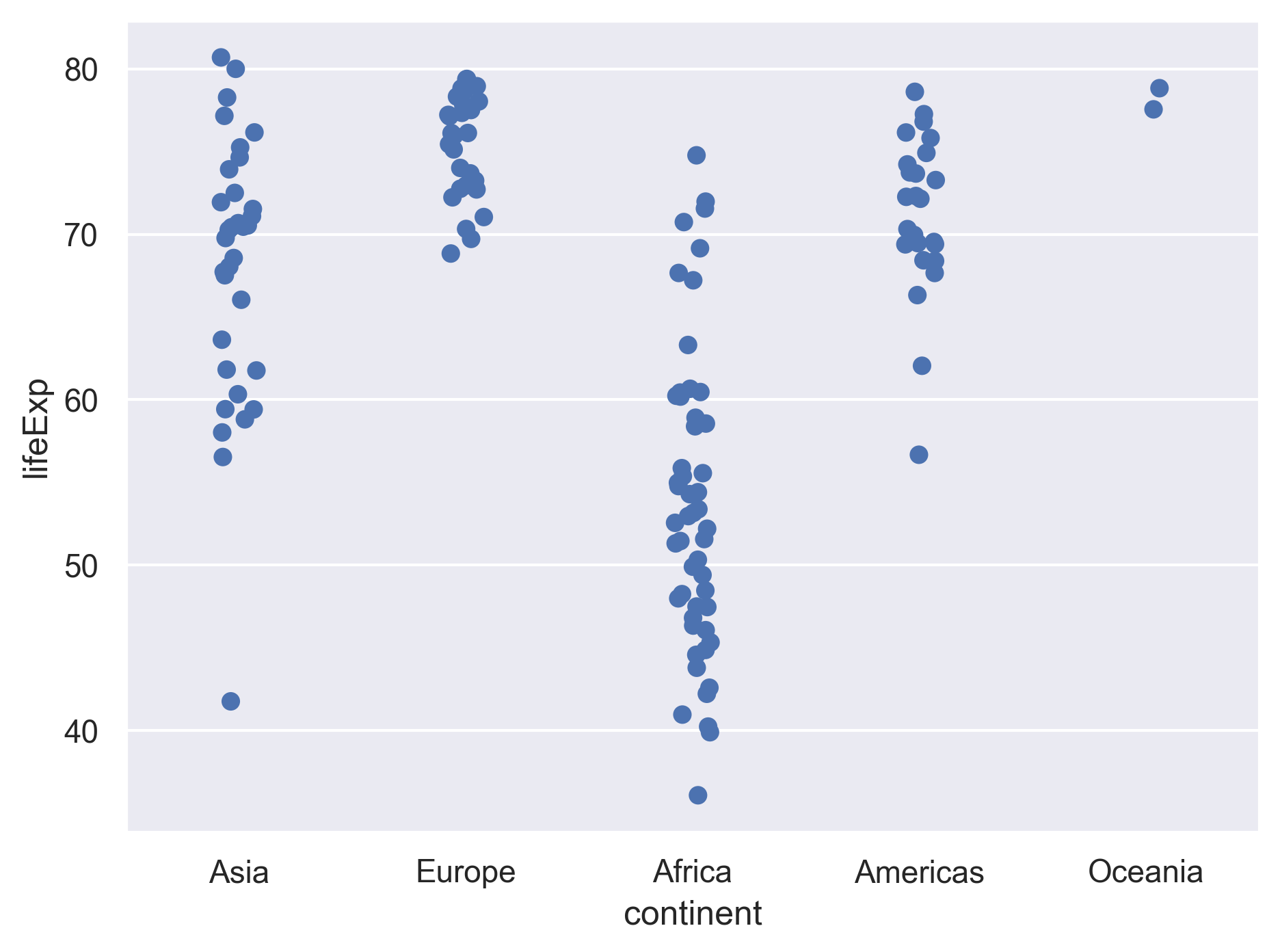

Let’s map the continent to the x axis and the life expectancy to the y axis. Let’s use the dot marks to represent the data.

(

so.Plot(gapminder_1997,

x='continent',

y='lifeExp')

.add(so.Dot())

)

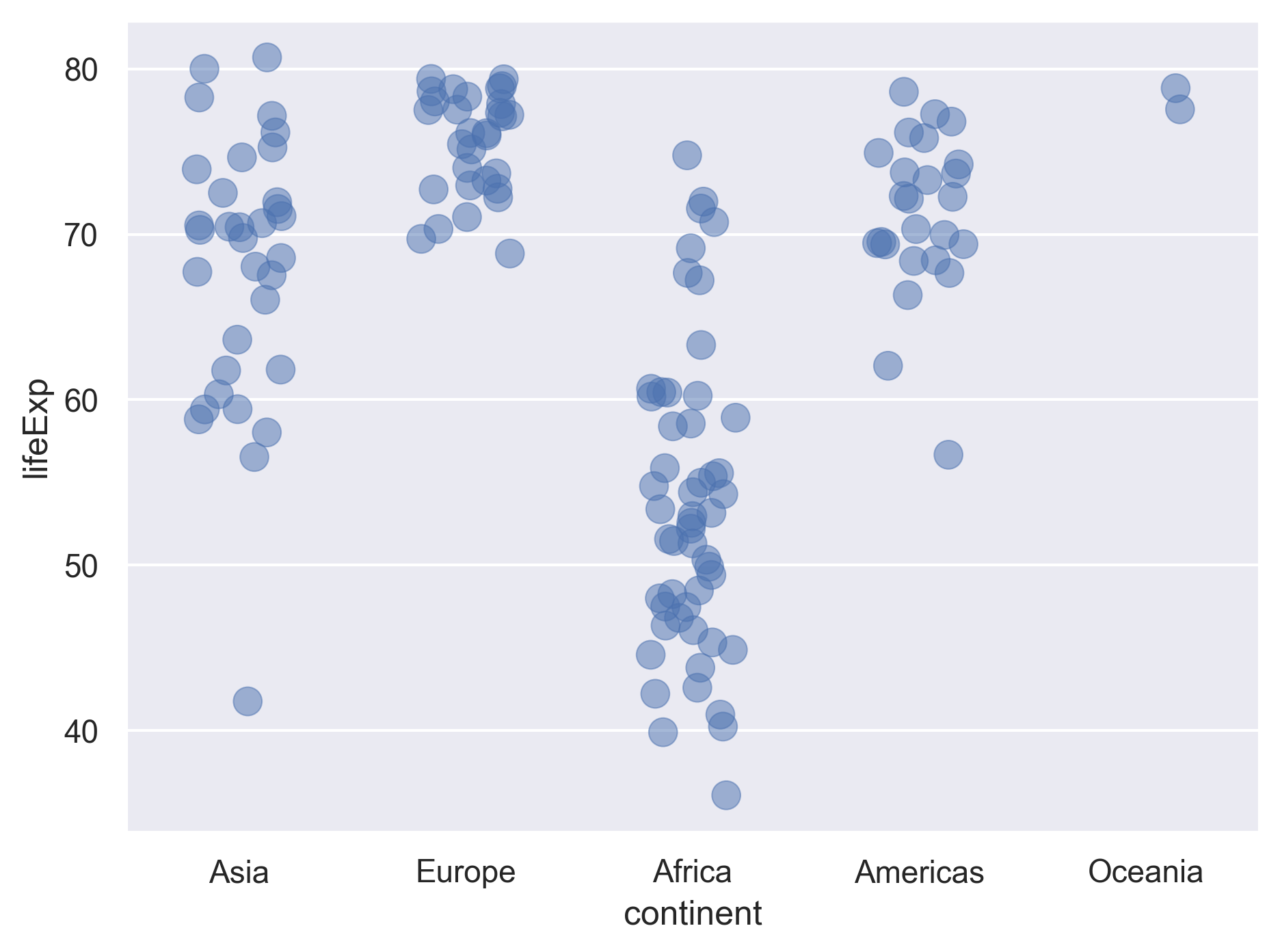

We see that there is some overplotting as countries from the same continents are aligned vertically like a strip of kebab, making it hard to see the dots in some dense areas. The seaborn objects interface leaves it to us to specify how we would like the overplotting to be handled. A common treatment is to spread (or “jitter”) the dots within each group by adding a little random displacement along the categorical axis. The result is sometimes called a “jitter plot”.

Here we can simply add so.Jitter().

(

so.Plot(gapminder_1997,

x='continent',

y='lifeExp')

.add(so.Dot(), so.Jitter())

)

We can control the amount of jitter by setting the width argument.

Let’s also change the size and opacity of the dots.

(

so.Plot(gapminder_1997,

x='continent',

y='lifeExp')

.add(so.Dot(pointsize=10, alpha=.5), so.Jitter(width=.8))

)
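The idea behind jitter can be sketched in plain Python. This is a conceptual illustration only, not seaborn’s implementation: the helper `jitter` is hypothetical, and how seaborn scales the width internally may differ.

```python
import random

def jitter(positions, width=0.8, seed=0):
    """Shift each position by a random amount in [-width/2, width/2]."""
    rng = random.Random(seed)  # fixed seed so the result is repeatable
    return [p + rng.uniform(-width / 2, width / 2) for p in positions]

# Five hypothetical points that all sit at category position 0 on the x axis.
original = [0, 0, 0, 0, 0]
spread = jitter(original, width=0.8)
print(spread)
```

A larger width spreads the dots further apart; a width of 0 leaves them stacked in a single column.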

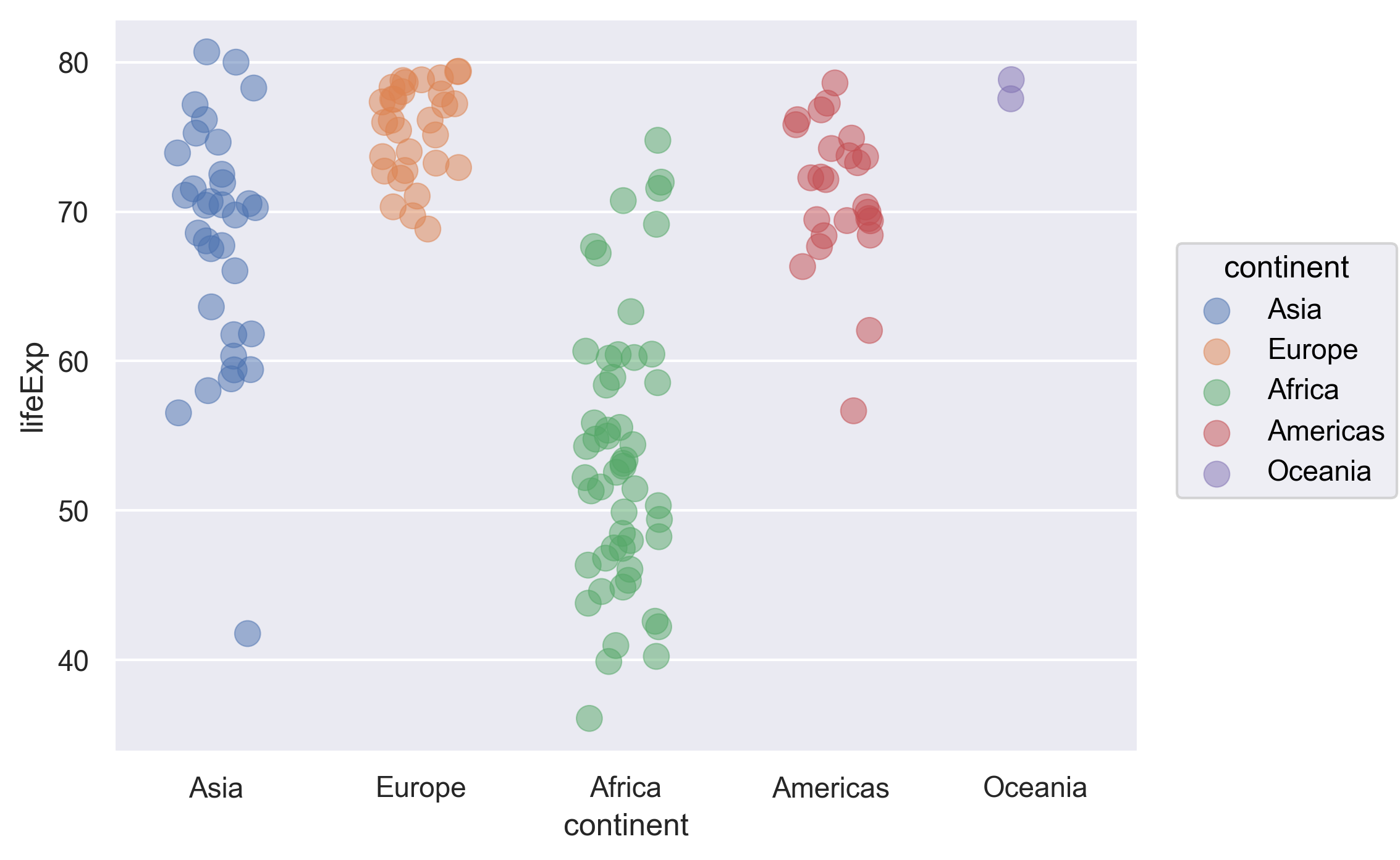

Lastly, let’s further map the continents to the color of the dots.

(

so.Plot(gapminder_1997,

x='continent',

y='lifeExp',

color='continent')

.add(so.Dot(pointsize=10, alpha=.5), so.Jitter(width=.8))

)

This type of visualization makes it easy to compare the distribution (e.g., range, spread) of values across groups.

Bonus Exercise: Other categorical plots

Let’s plot the range of the life expectancy for each continent in terms of its mean plus/minus one standard deviation.

Example solution

( so.Plot(gapminder_1997, x='continent', y='lifeExp', color='continent') .add(so.Range(), so.Est(func='mean', errorbar='sd')) .add(so.Dot(), so.Agg()) )

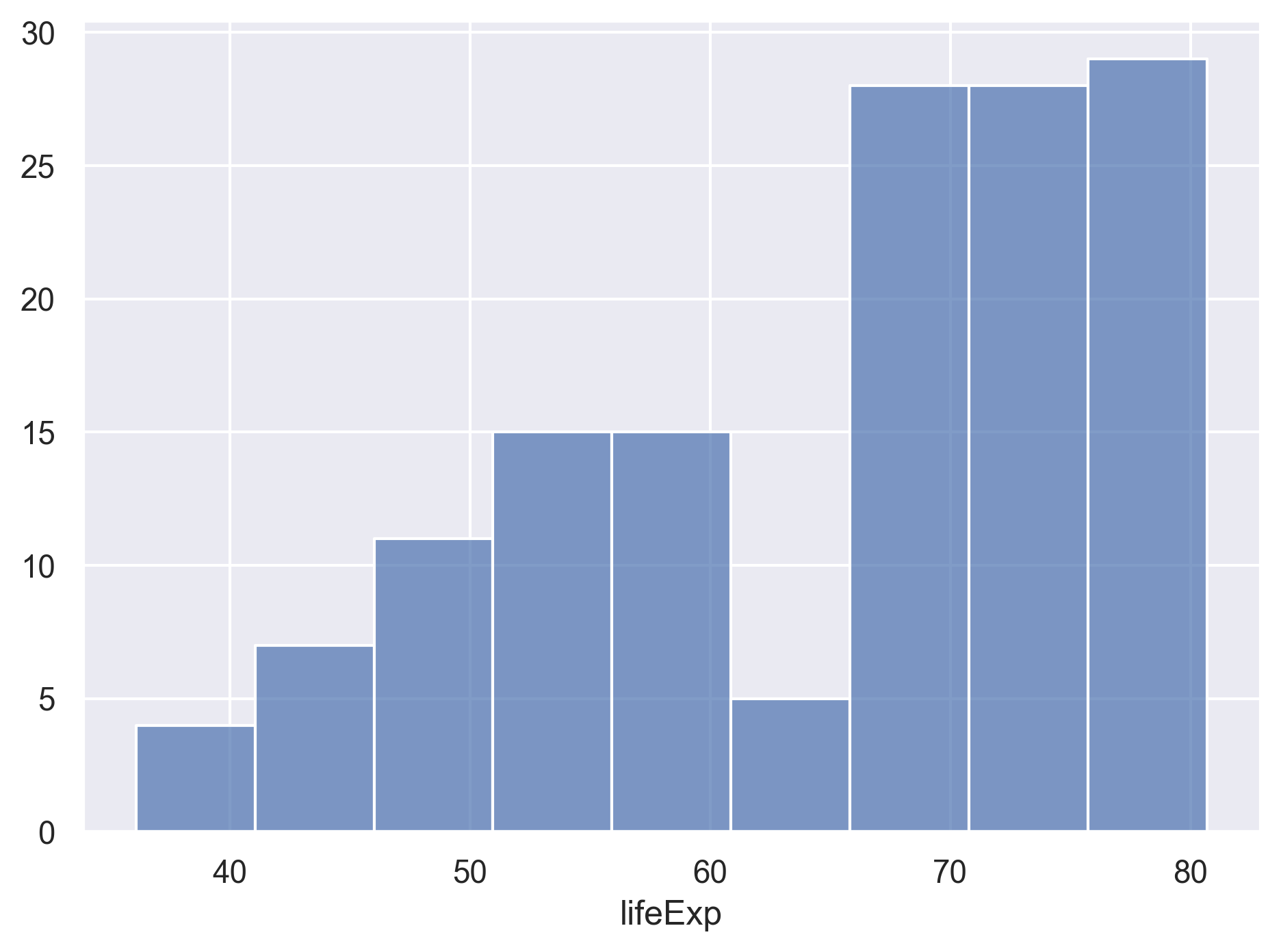

Univariate Plots

We jumped right into making plots with multiple columns.

But what if we wanted to take a look at just one column?

In that case, we only need to specify a mapping for x and choose an appropriate mark.

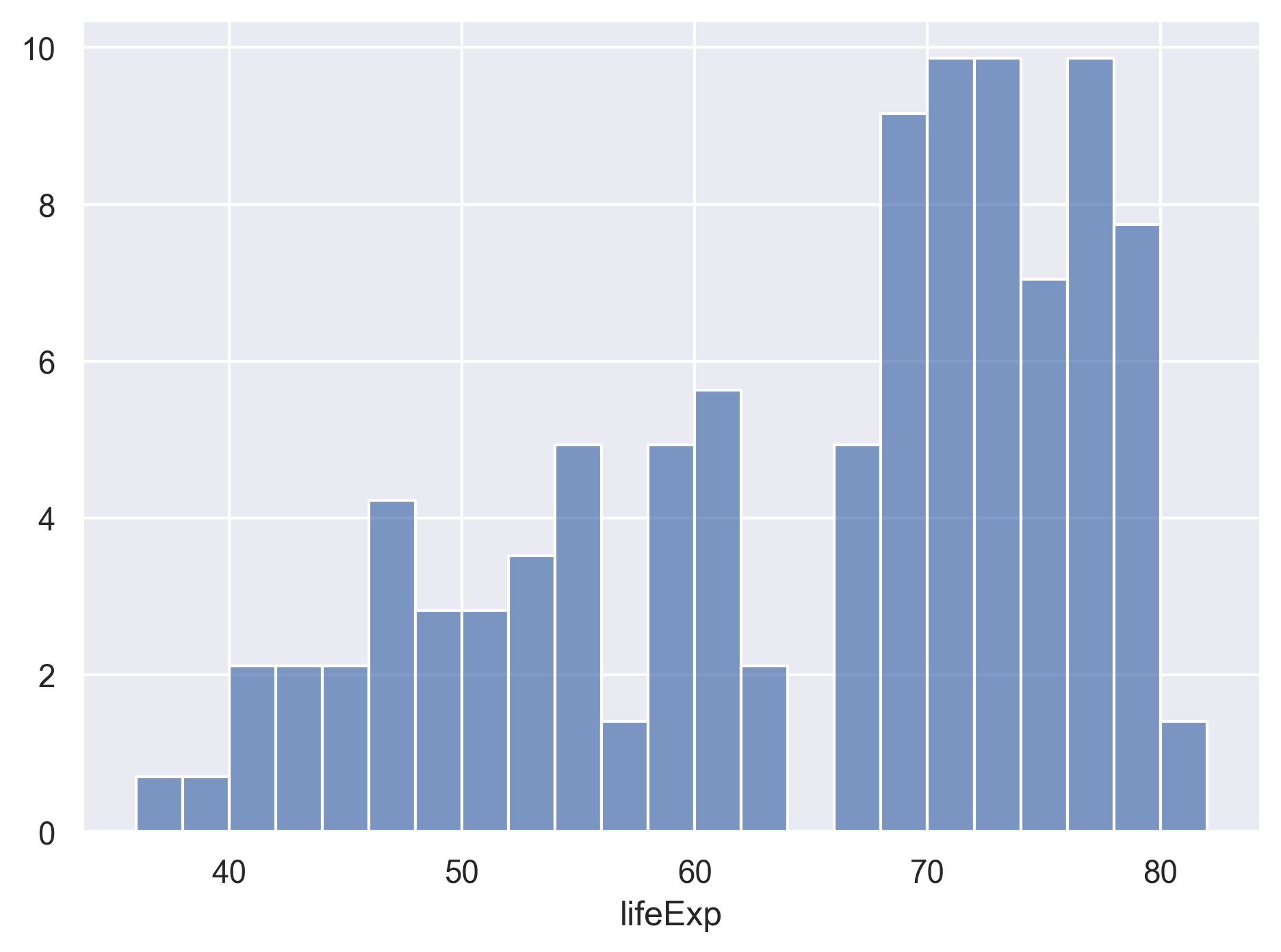

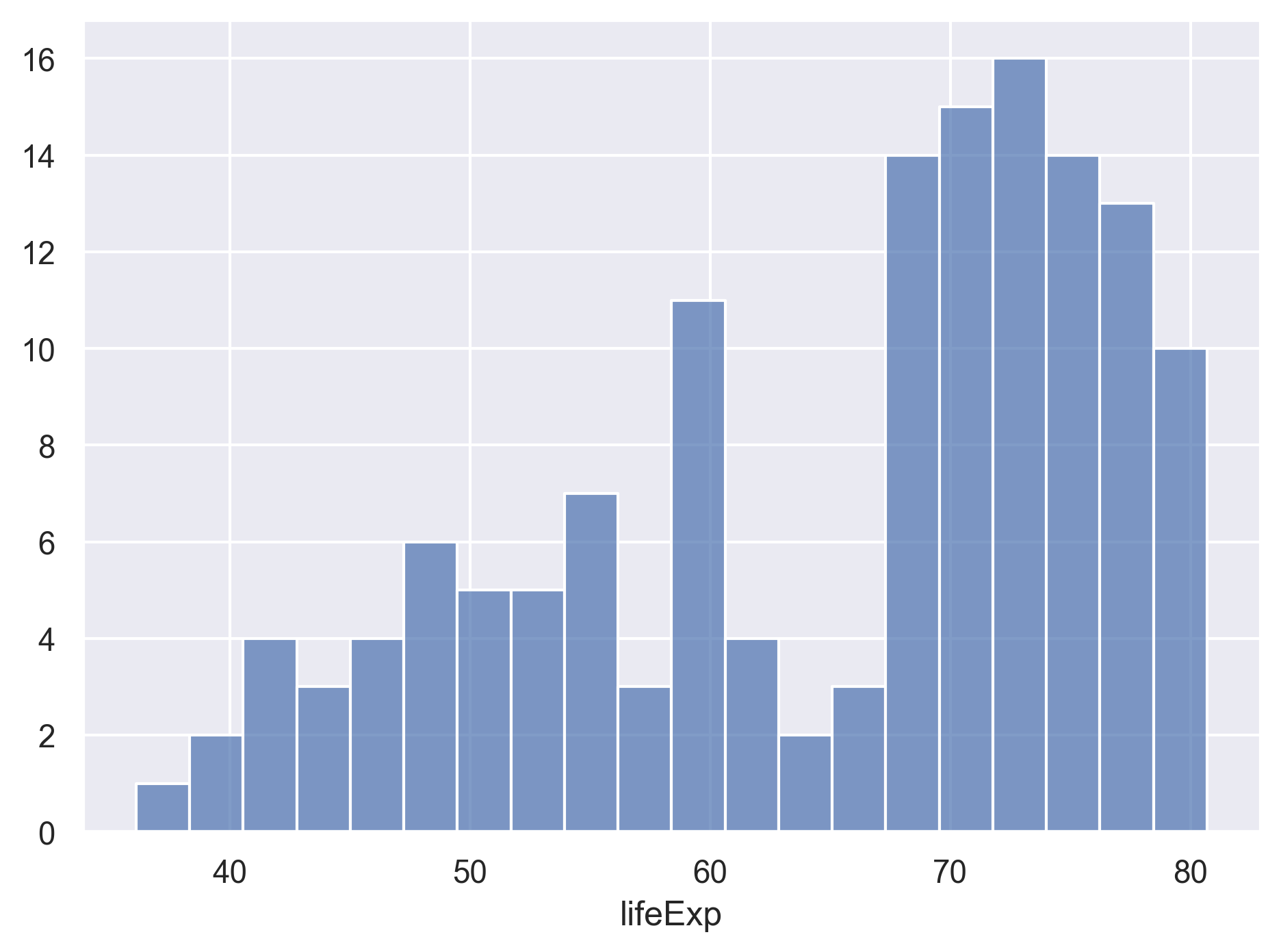

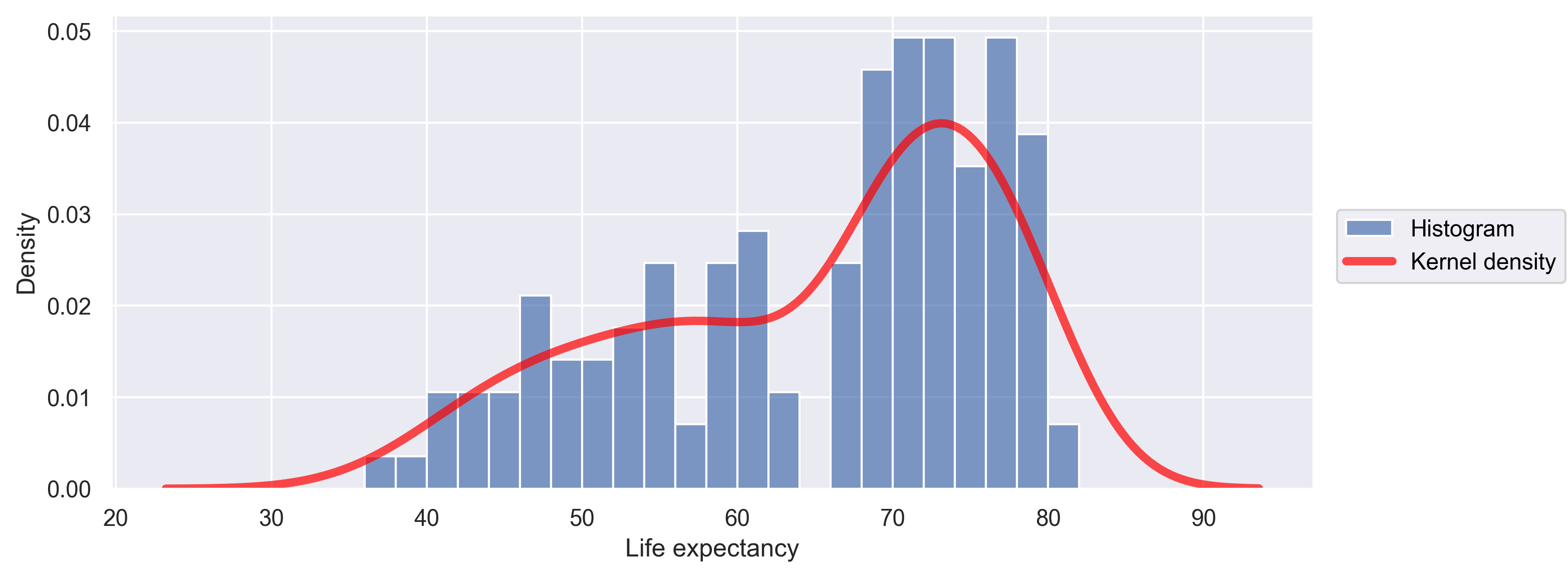

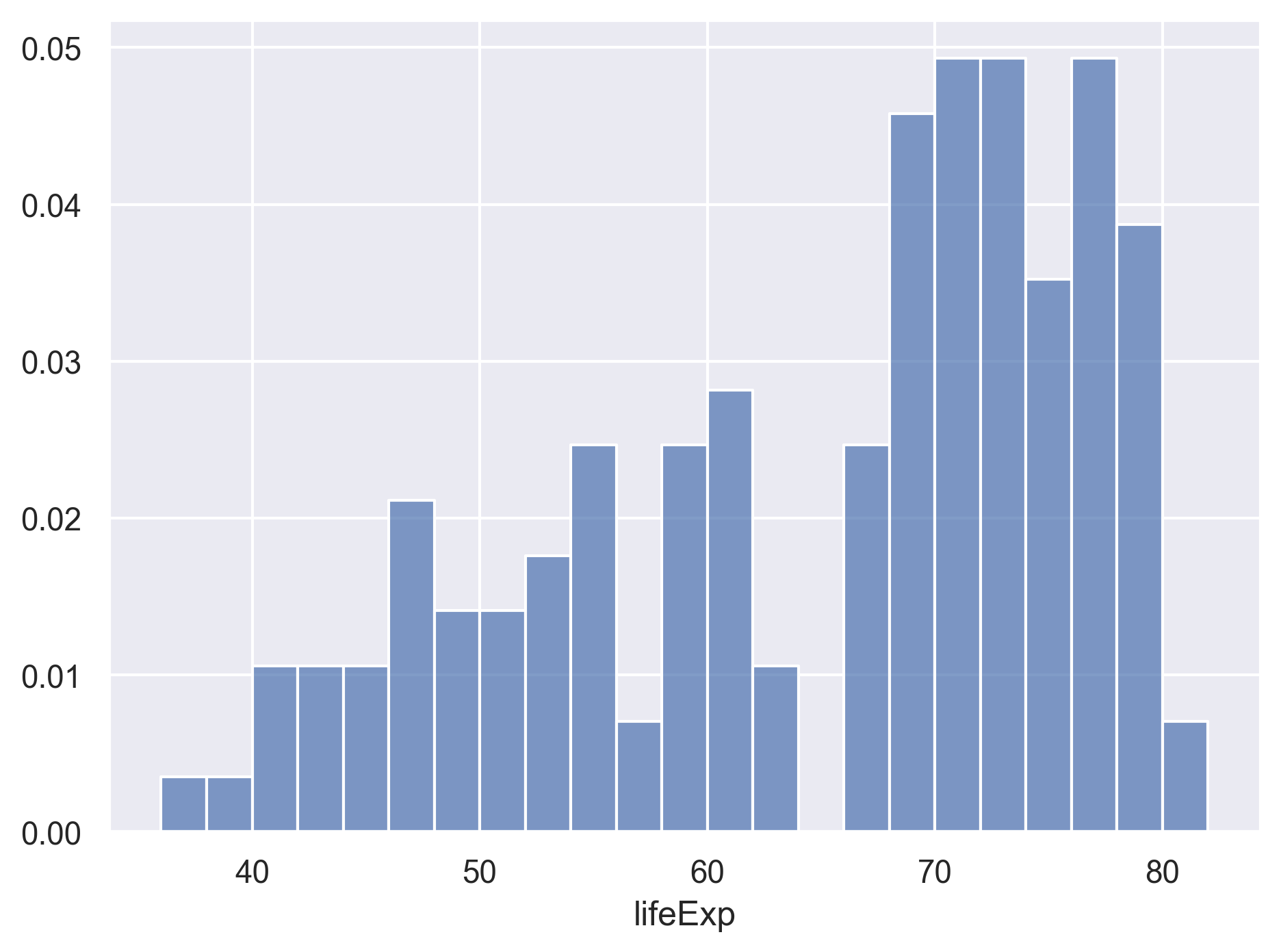

Let’s start with a histogram to see the range and spread of life expectancy.

(

so.Plot(data=gapminder_1997,

x='lifeExp')

.add(so.Bars(), so.Hist())

)

Histograms can look very different depending on the number of bins you decide to draw.

By default, the number of bins is chosen automatically (bins='auto').

Let’s try setting a different value by explicitly passing a bins argument to Hist.

(

so.Plot(data=gapminder_1997,

x='lifeExp')

.add(so.Bars(), so.Hist(bins=20))

)

You can try different values like 5 or 50 to see how the plot changes.

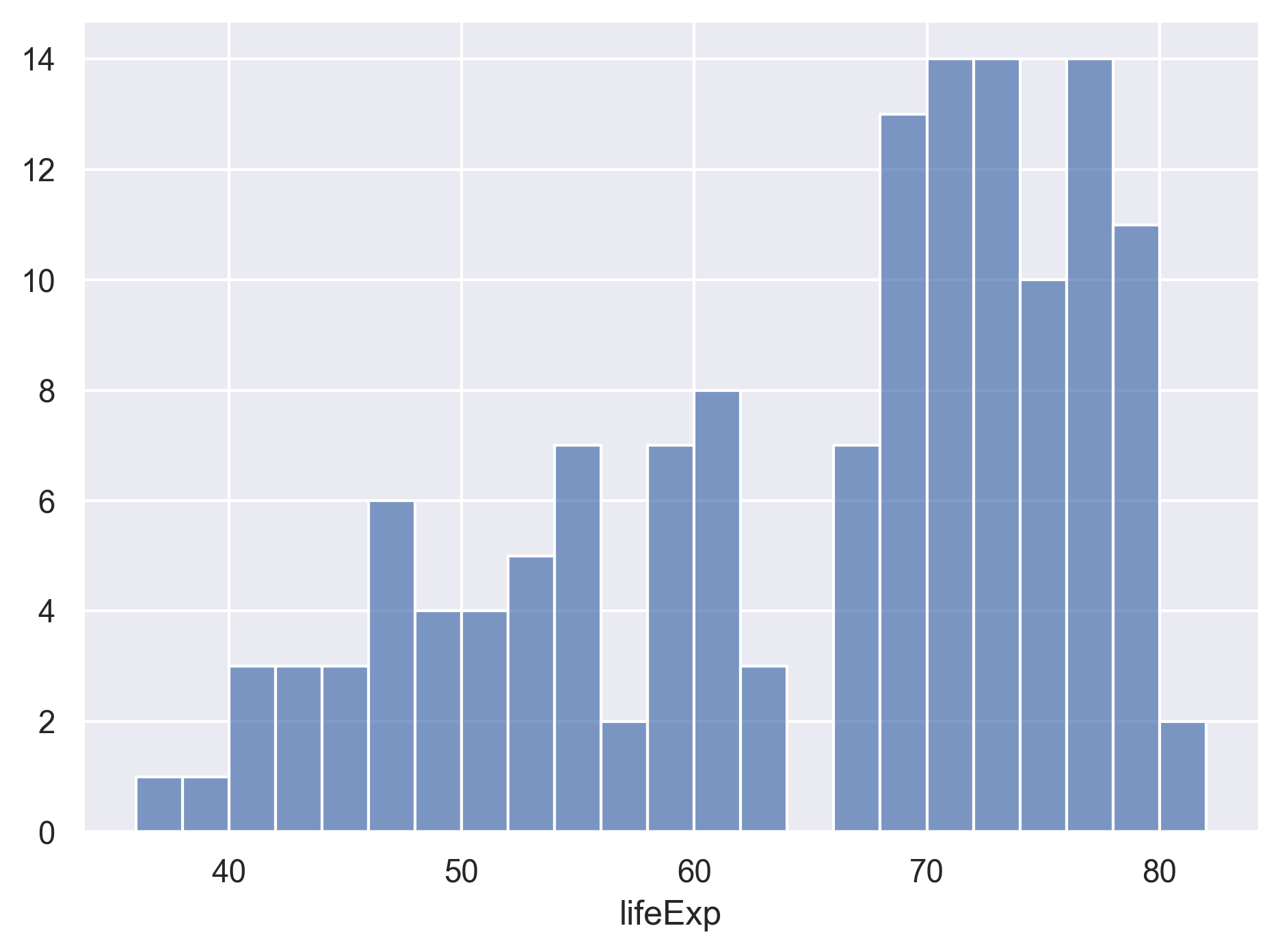

Sometimes we don’t really care about the total number of bins, but rather the bin width and end points.

For example, we may want the bins at 40-45, 45-50, 50-55, and so on.

In this case, we can set the binwidth and binrange arguments to the Hist.

(

so.Plot(data=gapminder_1997,

x='lifeExp')

.add(so.Bars(), so.Hist(binwidth=5, binrange=(0, 100)))

)
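To make the effect of binwidth and binrange concrete, here is a small sketch in plain Python. The helpers `bin_edges` and `count_per_bin` and the sample values are hypothetical; they illustrate the binning idea rather than how seaborn computes it.

```python
def bin_edges(binwidth, binrange):
    """Return evenly spaced bin edges covering binrange, both ends included."""
    start, stop = binrange
    n_bins = round((stop - start) / binwidth)
    return [start + i * binwidth for i in range(n_bins + 1)]

def count_per_bin(values, edges):
    """Count values in each bin [left, right); the last bin also includes its right edge."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            is_last = i == len(counts) - 1
            if edges[i] <= v < edges[i + 1] or (is_last and v == edges[-1]):
                counts[i] += 1
                break
    return counts

edges = bin_edges(binwidth=5, binrange=(0, 100))
# Hypothetical life expectancy values, made up for illustration.
values = [41.8, 44.9, 45.0, 52.3, 77.1, 78.6]
counts = count_per_bin(values, edges)
print(edges[8:11], counts[8:11])
```

With a bin width of 5 over the range 0 to 100 there are 20 bins, and a value of exactly 45.0 falls in the 45-50 bin rather than the 40-45 bin.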

Changing the aggregate statistics

By default the y axis shows the number of observations in each bin, that is, stat='count'. Sometimes we are more interested in other aggregate statistics rather than count, such as the percentage of the observations in each bin. Check the documentation of so.Hist and see what other aggregate statistics are offered, and change the histogram to show the percentages instead.

Solution

( so.Plot(data=gapminder_1997, x='lifeExp') .add(so.Bars(), so.Hist(stat='percent', binwidth=5, binrange=(0, 100))) )

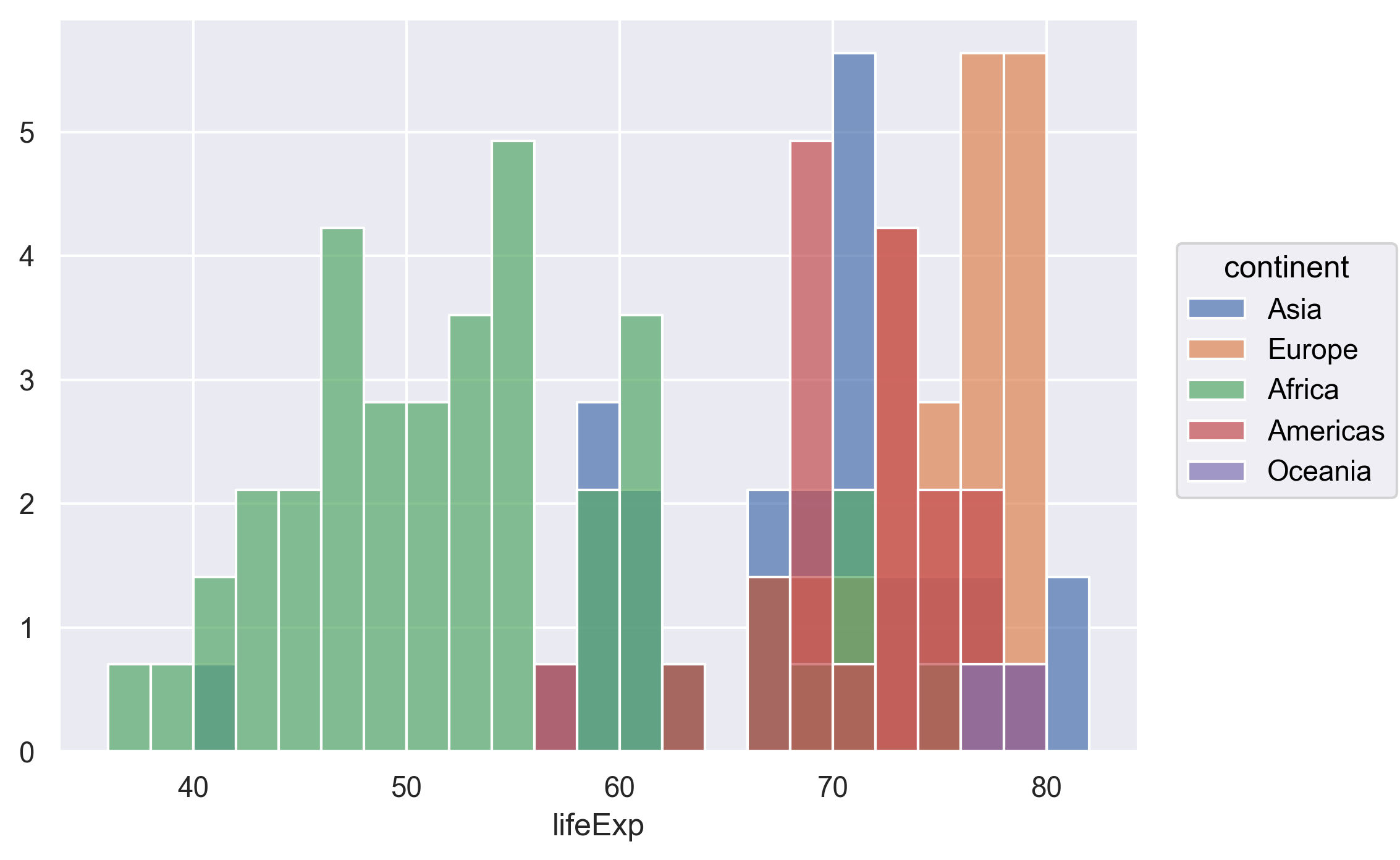

If we want to see a break-down of the life expectancy distribution by each continent, we can add the continent to the color property.

(

so.Plot(data=gapminder_1997,

x='lifeExp',

color='continent')

.add(so.Bars(), so.Hist(stat='percent', binwidth=5, binrange=(0, 100)))

)

Hmm, it looks like the bins for each continent are on top of each other.

It’s not very easy to see the distributions.

Again, we can tell seaborn how overplotting should be handled.

In this case we can use so.Stack() to stack the bins.

This type of chart is often called a “stacked bar chart”.

(

so.Plot(data=gapminder_1997,

x='lifeExp',

color='continent')

.add(so.Bars(), so.Hist(stat='percent', binwidth=5, binrange=(0, 100)), so.Stack())

)

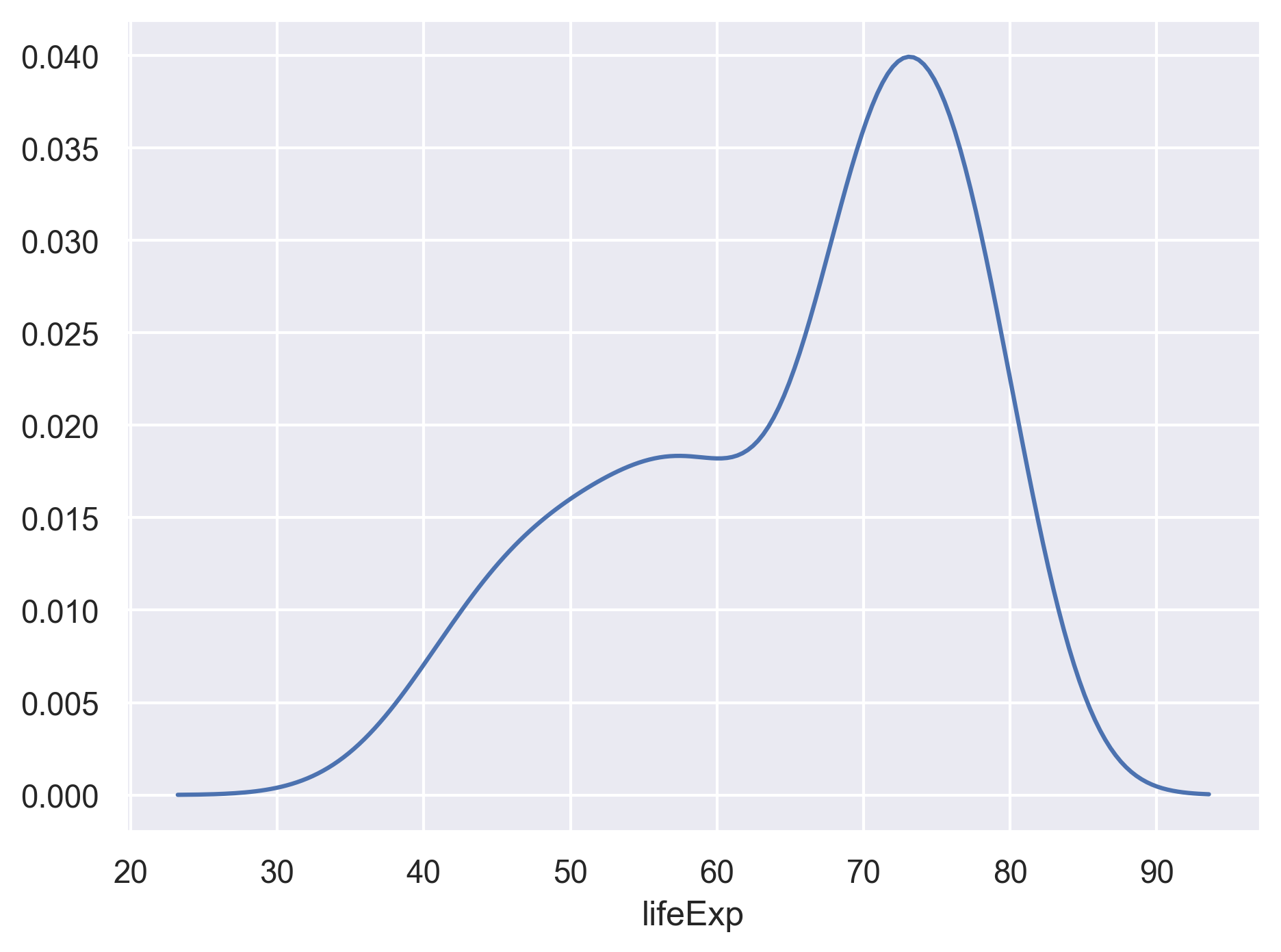

Other than the histogram, we can also use kernel density estimation, a smoothing technique that captures the general shape of the distribution of a continuous variable.

We can add a line so.Line() that represents the kernel density estimates so.KDE().

(

so.Plot(data=gapminder_1997,

x='lifeExp')

.add(so.Line(), so.KDE())

)

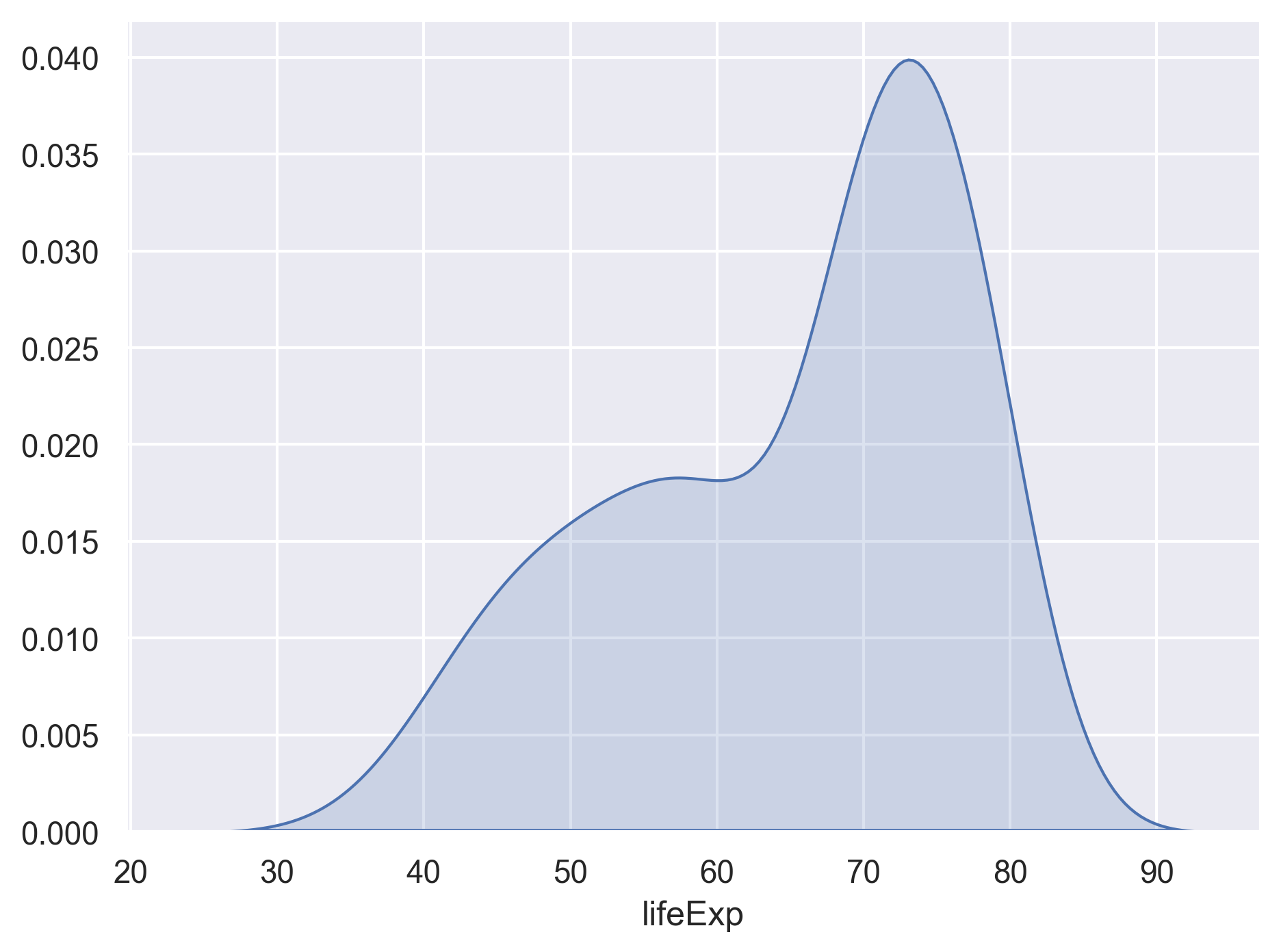

Alternatively, we can also add an area so.Area() that represents the kernel density estimates so.KDE().

(

so.Plot(data=gapminder_1997,

x='lifeExp')

.add(so.Area(), so.KDE())

)

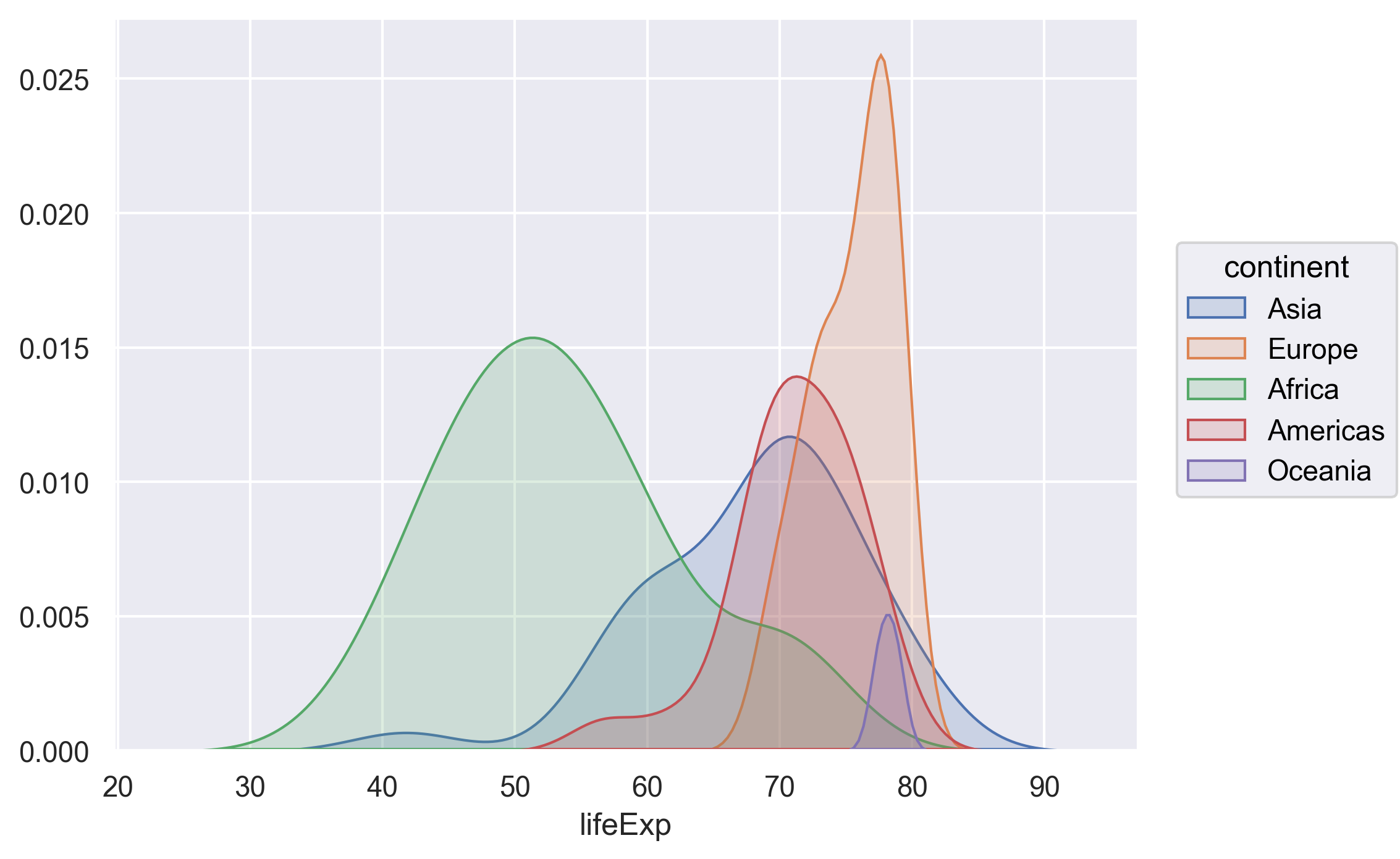

If we want to see the kernel density estimates for each continent, we can map continents to the color in the plot function.

(

so.Plot(data=gapminder_1997,

x='lifeExp',

color='continent')

.add(so.Area(), so.KDE())

)

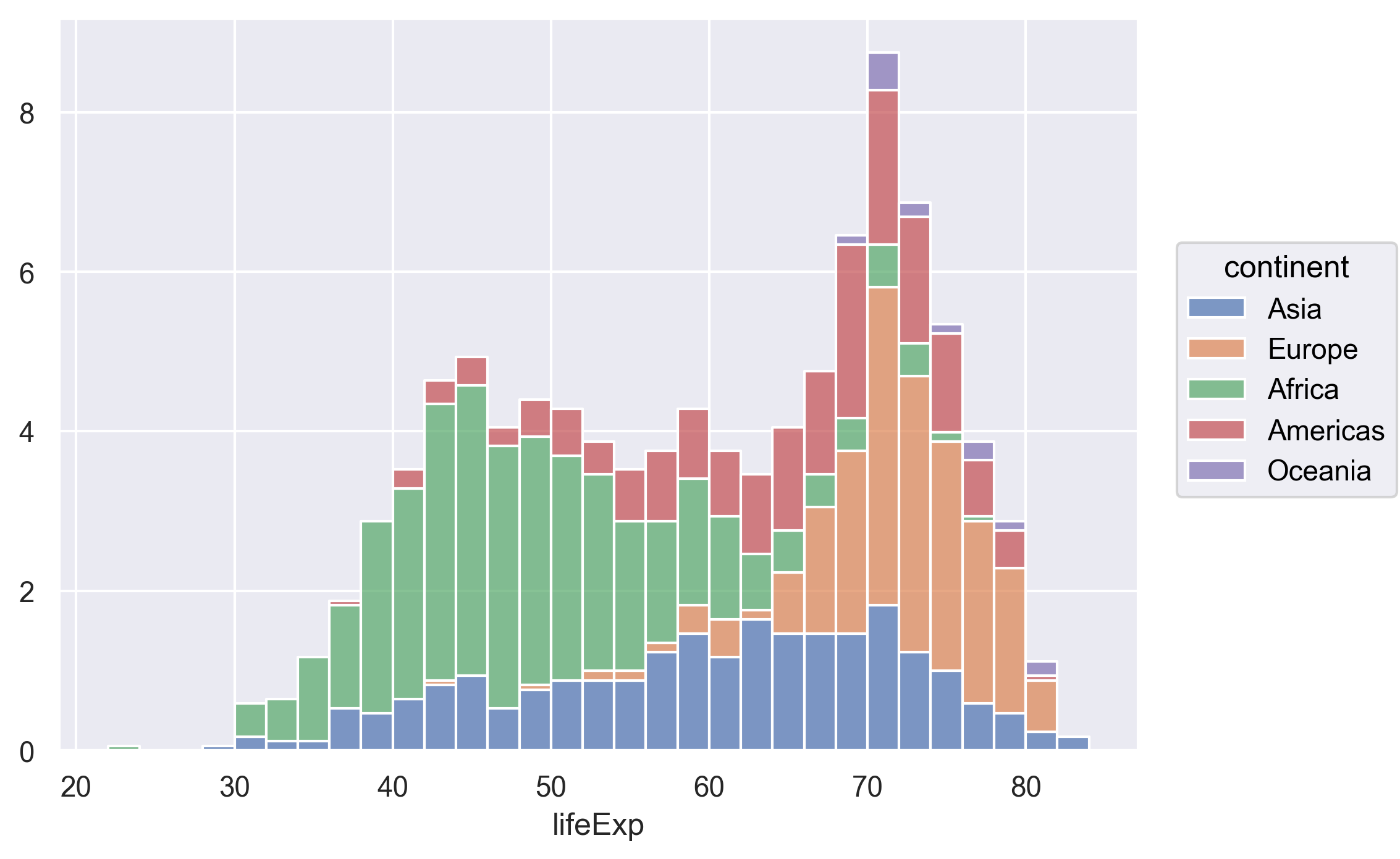

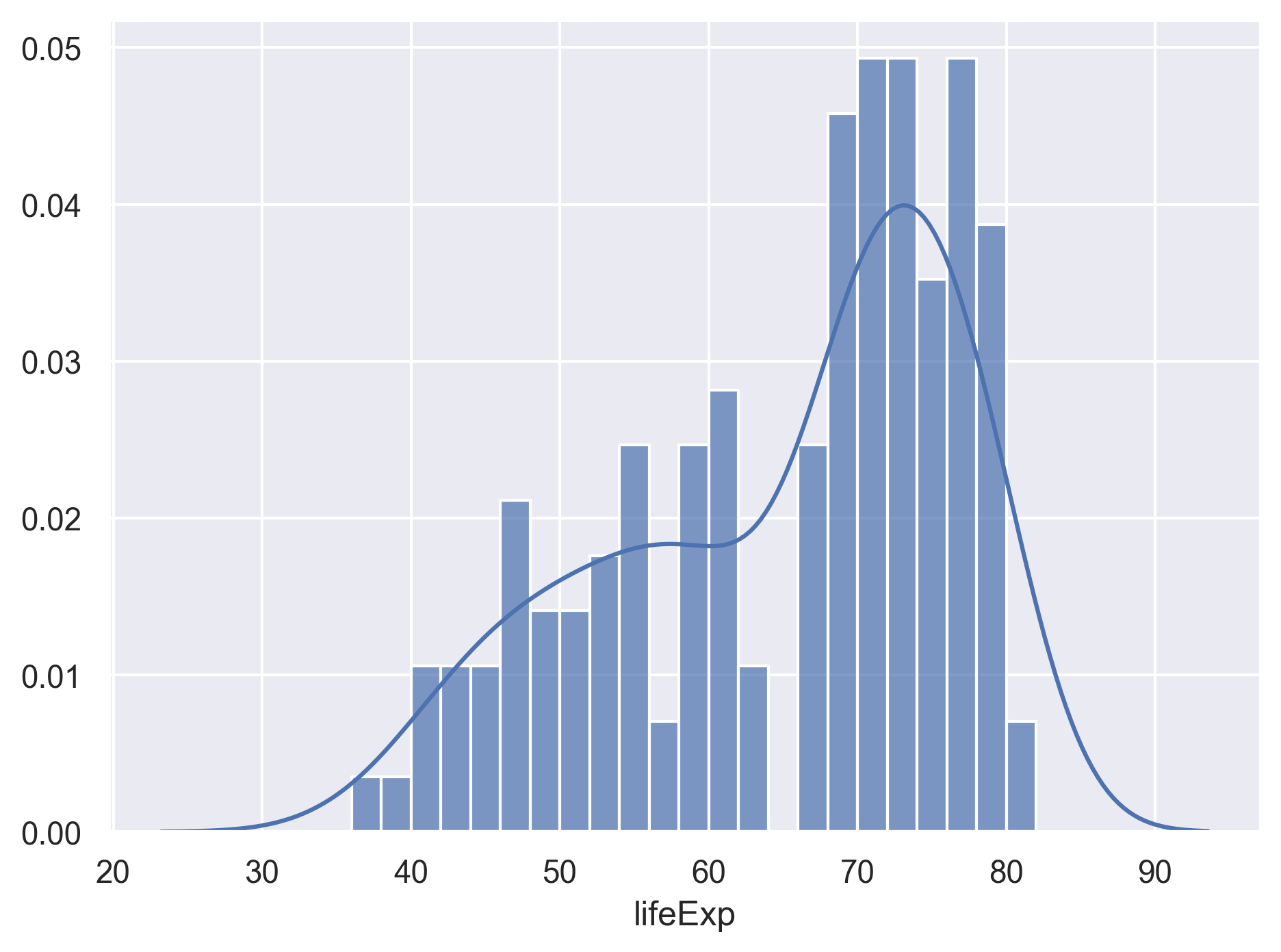

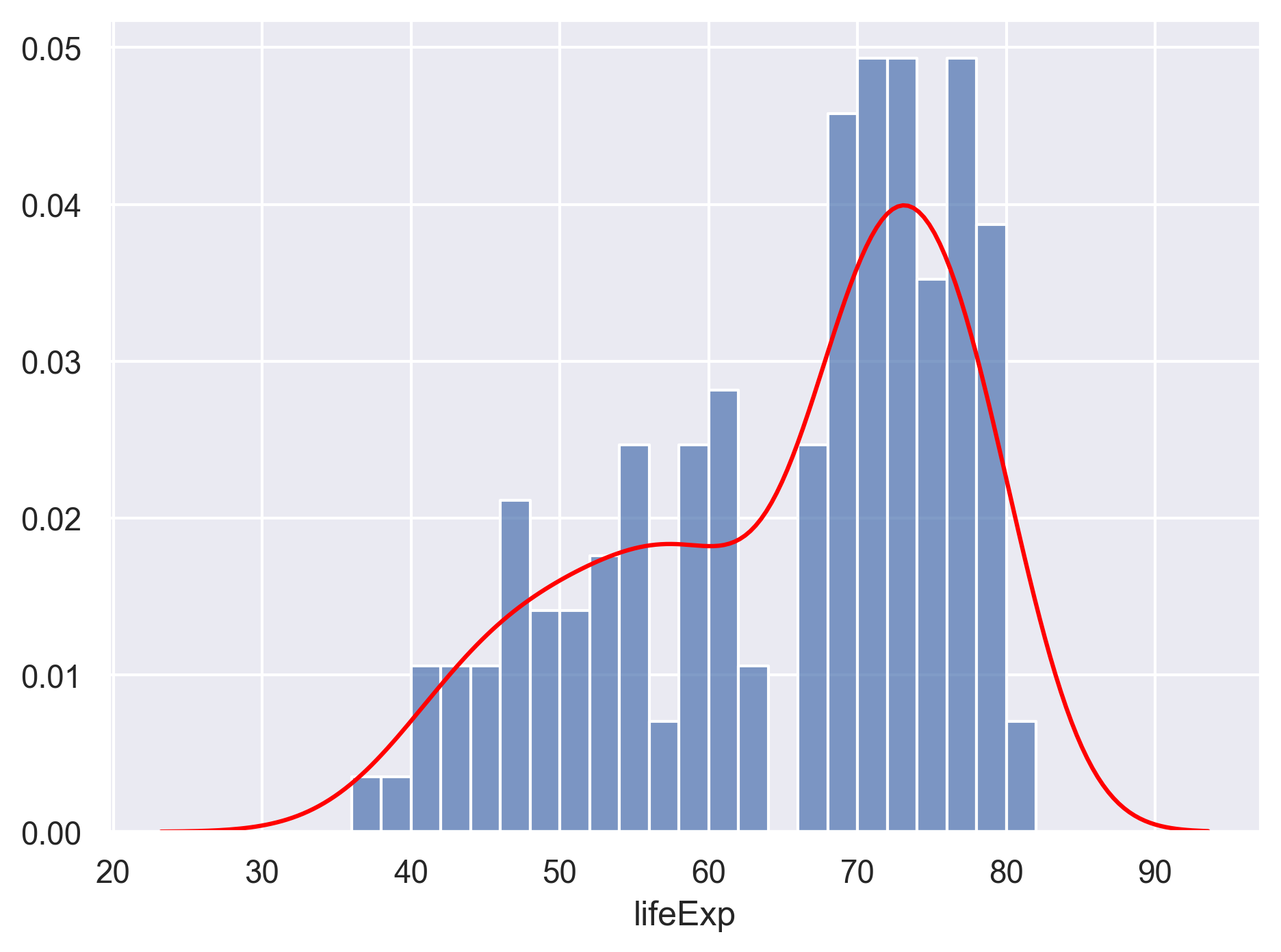

We can overlay multiple visualization layers to the same plot.

Here let’s combine the histogram and the kernel density estimate.

Note we will need to change the stat argument of the so.Hist() to density, so that the y axis values of the histogram are comparable with the kernel density.

(

so.Plot(data=gapminder_1997,

x='lifeExp')

.add(so.Bars(), so.Hist(stat='density', binwidth=5, binrange=(0, 100)))

.add(so.Line(), so.KDE())

)
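Why does the density statistic make the histogram comparable with the kernel density estimate? A density histogram is scaled so that the total area of its bars is 1, just like the area under a density curve. The sketch below uses a hypothetical helper `normalize` and made-up counts to show the arithmetic; it is not seaborn’s code.

```python
def normalize(counts, binwidth, stat):
    """Rescale raw bin counts to percentages or densities."""
    total = sum(counts)
    if stat == "percent":
        # Bar heights sum to 100.
        return [100 * c / total for c in counts]
    if stat == "density":
        # Bar areas (height times bin width) sum to 1.
        return [c / (total * binwidth) for c in counts]
    return list(counts)

counts = [2, 1, 1, 2]  # hypothetical counts for four bins of width 5
percent = normalize(counts, 5, "percent")
density = normalize(counts, 5, "density")
area = sum(d * 5 for d in density)
print(percent, density, area)
```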

Lastly, we can make a few further improvements to the plot.

- Specify the label parameter for the two data layers (i.e., the lines starting with .add()), so they will show up in a “layer legend”.

- Change the line color, width, and opacity.

- Add x and y axis labels.

- Change the size of the plot by calling the layout() method.

(

so.Plot(data=gapminder_1997,

x='lifeExp')

.add(so.Bars(), so.Hist(stat='density', binwidth=5, binrange=(0, 100)), label='Histogram')

.add(so.Line(color='red', linewidth=4, alpha=.7), so.KDE(), label='Kernel density')

.label(x="Life expectancy", y="Density")

.layout(size=(9, 4))

)

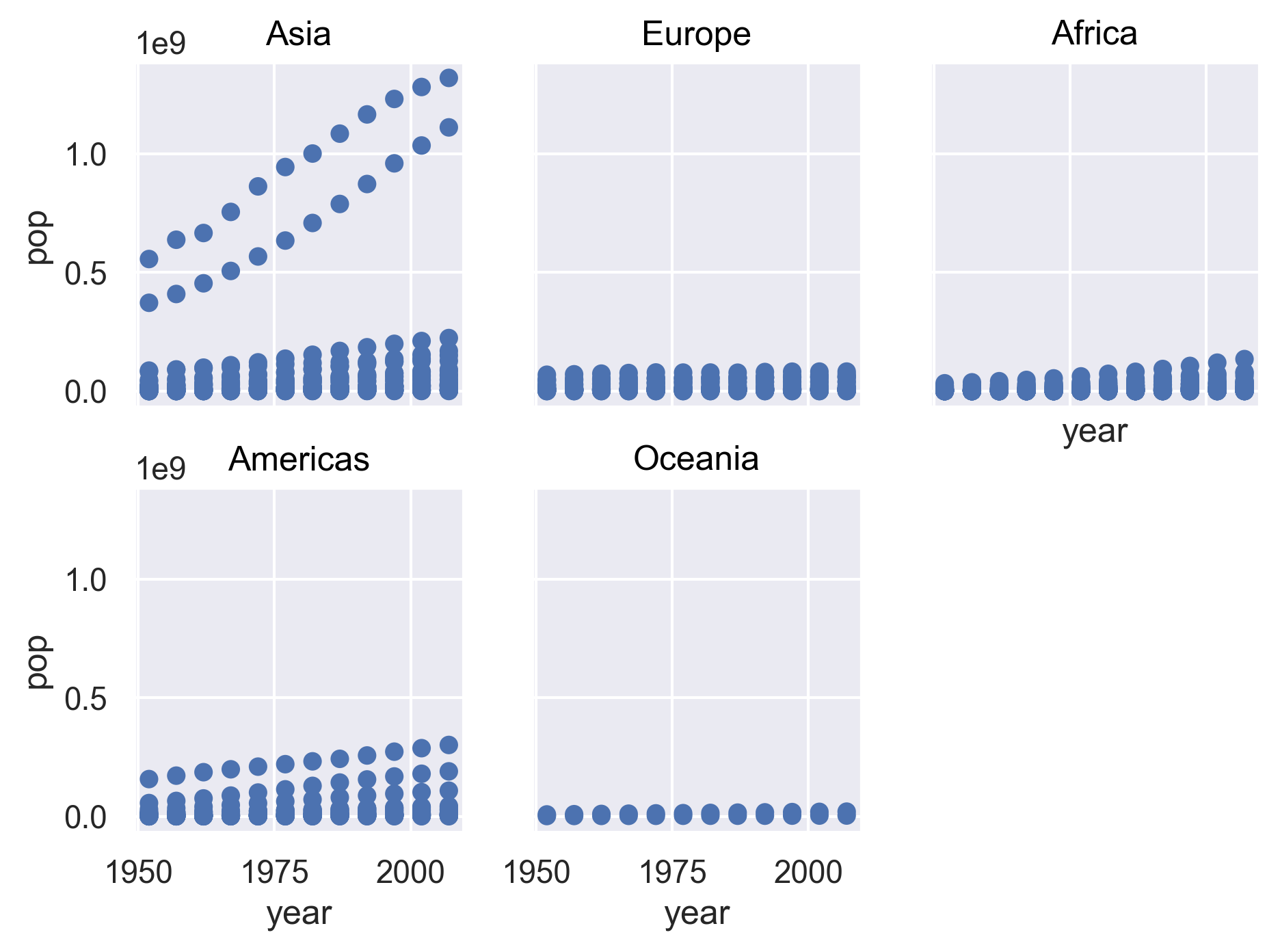

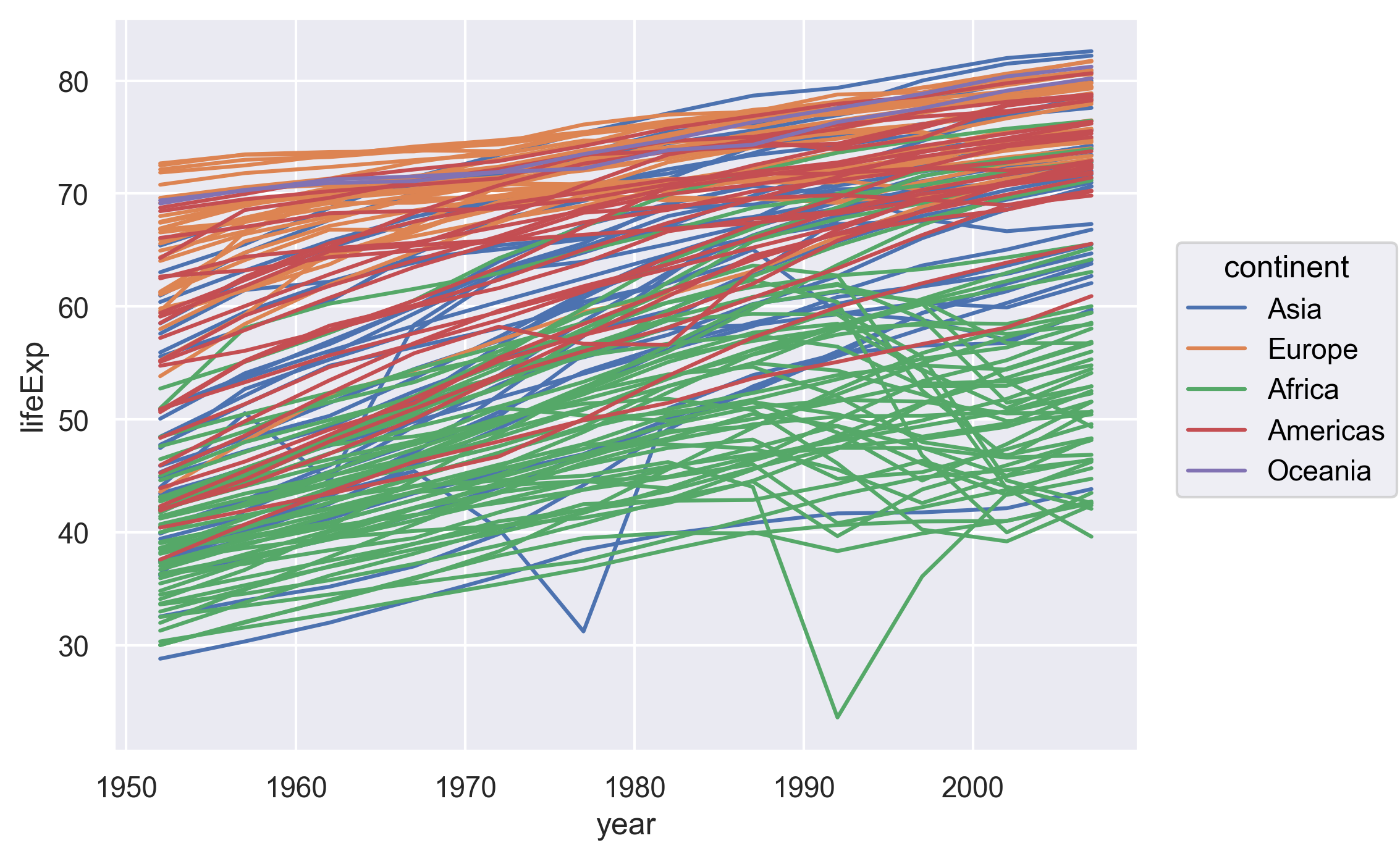

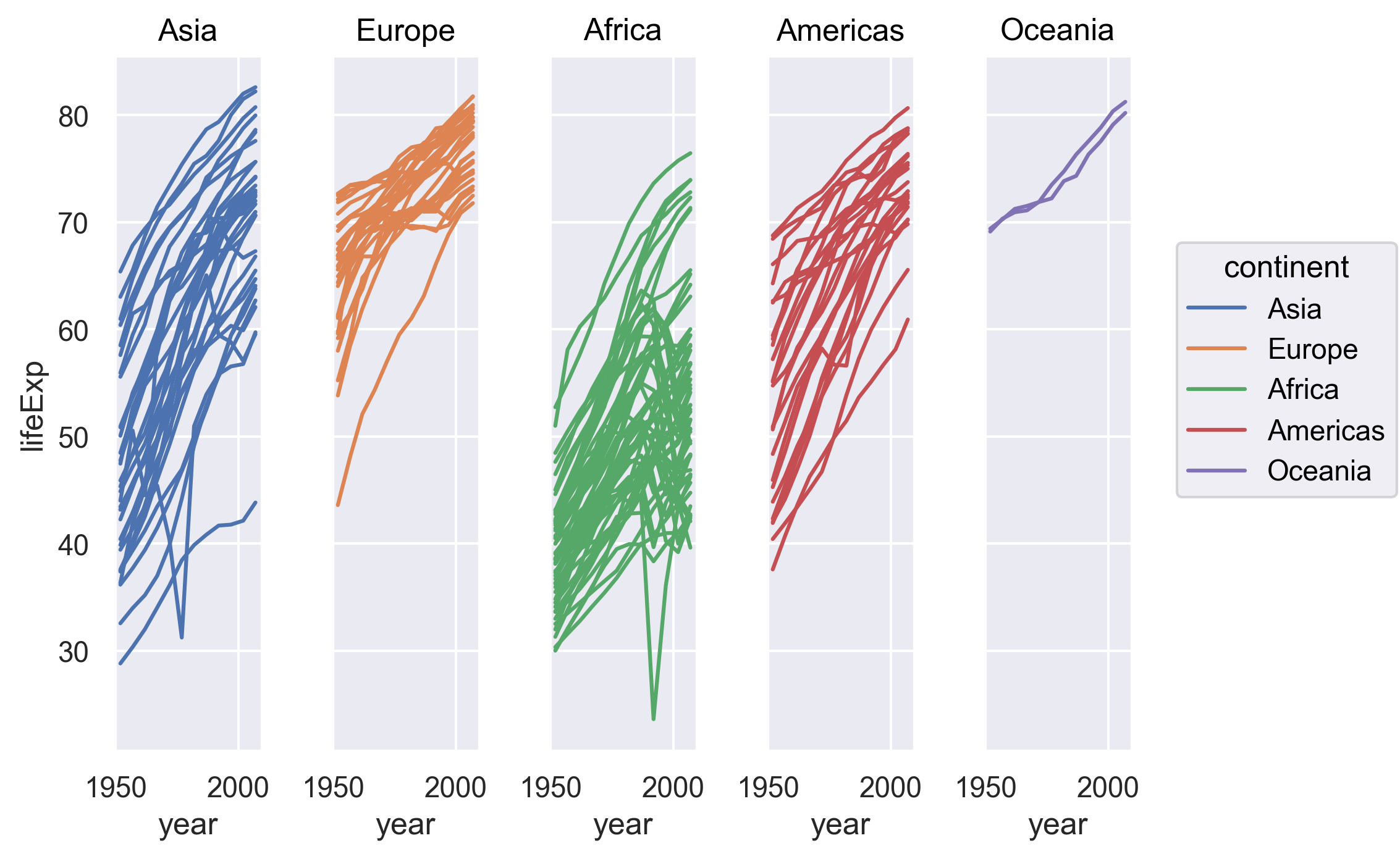

Facets

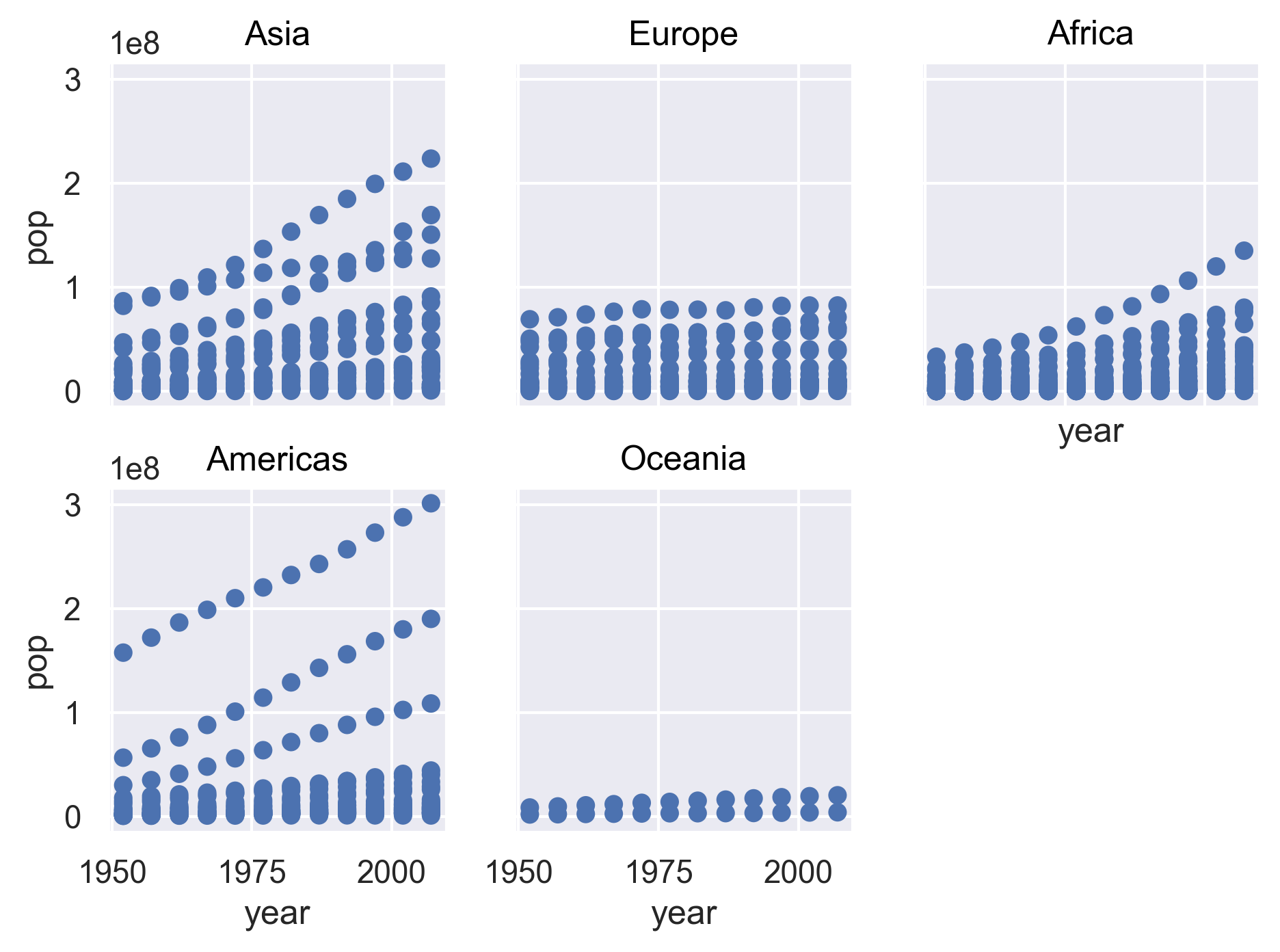

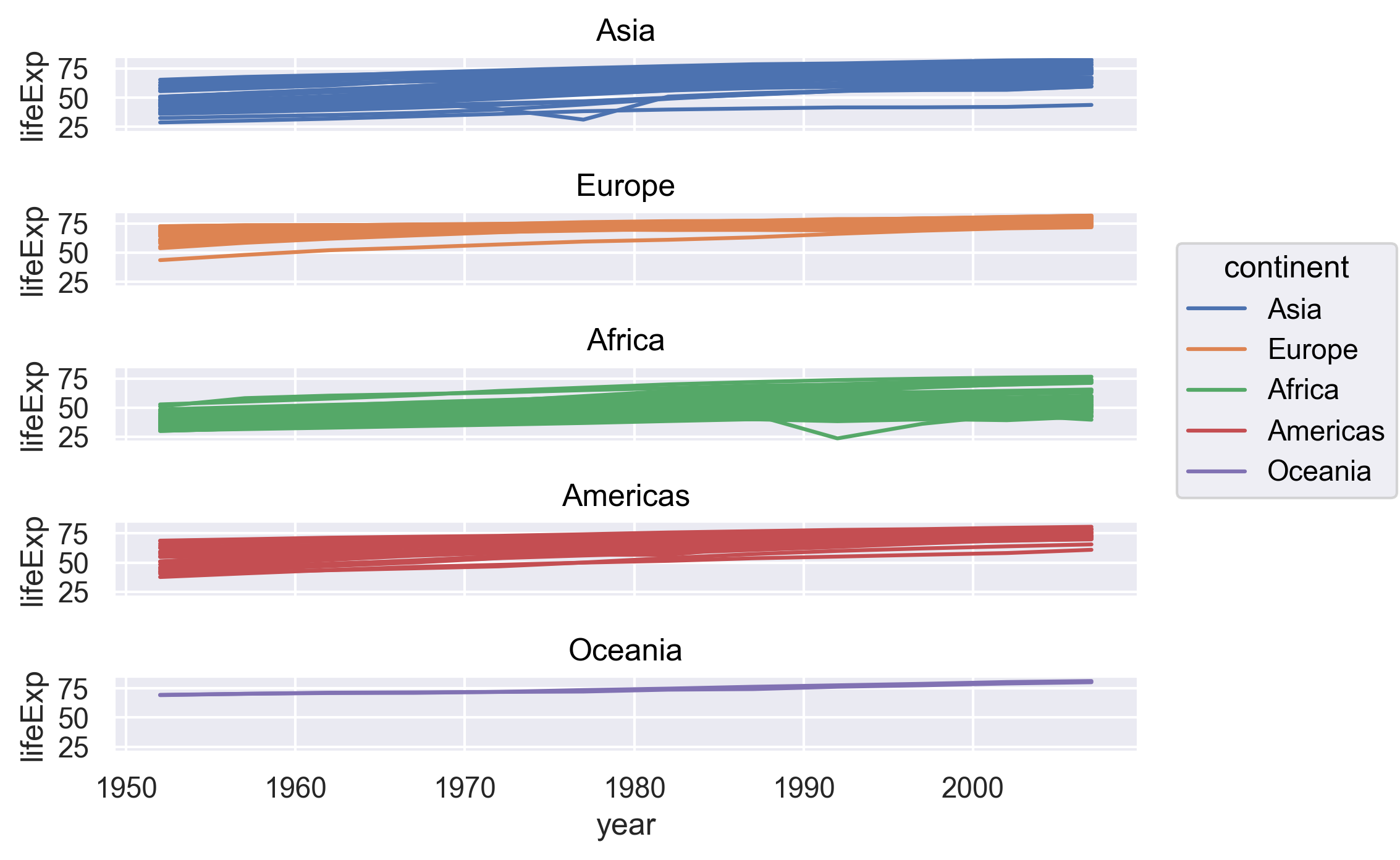

If you have a lot of different columns to try to plot or have distinguishable subgroups in your data, a powerful plotting technique called faceting might come in handy. When you facet your plot, you basically make a bunch of smaller plots and combine them together into a single image. Luckily, seaborn makes this very easy. Let’s start with the “spaghetti plot” that we made earlier.

(

so.Plot(data=gapminder,

x='year',

y='lifeExp',

group='country',

color='continent')

.add(so.Line())

)

Rather than having all the countries in a single plot, this time let’s draw a separate box (a “subplot”) for countries in each continent.

We can do this by applying the facet() method to the plot.

(

so.Plot(data=gapminder,

x='year',

y='lifeExp',

group='country',

color='continent')

.add(so.Line())

.facet('continent')

)

Note that we now have a separate subplot for the countries in each continent. Faceted plots of this type are sometimes called small multiples.

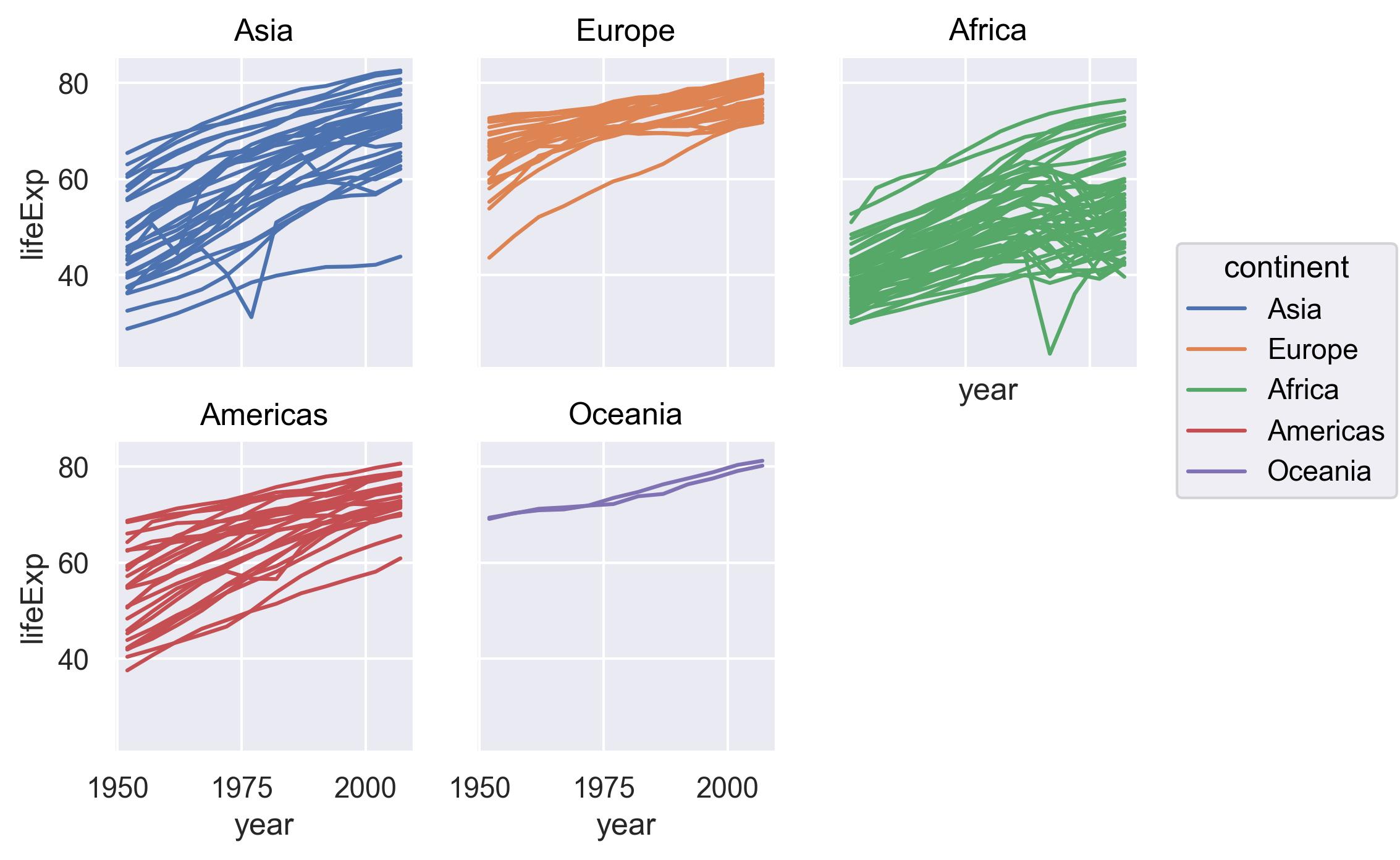

Note that all five subplots are in one row. If we want, we can “wrap” the subplots across a two-dimensional grid. For example, if we want the subplots to have a maximum of 3 columns, we can do the following.

(

so.Plot(data=gapminder,

x='year',

y='lifeExp',

group='country',

color='continent')

.add(so.Line())

.facet('continent', wrap=3)

)
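To picture what wrapping does, here is a small sketch of how five subplots could be laid out on a grid with at most 3 columns. The continent order and the row-by-row filling are assumptions for illustration; the actual layout is decided by seaborn.

```python
import math

# Assumed facet order; the real order depends on the data.
facets = ["Africa", "Americas", "Asia", "Europe", "Oceania"]
wrap = 3

# Number of rows needed when each row holds at most `wrap` subplots.
n_rows = math.ceil(len(facets) / wrap)

# divmod(i, wrap) gives (row, column) for the i-th subplot when filling row by row.
positions = {name: divmod(i, wrap) for i, name in enumerate(facets)}
print(n_rows, positions)
```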

By default, the facet() method will place the subplots along the columns of the grid.

If we want to place the subplots along the rows (it’s probably not a good idea in this example as we want to compare the life expectancies), we can set row='continent' when applying facet to the plot.

(

so.Plot(data=gapminder,

x='year',

y='lifeExp',

group='country',

color='continent')

.add(so.Line())

.facet(row='continent')

)

Saving plots

We’ve made a bunch of plots today, but we never talked about how to share them with our friends who aren’t running Python! It’s wise to keep all the code we used to draw the plot, but sometimes we need to make a PNG or PDF version of the plot so we can share it with our colleagues or post it to our Instagram story.

We can save a plot by applying the save() method to the plot.

(

so.Plot(data=gapminder,

x='year',

y='lifeExp',

group='country',

color='continent')

.add(so.Line())

.facet('continent', wrap=3)

.save("awesome_plot.png", bbox_inches='tight', dpi=200)

)

Saving a plot

Try rerunning one of your plots and then saving it using save. Find and open the plot to see if it worked!

Example solution

( so.Plot(data=gapminder_1997, x='lifeExp') .add(so.Bars(), so.Hist(stat='density', binwidth=5, binrange=(0, 100)), label='Histogram') .add(so.Line(color='red', linewidth=4, alpha=.7), so.KDE(), label='Kernel density') .label(x="Life expectancy", y="Density") .layout(size=(9, 4)) .save("another_awesome_plot.png", bbox_inches='tight', dpi=200) )

Check your current working directory to find the plot!

We also might want to just temporarily save a plot while we’re using Python, so that we can come back to it later.

Luckily, a plot is just an object, like any other object we’ve been working with!

Let’s try storing our histogram from earlier in an object called hist_plot.

hist_plot = (

so.Plot(data=gapminder_1997,

x='lifeExp')

.add(so.Bars(), so.Hist(stat='density', binwidth=5, binrange=(0, 100)))

)

Now if we want to see our plot again, we can just run:

hist_plot

We can also add changes to the plot. Let’s say we want to add another layer of the kernel density estimation.

hist_plot.add(so.Line(color='red'), so.KDE())

Watch out! Adding the layer does not change the hist_plot object!

If we want to change the object, we need to store our changes:

hist_plot = hist_plot.add(so.Line(color='red'), so.KDE())
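The same store-the-result pattern shows up with other Python objects whose methods return a new value instead of changing the original. A minimal sketch with a plain string (the variable names are only for illustration):

```python
label = "histogram"

# upper() returns a new string; label itself is untouched.
label.upper()
unchanged = label

# Storing the result is what actually updates the name.
label = label.upper()
print(unchanged, label)
```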

Bonus Exercise: Create and save a plot

Now try it yourself! Create your own plot using so.Plot(), store it in an object named my_plot, and save the plot using the save() method.

Example solution

my_plot = ( so.Plot(gapminder_1997, x='gdpPercap', y='lifeExp', color='continent') .add(so.Dot()) .label(x="GDP Per Capita", y="Life Expectancy", title="Do people in wealthy countries live longer?") )

my_plot.save("my_awesome_plot.png", bbox_inches='tight', dpi=200)

Bonus

Creating complex plots

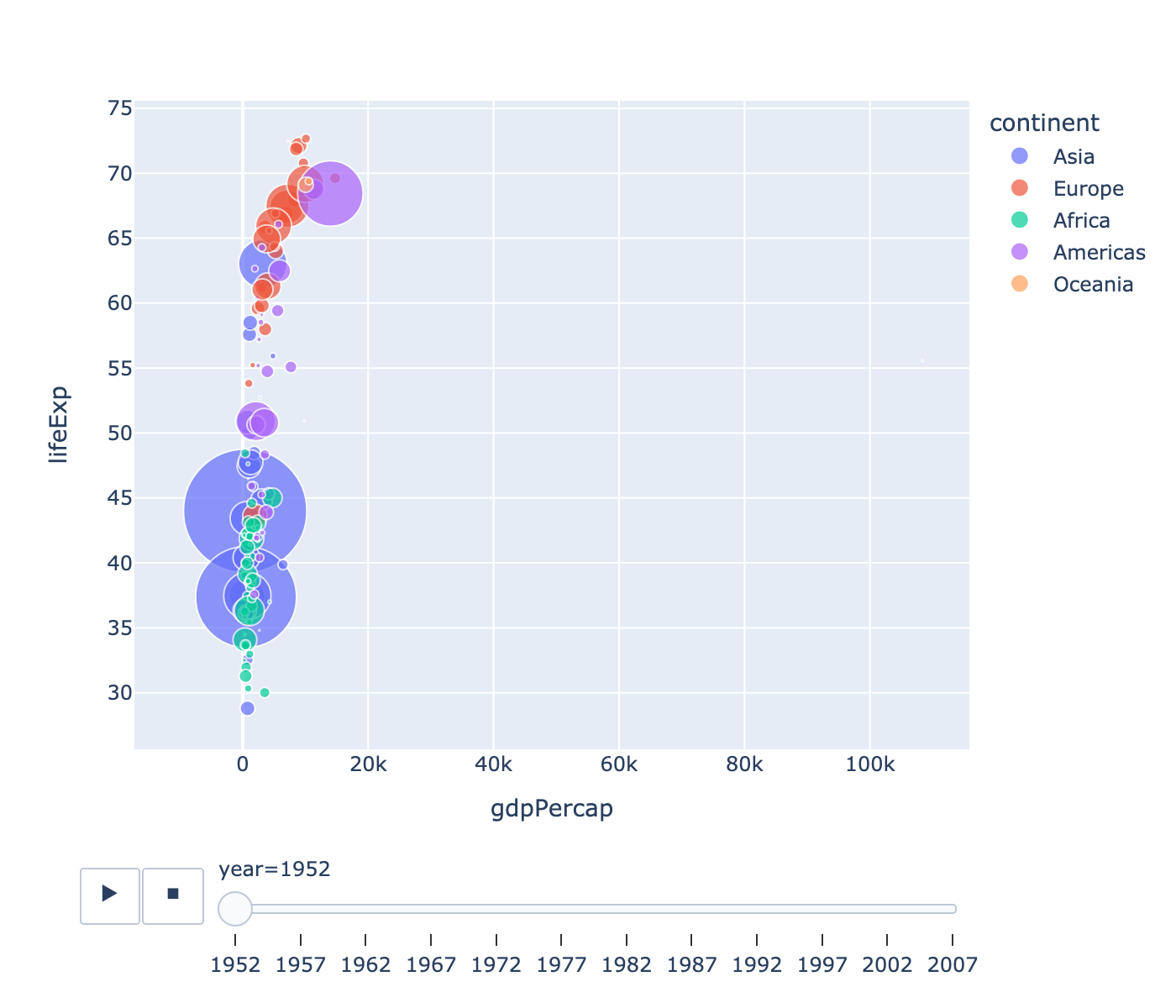

Animated plots

Sometimes it can be cool (and useful) to create animated graphs, like this famous one by Hans Rosling using the Gapminder dataset that plots GDP vs. Life Expectancy over time. Let’s try to recreate this plot!

The seaborn library that we have used so far does not support animated plots. We will use a different visualization library in Python called Plotly - a popular library for making interactive visualizations.

Plotly is already pre-installed with Anaconda. All we need to do is to import the library.

import plotly.express as px

(

px.scatter(data_frame=gapminder,

x='gdpPercap',

y='lifeExp',

size='pop',

animation_frame='year',

hover_name='country',

color='continent',

height=600,

size_max=80)

)

Awesome! This is looking sweet! Let’s make sure we understand the code above:

- The animation_frame argument of the plotting function tells it which variable should be different in each frame of our animation: in this case, we want each frame to be a different year.

- There are quite a few more parameters that give us control over the plot. Feel free to check out more options from the documentation of the px.scatter() function.

So we’ve made this cool animated plot - how do we save it?

We can apply the write_html() method to save the plot to a standalone HTML file.

(

px.scatter(data_frame=gapminder,

x='gdpPercap',

y='lifeExp',

size='pop',

animation_frame='year',

hover_name='country',

color='continent',

height=600,

size_max=80)

.write_html("./hansAnimatedPlot.html")

)

Glossary of terms

- Mark: an object that is used to graphically represent data values. Examples include dots, bars, lines, areas, bands, and paths. Each mark has a number of properties (e.g., color, size, opacity) that can be set to change its appearance.

- Facets: Dividing your data into groups and making a subplot for each.

- Layer: Each plot is made up of one or more layers. Each layer contains one mark.

- Scale: specifying mappings from data units to visual properties.

Key Points

Python is a free general purpose programming language used by many for reproducible data analysis.

Use Python library pandas’ read_csv() function to read tabular data.

Use Python library seaborn to create and save data visualizations.

The Unix Shell

Overview

Teaching: 60 min

Exercises: 30 min

Questions

What is a command shell and why would I use one?

How can I move around on my computer?

How can I see what files and directories I have?

How can I specify the location of a file or directory on my computer?

How can I create, copy, and delete files and directories?

How can I edit files?

Objectives

Explain how the shell relates to users’ programs.

Explain when and why command-line interfaces should be used instead of graphical interfaces.

Construct absolute and relative paths that identify specific files and directories.

Demonstrate the use of tab completion and explain its advantages.

Create a directory hierarchy that matches a given diagram.

Create files in the directory hierarchy using an editor or by copying and renaming existing files.

Delete, copy, and move specified files and/or directories.

Contents

Introducing the Shell

Motivation

Usually you move around your computer and run programs through graphical user interfaces (GUIs), such as Finder on Mac and Explorer on Windows. These GUIs are convenient because you can use your mouse to navigate to different folders and open different files. However, there are some things you simply can’t do from these GUIs.

The Unix Shell (or the command line) allows you to do everything you would do through Finder/Explorer, and a lot more. But it’s so scary! I thought so at first, too. Since then, I’ve learned that it’s just another way to navigate your computer and run programs, and it can be super useful for your work. For instance, you can use it to combine existing tools into a pipeline to automate analyses, you can write a script to do things for you and improve reproducibility, you can interact with remote machines and supercomputers that are far away from you, and sometimes it’s the only option for the program you want to run.

We’re going to use it to:

- Organize our Python code and plots from the Python plotting lesson.

- Perform version control using git during the rest of the workshop.

What the Shell looks like

When you open up the terminal for the first time, it can look pretty scary - it’s basically just a blank screen. Don’t worry - we’ll take you through how to use it step by step.

The first line of the shell shows a prompt - the shell is waiting for an input. When you’re following along in the lesson, don’t type the prompt when typing commands. To make the prompt the same for all of us, run this command:

PS1='$ '

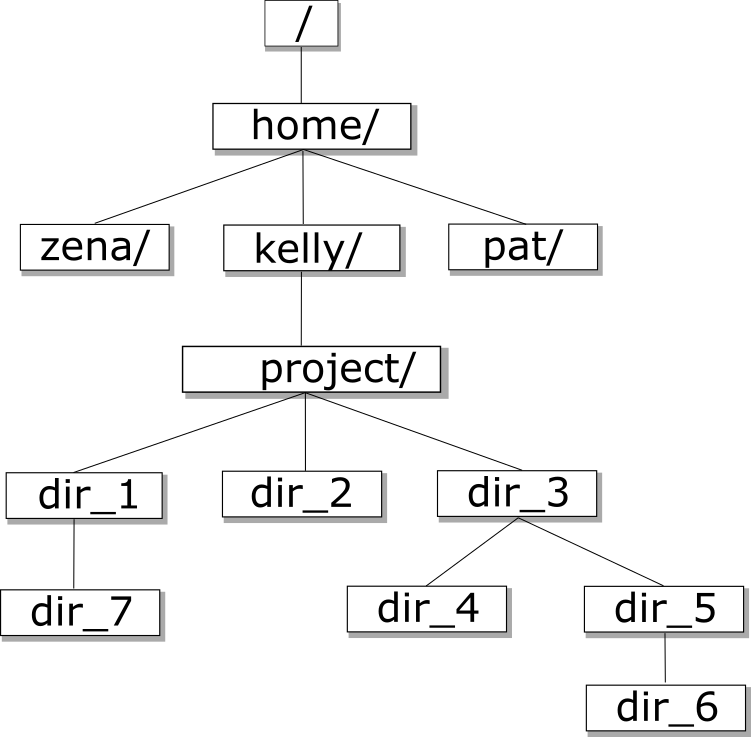

Tree Structure

The first thing we need to learn when using the shell is how to get around our computer.

The shell folder (directory) structure is the same file structure as you’re used to.

We call the way that different directories are nested the “directory tree”.

You start at the root directory (/) and you can move “up” and “down” the tree. Here’s an example:

Now that we understand directory trees a bit, let’s check it out from the command line.

We can see where we are by using the command pwd which stands for “print working directory”, or the directory we are currently in:

pwd

/home/USERNAME/

Congrats! You just ran your first command from the command line. The output is a file path to a location (a directory) on your computer.

The output will look a little different depending on what operating system you’re using:

- Mac: /Users/USERNAME

- Linux: /home/USERNAME

- Windows: /c/Users/USERNAME

Let’s check to see what’s in your home directory using the ls command, which lists all of the files in your working directory:

ls

Desktop Downloads Movies Pictures

Documents Library Music Public

You should see some files and directories you’re familiar with such as Documents and Desktop.

If you make a typo, don’t worry. If the shell can’t find a command you type, it will show you a helpful error message.

ks

ks: command not found

This error message tells us the command we tried to run, ks, is not a command that is recognized, letting us know we might have made a mistake when typing.

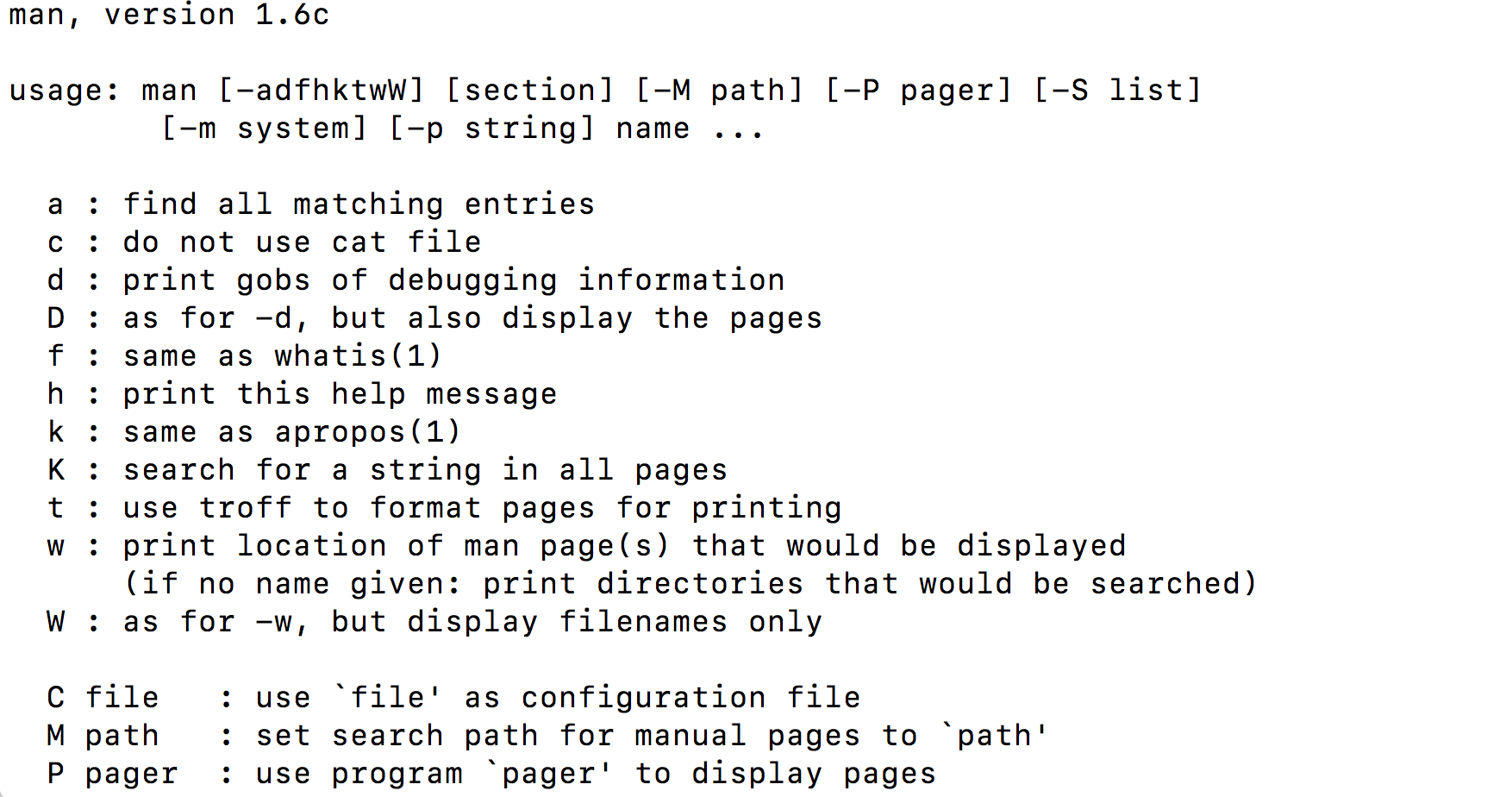

Man and Help

Often we’ll want to learn more about how to use a certain command such as ls. There are several different ways you can learn more about a specific command.

Some commands have additional information that can be found by using the -h or --help flags. This will print brief documentation for the command:

man -h

man --help

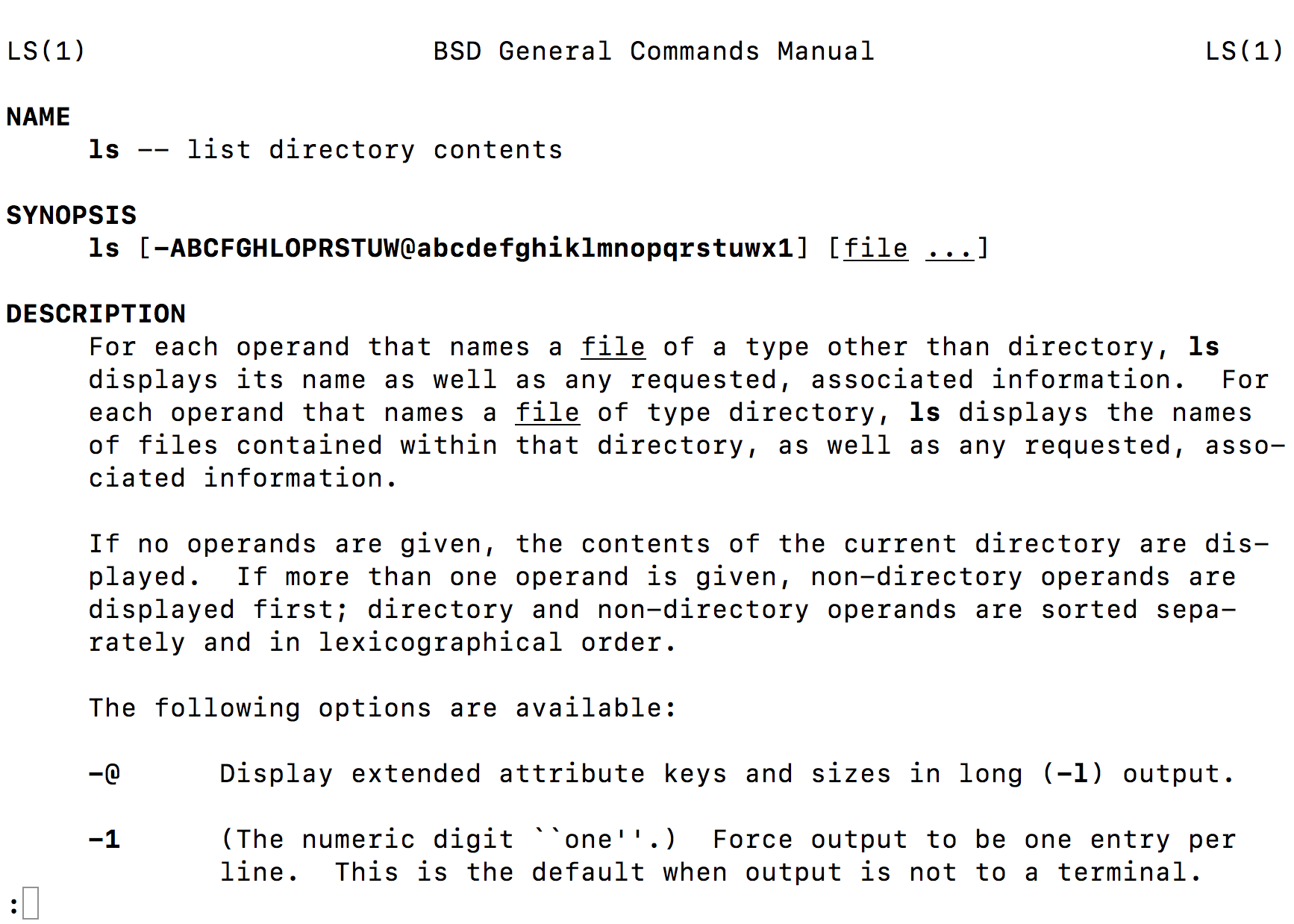

Other commands, such as ls, don’t have help flags, but have manual pages with more information. We can use the man command to open the manual page and view the description of a command and its options. For example, if you want to know more about the options of ls you can type man ls on the command line:

man ls

On the manual page for ls, we see a section titled options. These options, also called flags, are like arguments in Python functions, and allow us to customize how ls runs.

To get out of the man page, press q.

Sometimes, commands will have multiple flags that we want to use at the same time. For example, ls has a flag -F that displays a slash after all directories, as well as a flag -a that includes hidden files and directories (ones that begin with a .). There are two ways to run ls using both of these flags:

ls -F -a

ls -Fa

Note that when we run ls with the -a flag, we see a . and a .. in the directory. The . corresponds to the current directory we are in and the .. corresponds to the directory directly above us in the directory tree. We’ll learn more about why this is useful in a bit.

Using the Manual Pages

Use man to open the manual for the command ls. What flags would you use to…

- Print files in order of size?

- Print files in order of the last time they were edited?

- Print more information about the files?

- Print more information about the files with unit suffixes?

- Print files in order of size AND also print more information about the files?

Solution

- ls -S

- ls -t

- ls -l

- ls -lh

- ls -lS

Next, let’s move to our Desktop. To do this, we use cd to change directories.

Run the following command:

cd Desktop

Let’s see if we’re in the right place:

pwd

/home/USERNAME/Desktop

We just moved down the directory tree into the Desktop directory.

What files and directories do you have on your Desktop? How can you check?

ls

list.txt

un-report

notes.pdf

Untitled.png

Your Desktop will likely look different, but the important thing is that you see the folder we worked in for the Python plotting lesson.

Is the un-report directory listed on your Desktop?

How can we get into the un-report directory?

cd un-report

We just went down the directory tree again.

Let’s see what files are in un-report:

ls

awesome_plot.png

awesome_hist_plot.png

gapminder_1997.csv

gapminder_data.csv

gdp_population.ipynb

Is it what you expect? Are the files you made in the Python plotting lesson there?

Now let’s move back up the directory tree. First, let’s try this command:

cd Desktop

cd: Desktop: No such file or directory

This doesn’t work because the Desktop directory is not within the directory that we are currently in.

To move up the directory tree, you can use .., which is the parent of the current directory:

cd ..

pwd

/home/USERNAME/Desktop

Everything that we’ve been doing is working with file paths. We tell the computer where we want to go using cd plus the file path. We can also tell the computer what files we want to list by giving a file path to ls:

ls un-report

awesome_plot.png

awesome_hist_plot.png

gapminder_1997.csv

gapminder_data.csv

gdp_population.ipynb

ls ..

list.txt

un-report

notes.pdf

Untitled.png

What happens if you just type cd without a file path?

cd

pwd

/home/USERNAME

It takes you back to your home directory!

To get back to your projects directory you can use the following command:

cd Desktop/un-report

We have been using relative paths, meaning you use your current working directory to get to where you want to go.

You can also use the absolute path, or the entire path from the root directory. What’s listed when you use the pwd command is the absolute path:

pwd

You can also use ~ for the path to your home directory:

cd ~

pwd

/home/USERNAME

Absolute vs Relative Paths

Starting from /Users/amanda/data, which of the following commands could Amanda use to navigate to her home directory, which is /Users/amanda?

- cd .

- cd /

- cd /home/amanda

- cd ../..

- cd ~

- cd home

- cd ~/data/..

- cd

- cd ..

Solution

- No: . stands for the current directory.

- No: / stands for the root directory.

- No: Amanda’s home directory is /Users/amanda.

- No: this goes up two levels, i.e. ends in /Users.

- Yes: ~ stands for the user’s home directory, in this case /Users/amanda.

- No: this would navigate into a directory home in the current directory if it exists.

- Yes: unnecessarily complicated, but correct.

- Yes: shortcut to go back to the user’s home directory.

- Yes: goes up one level.
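The answers above can be checked with a short Python sketch that resolves each path the way the shell would. The helper `cd` is hypothetical and simplified: it only does path arithmetic, ignoring symbolic links and whether the directories actually exist.

```python
import posixpath

start = "/Users/amanda/data"
home = "/Users/amanda"

def cd(current, target, home_dir=home):
    """Return the directory that `cd target` would lead to from `current`."""
    if target == "":
        # cd with no argument goes to the home directory.
        return home_dir
    if target == "~" or target.startswith("~/"):
        # ~ is shorthand for the home directory.
        target = home_dir + target[1:]
    # join() keeps absolute targets as-is; normpath() resolves . and ..
    return posixpath.normpath(posixpath.join(current, target))

options = [".", "/", "/home/amanda", "../..", "~", "home", "~/data/..", "", ".."]
results = [cd(start, option) == home for option in options]
for option, reached_home in zip(options, results):
    print(repr(option), reached_home)
```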

Working with files and directories

Now that we know how to move around your computer using the command line, our next step is to organize the project that we started in the Python plotting lesson. You might ask: why would I use the command line when I could just use the GUI? My best response is that if you ever need to use a high-performance computing cluster (such as Great Lakes at the University of Michigan), you’ll have no other option. You might also come to like it more than clicking around to get places once you get comfortable, because it’s a lot faster!

First, let’s make sure we’re in the right directory (the un-report directory):

pwd

/home/USERNAME/Desktop/un-report

If you’re not there, cd to the correct place.

Next, let’s remind ourselves what files are in this directory:

ls

awesome_plot.png

awesome_hist_plot.png

gapminder_1997.csv

gapminder_data.csv

gdp_population.ipynb

You can see that right now all of our files are in our main directory. However, it can start to get crazy if you have too many different files of different types all in one place! We’re going to create a better project directory structure that will help us organize our files. This is really important, particularly for larger projects. If you’re interested in learning more about structuring computational biology projects in particular, here is a useful article.

What do you think would be a good way to organize our files?

One way is the following:

.

├── code

│   └── gdp_population.ipynb

├── data

│   ├── gapminder_1997.csv

│   └── gapminder_data.csv

└── figures

    ├── awesome_plot.png

    └── awesome_hist_plot.png

The Jupyter notebook goes in the code directory, the gapminder datasets go in the data directory, and the figures go in the figures directory. This way, all of the files are organized into a clearer overall structure.

A few notes about naming files and directories:

- Don’t use white spaces because they’re used to break arguments on the command line, so it makes things like moving and viewing files more complicated. Instead you can use a dash (-) or an underscore (_).

- Don’t start names with a dash (-) because the shell will interpret it incorrectly.

- Stick with letters, numbers, periods, dashes, and underscores, because other symbols (e.g. ^, &) have special meanings.

- If you have to refer to names of files or directories with whitespace or other special characters, use double quotes. For example, if you wanted to change into a directory called My Code, you will want to type cd "My Code", not cd My Code.
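To see why spaces cause trouble, here is a minimal sketch of the quoting rule. It assumes a throwaway directory created with mktemp (not part of the un-reports project), so it can be run safely anywhere; the directory names are only illustrative.

```shell
# Work in a scratch directory so nothing in your project is touched.
scratch=$(mktemp -d)
cd "$scratch"

# Quotes keep the space inside a single argument: one directory is created.
mkdir "My Code"

# Without quotes the shell splits on the space, so mkdir receives
# two separate arguments and creates two directories.
mkdir My Notes

ls
```

The listing should contain three entries: My Code, plus the two accidental directories My and Notes.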

So how do we make our directory structure look like this?

First, we need to make a new directory. Let’s start with the code directory. To do this, we use the command mkdir plus the name of the directory we want to make:

mkdir code

Let’s check that the directory now exists:

ls

awesome_plot.png

awesome_hist_plot.png

code

gapminder_1997.csv

gapminder_data.csv

gdp_population.ipynb

How can we check to see if there’s anything in the code directory?

ls code

Nothing in there yet, which is expected since we just made the directory.

The next step is to move the gdp_population.ipynb file into the code directory. To do this, we use the mv command. The first argument after mv is the file you want to move, and the second argument is the place you want to move it:

mv gdp_population.ipynb code

Okay, let’s see what’s in our current directory now:

ls

awesome_plot.png

awesome_hist_plot.png

code

gapminder_1997.csv

gapminder_data.csv

gdp_population.ipynb is no longer there! Where did it go? Let’s check the code directory, where we moved it to:

ls code

gdp_population.ipynb

There it is!
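The same make-then-move pattern can be tried out without touching the real project. This is a minimal sketch that assumes a scratch directory from mktemp and an empty placeholder file created with touch; the name notebook.ipynb is hypothetical.

```shell
# Scratch directory and an empty stand-in file (both are assumptions
# made for this example; they are not part of un-reports).
scratch=$(mktemp -d)
cd "$scratch"
touch notebook.ipynb

# Make the destination directory, then move the file into it.
mkdir code
mv notebook.ipynb code

# The file is gone from the current directory...
ls

# ...and now lives inside code.
ls code
```

Note that if code did not already exist as a directory, mv would instead rename the file to code, so it is worth creating the directory first.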

Creating directories and moving files

Create a data directory and move gapminder_data.csv and gapminder_1997.csv into the newly created data directory.

Solution

From the un-reports directory:

mkdir data

mv gapminder_data.csv data

mv gapminder_1997.csv data

Okay, now we have the code and data in the right place. But we still have several figures that should be in their own directory.

First, let’s make a figures directory:

mkdir figures

Next, we have to move the figures. But we have so many figures! It’d be annoying to move them one at a time. Thankfully, we can use a wildcard to move them all at once. Wildcards are used to match files and directories to patterns.

One example of a wildcard is the asterisk, *. This special character is interpreted as “zero or more characters of any kind”.

Let’s see how we can use a wildcard to list only files with the extension .png:

ls *png

awesome_plot.png

awesome_hist_plot.png

See how only the files ending in .png were listed? The shell expands the wildcard to create a list of matching file names before running the command. Can you guess how we move all of these files at once to the figures directory?

mv *png figures

We can also use the wildcard to list all of the files in all of the directories:

ls *

code:

gdp_population.ipynb

data:

gapminder_1997.csv gapminder_data.csv

figures:

awesome_plot.png awesome_hist_plot.png

This output shows each directory name, followed by its contents on the next line. As you can see, all of the files are now in the right place!
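The shell expands a wildcard into the matching names before the command runs. One way to see that expansion directly is to put echo in front of a command, since echo simply prints the arguments it receives. The sketch below assumes a scratch directory and empty placeholder files that mimic some of the names in un-reports.

```shell
# Scratch directory with empty stand-in files (assumptions for this example).
scratch=$(mktemp -d)
cd "$scratch"
touch awesome_plot.png awesome_hist_plot.png gapminder_1997.csv
mkdir figures

# The shell replaces *.png with the matching names before echo runs,
# so this prints the command as mv would actually receive it.
echo mv *.png figures

# Run the real command, then confirm where the files ended up.
mv *.png figures
ls figures
```

Previewing a wildcard with echo (or ls) before using it with mv is a cheap way to avoid moving more files than intended.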

Working with Wildcards

Suppose we are in a directory containing the following files:

cubane.pdb ethane.pdb methane.pdb octane.pdb pentane.pdb propane.pdb README.md

What would be the output of the following commands?

- ls *

- ls *.pdb

- ls *ethane.pdb

- ls *ane

- ls p*

Solution

- cubane.pdb ethane.pdb methane.pdb octane.pdb pentane.pdb propane.pdb README.md

- cubane.pdb ethane.pdb methane.pdb octane.pdb pentane.pdb propane.pdb

- ethane.pdb methane.pdb

- None. None of the files end in only ane. This would have listed files if ls *ane* were used instead.

- pentane.pdb propane.pdb
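If you would like to check these answers yourself, one option is to recreate the file names in a scratch directory. This sketch assumes empty placeholder files made with touch; only the names matter for wildcard matching.

```shell
# Scratch directory holding empty files with the names from the exercise.
scratch=$(mktemp -d)
cd "$scratch"
touch cubane.pdb ethane.pdb methane.pdb octane.pdb pentane.pdb propane.pdb README.md

ls *.pdb          # every file ending in .pdb
ls *ethane.pdb    # ethane.pdb and methane.pdb
ls p*             # pentane.pdb and propane.pdb

# No file name ends in "ane", so the pattern matches nothing. With bash's
# default settings the unmatched pattern is passed to ls unchanged, and
# ls reports that no such file exists.
ls *ane || echo "no match for *ane"
```

So in practice "None" shows up as an error message from ls rather than as an empty listing.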

Viewing Files

To view and navigate the contents of a file we can use the command less. This will open a full screen view of the file.

For instance, we can run the command less on our gapminder_data.csv file:

less data/gapminder_data.csv

To navigate, press spacebar to scroll to the next page and b to scroll up to the previous page. You can also use the up and down arrows to scroll line-by-line. Note that less defaults to line wrapping, meaning that any lines longer than the width of the screen will be wrapped to the next line. To exit less, press the letter q.

One particularly useful flag for less is -S which cuts off really long lines (rather than having the text wrap around):

less -S data/gapminder_data.csv

Note that not all file types can be viewed with less. While we can open PDFs and Excel spreadsheets easily with programs on our computer, less doesn’t render them well on the command line. For example, if we try to less a .png file we will see a warning.

less figures/awesome_plot.png

figures/awesome_plot.png may be a binary file. See it anyway?

If we say “yes”, less will render the file but it will appear as a seemingly random display of characters that won’t make much sense to us.

Glossary of terms

- root: the very top of the file system tree

- absolute path: the location of a specific file or directory starting from the root of the file system tree

- relative path: the location of a specific file or directory starting from where you currently are in the file system tree

- pwd: Print working directory - prints the absolute path from the root directory to the directory where you currently are.

- ls: List files - lists files in the current directory. You can provide a path to list files in another directory as well (ls [path]).

- cd [path]: Change directories - move to another folder.

- mkdir: Make directory - creates a new directory.

- ..: The directory one level up in the file system tree (cd .. will move you up one level)

- mv: Move - move a file to a new location (mv [file] [/path/to/new/location]) OR rename a file (mv [oldfilename] [newfilename])

- less: quick way to view a document without using a full text editor

- man: Manual - allows you to view the bash manual for another command (e.g. man ls)

- -h/--help: Help - argument that pulls up the help manual for a program

- nano: a user-friendly text editor

- *: Wildcard - matches zero or more characters in a filename

Key Points

A shell is a program whose primary purpose is to read commands and run other programs.

Tab completion can help you save a lot of time and frustration.

The shell’s main advantages are its support for automating repetitive tasks and its capacity to access networked machines.

Information is stored in files, which are stored in directories (folders).

Directories nested in other directories form a directory tree.

cd [path] changes the current working directory.

ls [path] prints a listing of a specific file or directory.

ls lists the current working directory.

pwd prints the user’s current working directory.

/ is the root directory of the whole file system.

A relative path specifies a location starting from the current location.

An absolute path specifies a location from the root of the file system.

Directory names in a path are separated with / on Unix, but \ on Windows.

.. means ‘the directory above the current one’; . on its own means ‘the current directory’.

cp [old] [new] copies a file.

mkdir [path] creates a new directory.

mv [old] [new] moves (renames) a file or directory.

rm [path] removes (deletes) a file.

* matches zero or more characters in a filename.

The shell does not have a trash bin: once something is deleted, it’s really gone.
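The file-handling commands in this list can be compared side by side in a scratch directory. This is a minimal sketch, assuming a temporary directory from mktemp and hypothetical placeholder file names (notes.txt and its variants).

```shell
# Scratch directory with one empty stand-in file (assumptions for this example).
scratch=$(mktemp -d)
cd "$scratch"
touch notes.txt

# cp leaves the original in place and creates a second file.
cp notes.txt notes_backup.txt

# mv with a new name in the same directory is a rename: notes.txt disappears.
mv notes.txt notes_final.txt

# rm deletes immediately; there is no trash bin to recover from.
rm notes_backup.txt

ls
```

Only notes_final.txt should remain in the listing.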

Intro to Git & GitHub

Overview

Teaching: 90 min

Exercises: 60 min

Questions

What is version control and why should I use it?

How do I get set up to use Git?

How do I share my changes with others on the web?

How can I use version control to collaborate with other people?

Objectives

Explain what version control is and why it’s useful.

Configure git the first time it is used on a computer.

Learn the basic git workflow.

Push, pull, or clone a remote repository.

Describe the basic collaborative workflow with GitHub.

Contents

- Background

- Setting up git

- Creating a Repository

- Tracking Changes

- Intro to GitHub

- Collaborating with GitHub

- BONUS

Background

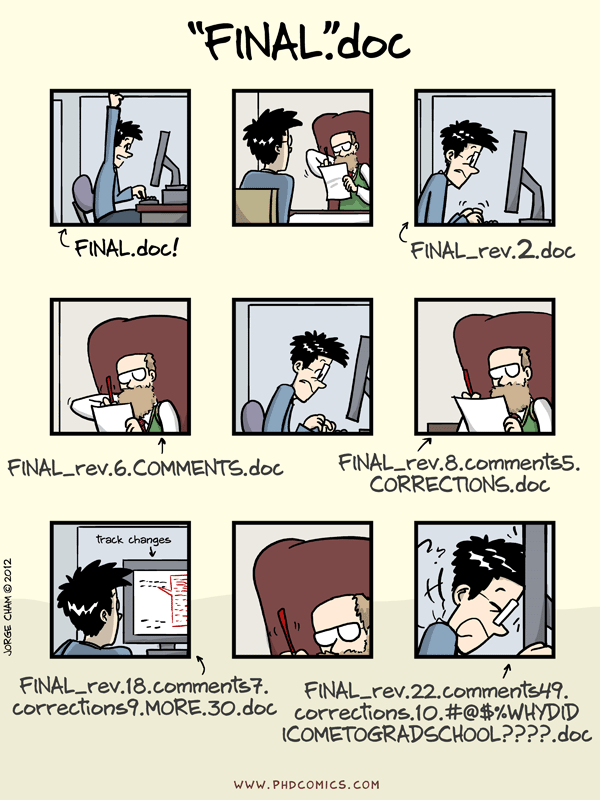

We’ll start by exploring how version control can be used to keep track of what one person did and when. Even if you aren’t collaborating with other people, automated version control is much better than this situation:

“Piled Higher and Deeper” by Jorge Cham, http://www.phdcomics.com

We’ve all been in this situation before: it seems ridiculous to have multiple nearly-identical versions of the same document. Some word processors let us deal with this a little better, such as Microsoft Word’s Track Changes, Google Docs’ version history, or LibreOffice’s Recording and Displaying Changes.

Version control systems start with a base version of the document and then record changes you make each step of the way. You can think of it as a recording of your progress: you can rewind to start at the base document and play back each change you made, eventually arriving at your more recent version.

Once you think of changes as separate from the document itself, you can then think about “playing back” different sets of changes on the base document, ultimately resulting in different versions of that document. For example, two users can make independent sets of changes on the same document.

Unless multiple users make changes to the same section of the document - a conflict - you can incorporate two sets of changes into the same base document.

A version control system is a tool that keeps track of these changes for us, effectively creating different versions of our files. It allows us to decide which changes will be made to the next version (each record of these changes is called a commit), and keeps useful metadata about them. The complete history of commits for a particular project and their metadata make up a repository. Repositories can be kept in sync across different computers, facilitating collaboration among different people.

Paper Writing

Imagine you drafted an excellent paragraph for a paper you are writing, but later ruin it. How would you retrieve the excellent version of your conclusion? Is it even possible?

Imagine you have 5 co-authors. How would you manage the changes and comments they make to your paper? If you use LibreOffice Writer or Microsoft Word, what happens if you accept changes made using the Track Changes option? Do you have a history of those changes?

Solution

Recovering the excellent version is only possible if you created a copy of the old version of the paper. The danger of losing good versions often leads to the problematic workflow illustrated in the PhD Comics cartoon at the top of this page.

Collaborative writing with traditional word processors is cumbersome. Either every collaborator has to work on a document sequentially (slowing down the process of writing), or you have to send out a version to all collaborators and manually merge their comments into your document. The ‘track changes’ or ‘record changes’ option can highlight changes for you and simplifies merging, but as soon as you accept changes you will lose their history. You will then no longer know who suggested that change, why it was suggested, or when it was merged into the rest of the document. Even online word processors like Google Docs or Microsoft Office Online do not fully resolve these problems.

Setting up Git